1. General notes about information in this file

All background rate plots in this file that use only the non-tracking data were normalized by the wrong time: instead of the active shutter open time of the non-tracking part, the active time of all the data was used. As a result, these background rates are about 1/20 too low. Plots generated with all data (e.g. sec. 29.1.11.5, the latest at the moment) use the correct numbers, as do all plots produced after the date noted above.
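The size of this error can be checked with the summed active times from the table in sec. 2. This assumes those are the times the plots should have used. Normalizing by the total active time instead of the active background time scales every rate by the ratio of the two. A minimal sketch:

```nim
const
  activeTotal = 3318.38       # h, active time of all data (Run-2 + Run-3)
  activeBackground = 3157.35  # h, active time of the non-tracking data only

# wrong rate = counts / activeTotal, correct rate = counts / activeBackground
let scale = activeBackground / activeTotal  # wrong / correct
let deficit = 1.0 - scale                   # relative amount the rates are too low
echo "wrong / correct = ", scale
echo "too low by ", deficit * 100.0, " %, i.e. about 1/", 1.0 / deficit
```

The deficit is roughly the share of the active time spent in solar tracking, which is where the "about 1/20" comes from.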

2. Reminder about data taking & detector properties

Recap of the InGrid data taking campaigns.

Run-2: October 2017 - March 2018

Run-3: October 2018 - December 2018

| | Solar tracking [h] | Background [h] | Active tracking [h] | Active tracking (eventDuration) [h] | Active background [h] | Total time [h] | Active time [h] | Active fraction |
|---|---|---|---|---|---|---|---|---|
| Run-2 | 106.006 | 2401.43 | 93.3689 | 93.3689 | 2144.67 | 2507.43 | 2238.78 | 0.89285842 |
| Run-3 | 74.2981 | 1124.93 | 67.0066 | 67.0066 | 1012.68 | 1199.23 | 1079.6 | 0.90024432 |
| Total | 180.3041 | 3526.36 | 160.3755 | 160.3755 | 3157.35 | 3706.66 | 3318.38 | 0.89524801 |

Ratio of active background time to active tracking time (using the numbers of sec. 2.1): 3156.8 / 159.8083 = 19.7536673627

Calibration data:

| | Calibration [h] | Active calibration [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|
| Run-2 | 107.422 | 2.60139 | 107.422 | 2.60139 |
| Run-3 | 87.0632 | 3.52556 | 87.0632 | 3.52556 |

| | Solar tracking | Background | Calibration |
|---|---|---|---|
| Run-2 | 106 h | 2401 h | 107 h |
| Run-3 | 74 h | 1125 h | 87 h |
| Total | 180 h | 3526 h | 194 h |

These numbers can be obtained for example with ./../../CastData/ExternCode/TimepixAnalysis/Tools/writeRunList/writeRunList.nim by running it on Run-2 and Run-3 files. They correspond to the total time and not the active detector time!

The following detector features were used:

- \(\SI{300}{\nano\meter} \ce{SiN}\) entrance window available in Run-2 and Run-3

central InGrid surrounded by 6 additional InGrids for background suppression of events

available in Run-2 and Run-3

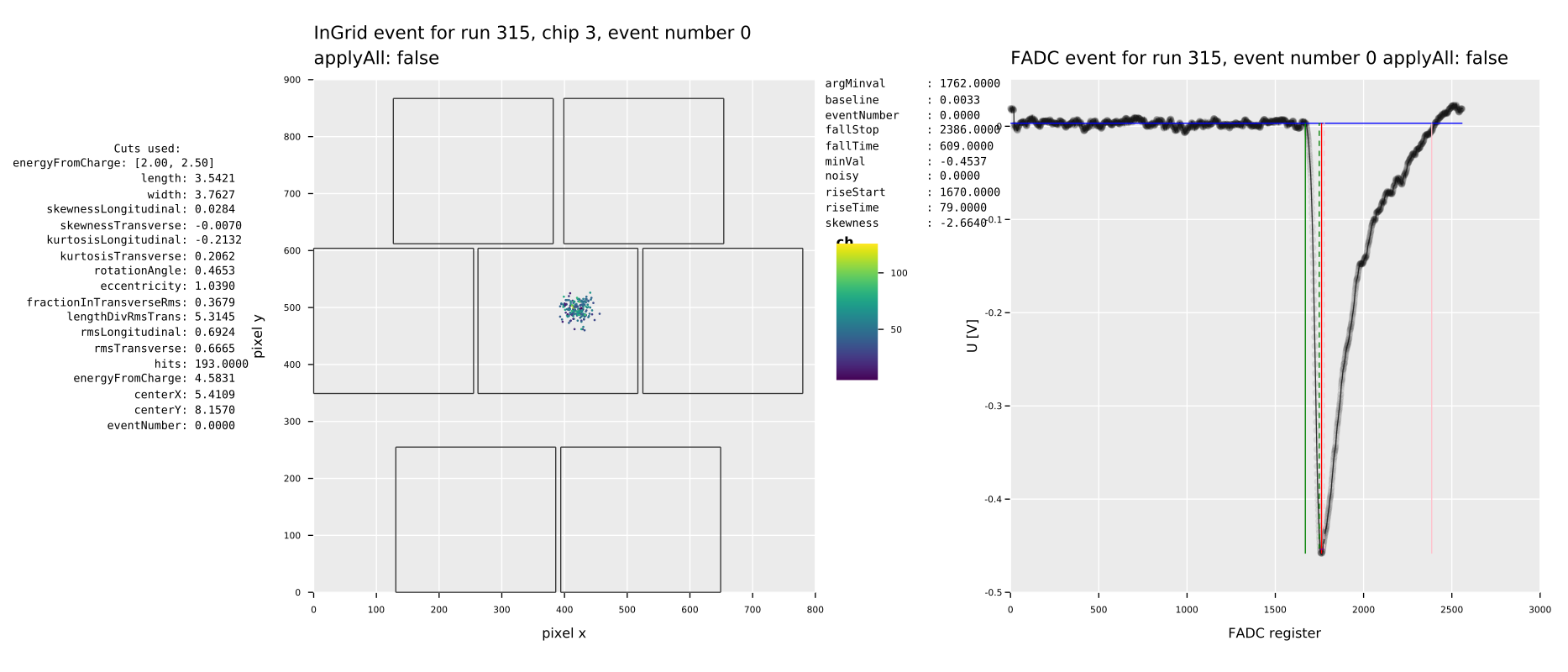

recording analog grid signals from central chip with an FADC for background suppression based on signal shapes and more importantly as trigger for events above \(\mathcal{O}(\SI{1.2}{\kilo\electronvolt})\) (include FADC spectrum somewhere?)

available in Run-2 and Run-3

- two veto scintillators:

- SCL (large "horizontal" scintillator pad) to veto events from cosmics or induced X-ray fluorescence photons (available in Run-3)

- SCS (small scintillator behind anode plane) to veto possible cosmics orthogonal to readout plane (available in Run-3)

Overview of working (\green{o}), mostly working (\orange{m}) and non-working (\red{x}) features as a table:

| Feature | Run 2 | Run 3 |
|---|---|---|
| Septemboard | \green{o} | \green{o} |
| FADC | \orange{m} | \green{o} |
| Veto scinti | \red{x} | \green{o} |
| SiPM | \red{x} | \green{o} |

2.1. Calculate total tracking and background times used above

UPDATE: The numbers in this section are outdated as well. The most up-to-date numbers are in ./../../phd/thesis.html. Those are now the numbers in the table of the section above!

The numbers in this section were generated with the ./../../CastData/ExternCode/TimepixAnalysis/Tools/writeRunList/writeRunList.nim tool:

```sh
writeRunList -b ~/CastData/data/DataRuns2017_Reco.h5 -c ~/CastData/data/CalibrationRuns2017_Reco.h5
writeRunList -b ~/CastData/data/DataRuns2018_Reco.h5 -c ~/CastData/data/CalibrationRuns2018_Reco.h5
```

This produces the following table:

| | Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] | Active fraction |
|---|---|---|---|---|---|---|---|
| Run-2 | 106.006 | 2391.16 | 92.8017 | 2144.12 | 2497.16 | 2238.78 | 0.89653046 |
| Run-3 | 74.2981 | 1124.93 | 67.0066 | 1012.68 | 1199.23 | 1079.6 | 0.90024432 |
| Total | 180.3041 | 3516.09 | 159.8083 | 3156.8 | 3696.39 | 3318.38 | 0.89773536 |

(The totals were computed with `org-table-sum` (`C-c +`) on each column.)
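As a scriptable alternative to `org-table-sum`, here is a minimal Nim sketch that recomputes the totals and the active fraction (active time / total time) of the table above. Only two of the columns are shown; the type name is purely illustrative:

```nim
type RunTimes = object
  name: string
  total, active: float  # total and active time [h]

let runs = [RunTimes(name: "Run-2", total: 2497.16, active: 2238.78),
            RunTimes(name: "Run-3", total: 1199.23, active: 1079.6)]

var sumTotal, sumActive = 0.0
for r in runs:
  sumTotal += r.total
  sumActive += r.active
  echo r.name, ": active fraction = ", r.active / r.total
echo "Total: ", sumTotal, " h, active fraction = ", sumActive / sumTotal
```

Note that the total active fraction is the ratio of the summed times, not the mean of the per-run fractions.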

2.1.1. Outdated numbers

The numbers below were the ones obtained from a faulty calculation. See ./../journal.org#sec:journal:2023_07_08:missing_time

These numbers yielded the following table:

| | Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] | Active fraction |
|---|---|---|---|---|---|---|---|
| Run-2 | 106.006 | 2401.43 | 94.1228 | 2144.67 | 2507.43 | 2238.78 | 0.89285842 |
| Run-3 | 74.2981 | 1124.93 | 66.9231 | 1012.68 | 1199.23 | 1079.60 | 0.90024432 |
| Total | 180.3041 | 3526.36 | 161.0460 | 3157.35 | 3706.66 | 3318.38 | 0.89524801 |

Run-2:

```sh
./writeRunList -b ~/CastData/data/DataRuns2017_Reco.h5 -c ~/CastData/data/CalibrationRuns2017_Reco.h5
```

```text
Type: rtBackground
total duration: 14 weeks, 6 days, 11 hours, 25 minutes, 59 seconds, 97 milliseconds, 615 microseconds, and 921 nanoseconds
In hours: 2507.433082670833
active duration: 2238.783333333333
trackingDuration: 4 days, 10 hours, and 20 seconds
In hours: 106.0055555555556
active tracking duration: 94.12276972527778
nonTrackingDuration: 14 weeks, 2 days, 1 hour, 25 minutes, 39 seconds, 97 milliseconds, 615 microseconds, and 921 nanoseconds
In hours: 2401.427527115278
active background duration: 2144.666241943055
```

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 106.006 | 2401.43 | 94.1228 | 2144.67 | 2507.43 | 2238.78 |
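The "In hours" values in these logs are the printed durations converted to fractional hours. The minimal sketch below reproduces the Run-2 total with Nim's `std/times`. That writeRunList itself works with `Duration` is only an assumption based on the matching output format:

```nim
import std/times

proc toHours(d: Duration): float =
  ## Fractional hours; `inHours` would truncate to a whole number.
  d.inNanoseconds.float / 3.6e12

# "total duration" of the Run-2 background data, copied from the log above
let run2Total = initDuration(weeks = 14, days = 6, hours = 11, minutes = 25,
                             seconds = 59, milliseconds = 97,
                             microseconds = 615, nanoseconds = 921)
echo run2Total            # human-readable form
echo run2Total.toHours()  # total duration in hours
```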

```text
Type: rtCalibration
total duration: 4 days, 11 hours, 25 minutes, 20 seconds, 453 milliseconds, 596 microseconds, and 104 nanoseconds
In hours: 107.4223482211111
active duration: 2.601388888888889
trackingDuration: 0 nanoseconds
In hours: 0.0
active tracking duration: 0.0
nonTrackingDuration: 4 days, 11 hours, 25 minutes, 20 seconds, 453 milliseconds, 596 microseconds, and 104 nanoseconds
In hours: 107.4223482211111
active background duration: 2.601391883888889
```

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 0 | 107.422 | 0 | 2.60139 | 107.422 | 2.60139 |

Run-3:

```sh
./writeRunList -b ~/CastData/data/DataRuns2018_Reco.h5 -c ~/CastData/data/CalibrationRuns2018_Reco.h5
```

```text
Type: rtBackground
total duration: 7 weeks, 23 hours, 13 minutes, 35 seconds, 698 milliseconds, 399 microseconds, and 775 nanoseconds
In hours: 1199.226582888611
active duration: 1079.598333333333
trackingDuration: 3 days, 2 hours, 17 minutes, and 53 seconds
In hours: 74.29805555555555
active tracking duration: 66.92306679361111
nonTrackingDuration: 6 weeks, 4 days, 20 hours, 55 minutes, 42 seconds, 698 milliseconds, 399 microseconds, and 775 nanoseconds
In hours: 1124.928527333056
active background duration: 1012.677445774444
```

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 74.2981 | 1124.93 | 66.9231 | 1012.68 | 1199.23 | 1079.6 |

```text
Type: rtCalibration
total duration: 3 days, 15 hours, 3 minutes, 47 seconds, 557 milliseconds, 131 microseconds, and 279 nanoseconds
In hours: 87.06321031416667
active duration: 3.525555555555556
trackingDuration: 0 nanoseconds
In hours: 0.0
active tracking duration: 0.0
nonTrackingDuration: 3 days, 15 hours, 3 minutes, 47 seconds, 557 milliseconds, 131 microseconds, and 279 nanoseconds
In hours: 87.06321031416667
active background duration: 3.525561761944445
```

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 0 | 87.0632 | 0 | 3.52556 | 87.0632 | 3.52556 |

2.2. Shutter settings

The data taken in 2017 uses Timepix shutter settings of 2 / 32 (very long / 32), which results in frames of length ~2.4 s.

From 2018 on this was reduced to 2 / 30 (very long / 30), which is closer to 2.2 s. The exact reason for the change is not clear to me in hindsight.
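The frame lengths above can be reproduced with the shutter time relation t = 256^n · 46 · x / 40 MHz. Here n = 2 is the "very long" range and x is the second setting. This relation and the 40 MHz clock are assumptions not taken from this file, but they do give the ~2.4 s quoted for 2 / 32:

```nim
import std/math

proc shutterTime(mode, n: int, fClock = 40e6): float =
  ## ASSUMED Timepix/TOS relation t = 256^mode * 46 * n / fClock [s],
  ## with mode = 2 for "very long" and a 40 MHz clock.
  float(256 ^ mode) * 46.0 * n.float / fClock

echo "2 / 32: ", shutterTime(2, 32), " s"  # 2017 settings
echo "2 / 30: ", shutterTime(2, 30), " s"  # 2018 settings
```

Under this assumption, 2 / 30 gives about 2.26 s, consistent with the "closer to 2.2 s" above.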

[ ] NOTE: Add the mean event duration per run (e.g. from ./../../CastData/ExternCode/TimepixAnalysis/Tools/outerChipActivity/outerChipActivity.nim) here as an illustration!

2.3. Data backups

Data is found in the following places:

- `/data` directory on tpc19
- on tpc00
- tpc06, the lab computer that was used for testing etc., contains data from development, sparking etc. Under `/data/tpc/data` it contains a huge amount of backed-up runs, including the whole sparking history. It's about 400 GB of data and should be fully backed up soon, otherwise we might lose it forever.
- my laptop & desktop at home contain most of the data

2.4. Detector documentation

The relevant IMPACT form, which contains the detector documentation, is https://impact.cern.ch/impact/secure/?place=editActivity:101629. A PDF version of this document also exists.

The uploaded version indeed matches the latest status of the document in ./Detector/CastDetectorDocumentation.html, including the funny notes, comments and TODOs. :)

2.5. Timeline of CAST data taking

- [-] add dates of each calibration
- [X] add Geometer measurements here
- [X] add time of scintillator calibration

- ref: https://espace.cern.ch/cast-share/elog/Lists/Posts/Post.aspx?ID=3420 and

- June/July detector brought to CERN

- before alignment of LLNL telescope by Jaime

- laser alignment

- vacuum leak tests & installation of detector

- after installation of lead shielding

- Geometer measurement of InGrid alignment for X-ray finger run

- first X-ray finger run (not useful for determining the position of the detector, because it was dismounted afterwards)

- afterwards: dismounted to make space for KWISP

- ref:

https://espace.cern.ch/cast-share/elog/Lists/Posts/Post.aspx?ID=3420

and

- Remount in September 2017

- installation

- Alignment with geometers for data taking, magnet warm and under vacuum.

- weekend: (ref: ./../Talks/CCM_2017_Sep/CCM_2017_Sep.html)

- calibration (but all wrong)

- water cooling stopped working

- next week: try fix water cooling

- quick couplings: rubber disintegrating causing cooling flow to go to zero

- attempt to clean via compressed air

- final cleaning : wrong tube, compressed detector…

- detector window exploded…

- show image of window and inside detector

- detector investigation in CAST CDL (see images & timestamps of images)

- study of contamination & end of Sep CCM

- detector back to Bonn, fixed

- weekend: (ref: ./../Talks/CCM_2017_Sep/CCM_2017_Sep.html)

- detector installation before first data taking

- reinstallation in October for the start of data taking on 30th Oct 2017

- remount start

- Alignment with Geometers (after removal & remounting due to window accident) for data taking. Magnet cold and under vacuum.

- calibration of scintillator veto paddle in RD51 lab

- remount installation finished incl. lead shielding (mail "InGrid status update" to Satan Forum)

- <data taking period in 2017>

- between runs 85 & 86: fix of the `src/waitconditions.cpp` TOS bug, which caused scintillator triggers to be written in all files up to the next FADC trigger

- run 101 was the first with FADC noise significant enough to make me change settings:

- Diff: 50 ns -> 20 ns (one to left)

- Coarse gain: 6x -> 10x (one to right)

- run 109: crazy amounts of noise on FADC

- run 111: stopped early. tried to debug noise and blew a fuse in gas interlock box by connecting NIM crate to wrong power cable

- run 112: change FADC settings again due to noise:

- integration: 50 ns -> 100 ns

- integration: 100 ns -> 50 ns again

- run 121: Jochen set the FADC main amplifier integration time from 50 ns -> 100 ns again

- <data taking period at the beginning of 2018>

- start of 2018 period: temperature sensor broken!

- issues with shifting THL values & weird detector behavior. Changed THL values temporarily as an attempted fix, but in the end this didn't help and the problem got worse (ref: gmail "Update 17/02" and ./../Mails/cast_power_supply_problem_thlshift/power_supply_problem.html). The cause was an issue with the power supply, leading to a severe drop in gain / increase in THL (unclear which; the number of hits in 55Fe events dropped massively and the background eventually only showed random active pixels). Fixed by replugging all power cables and improving the grounding situation. iirc this was later identified as an issue with the grounding between the water cooling system and the detector.

- eventually everything was fixed and the detector was running correctly again. 2 runs were missed because of this.

- removal of veto scintillator and lead shielding

- X-ray finger run 2. This run is actually useful for determining the position of the detector.

- Geometer measurement after warming up the magnet and not under vacuum. Serves as a reference for the difference with respect to vacuum & cold conditions!

- detector fully removed and taken back to Bonn

- installation started. Mounting was more complicated than intended due to the lead shielding support (see mails "ingrid installation" including Damien Bedat)

- shielding fixed and detector installed over the next couple of days

- Alignment with Geometers for data taking. Magnet warm and not under vacuum.

- data taking was supposed to start end of September, but delayed.

- detector had an issue with the power supply, which was finally fixed. The issue was a bad solder joint on the Phoenix connector on the intermediate board (see the chain of mails titled "Unser Detektor…" for more information). Detector behavior had been weird since the beginning of October, with weird behavior seen on the voltages of the detector. Initial worry: power supply dead, or the supercaps on it. Replaced the power supply (Phips brought one a few days later), but no change.

- data taking starts

- runs 297 and 298 showed lots of noise again, FADC disabled (went to CERN the next day)

- data taking ends

runs that were missed:

The last one was not a full run.

[ ] CHECK THE ELOG FOR WHAT THE LAST RUN WAS ABOUT

- detector mounted in CAST Detector Lab

- data taking period

- detector dismounted and taken back to Bonn

- ref: ./../outerRingNotes.html

- calibration measurements of outer chips with a 55Fe source using a custom anode & window

- calibrations of each outer chip using the Run 2 and Run 3 detector calibrations

- start of a new detector calibration

- another set of measurements with a new set of calibrations

2.6. Detector alignment at CAST

There were 3 different kinds of alignments:

- laser alignment. Done in July 2017 and on 27/04/2018 (for the latter see the mail of Theodoros, "alignment of LLNL telescope")

- images: the spot is the one on the vertical line from the center down! The others are just refractions; this was easier to see by eye. The right one is the alignment as it was after data taking in Apr 2018. The left is after a slight realignment by loosening the screws and moving a bit. Theodoros' explanation from the mail listed above:

Hello,

After some issues the geometres installed the aligned laser today. Originally Jaime and I saw the spot as seen at the right image. It was +1mm too high. We rechecked Sebastian’s images from the Xray fingers and confirmed that his data indicated a parallel movement of ~1.4 mm (detector towards airport). We then started wondering whether there are effects coming from the target itself or the tolerances in the holes of the screws. By unscrewing it a bit it was clear that one can easily reposition it with an uncertainty of almost +-1mm. For example in the left picture you can see the new position we put it in, in which the spot is almost perfectly aligned.

We believe that the source of these shifts is primarily the positioning of the detector/target on the plexiglass drum. As everything else seems to be aligned, we do not need to realign. On Monday we will lock the manipulator arms and recheck the spot. Jaime will change his tickets to leave earlier.

Thursday-Friday we can dismount the shielding support to send it for machining and the detector can go to Bonn.

With this +-1mm play in the screw holes in mind (and the possible delays from the cavities) we should seriously consider doing an X-ray finger run right after the installation of InGRID which may need to be shifted accordingly. I will try to adjust the schedule next week.

Please let me know if you have any further comments.

Cheers,

Theodoros

- geometer measurements. 4 measurements performed, with EDMS links (the links are fully public!):

- 11.07.2017 https://edms.cern.ch/document/1827959/1

- 14.09.2017 https://edms.cern.ch/document/2005606/1

- 26.10.2017 https://edms.cern.ch/document/2005690/1

- 23.07.2018 https://edms.cern.ch/document/2005895/1

For the geometer measurements in particular, search the gmail archive for Antje Behrens (Antje.Behrens@cern.ch) or "InGrid alignment". The reports can also be found here: ./CAST_Alignment/

- X-ray finger measurements, 2 runs:
  - [ ] 13.07.2017, run number 21. LINK DATA
  - [ ] 20.04.2018, run number 189, after the first part of data taking in 2018. LINK DATA

2.7. X-ray finger

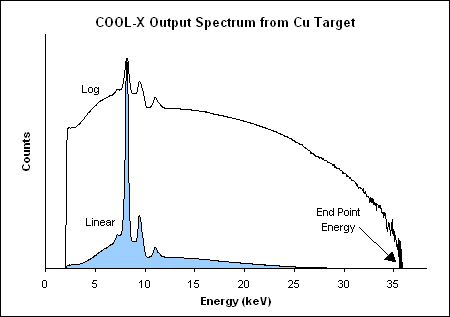

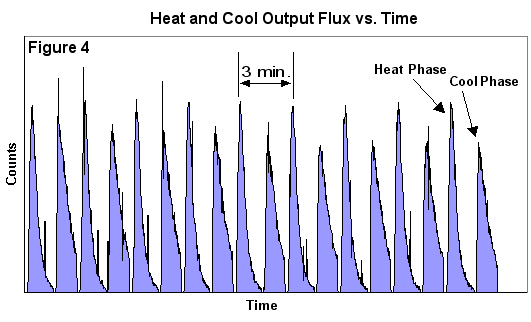

The X-ray finger used at CAST is an Amptek COOL-X:

https://www.amptek.com/internal-products/obsolete-products/cool-x-pyroelectric-x-ray-generator

The relevant plots for our purposes are shown in:

In addition the simple Monte Carlo simulation of the expected signal (written in Clojure) is found in: ./../Code/CAST/XrayFinderCalc/

2 X-ray finger runs:

- [ ] 13.07.2017, run number 21. LINK DATA
- [ ] 20.04.2018, run number 189, after the first part of data taking in 2018. LINK DATA

Important note: The detector was removed directly after the first of these X-ray measurements! As such, the measurement has no bearing on the real position the detector was in during the first data taking campaign.

The X-ray finger run is used both to determine a center position of the detector, as well as determine the rotation of the graphite spacer of the LLNL telescope, i.e. the rotation of the telescope.

- [X] Determine the rotation angle of the graphite spacer from the X-ray finger data. For X-ray finger run 189:

  -> It comes out to 14.17°!

  But for run 21 (the detector was dismounted in between, of course):

  -> Only 11.36°!

  That's a huge uncertainty (about 3°) given the detector was only dismounted!

NOTE: For more information, including simulations, see ./../journal.html for now, sec. [BROKEN LINK: sec:journal:2023_09_05_xray_finger].

2.7.1. Run 189

The below is copied from thesis.org.

I copied the X-ray finger runs from tpc19 over to ./../../CastData/data/XrayFingerRuns/. The run of interest is mainly the run 189, as it's the run done with the detector installed as in 2017/18 data taking.

```sh
cd /dev/shm # store here for fast access & temporary
cp ~/CastData/data/XrayFingerRuns/XrayFingerRun2018.tar.gz .
tar xzf XrayFingerRun2018.tar.gz
raw_data_manipulation -p Run_189_180420-09-53 --runType xray --out xray_raw_run189.h5
reconstruction -i xray_raw_run189.h5 --out xray_reco_run189.h5 # make sure `config.toml` for reconstruction uses `default` clustering!
reconstruction -i xray_reco_run189.h5 --only_charge
reconstruction -i xray_reco_run189.h5 --only_gas_gain
reconstruction -i xray_reco_run189.h5 --only_energy_from_e
plotData --h5file xray_reco_run189.h5 --runType=rtCalibration -b bGgPlot --ingrid --occupancy --config plotData.toml
```

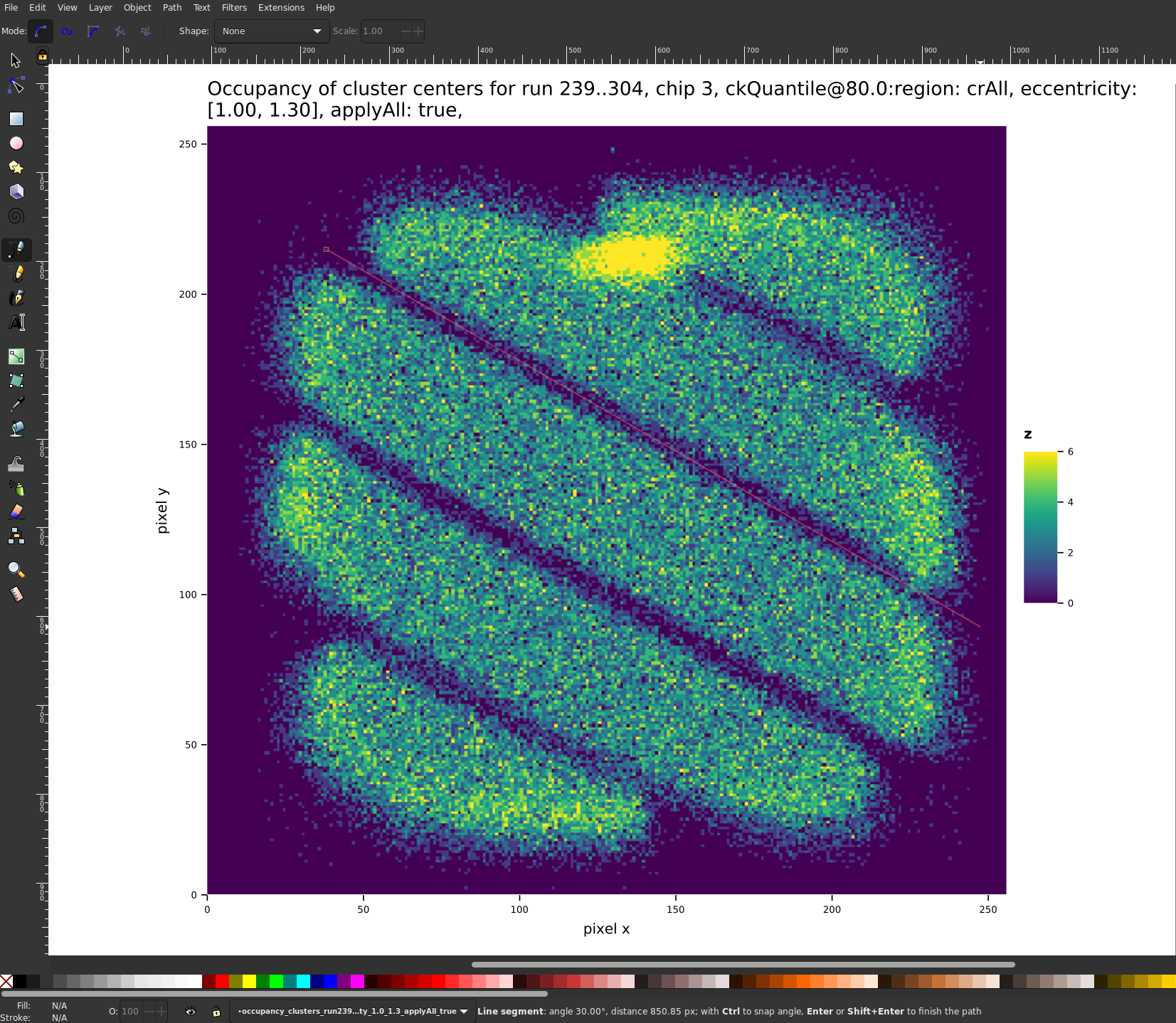

which gives us the following plot:

With many more plots here: ./../Figs/statusAndProgress/xrayFingerRun/run189/

One very important plot:

-> So the peak is at around 3 keV instead of about 8 keV, as the Amptek plot in the section above claims.

[ ] Maybe at CAST they changed the target?

2.8. Detector window

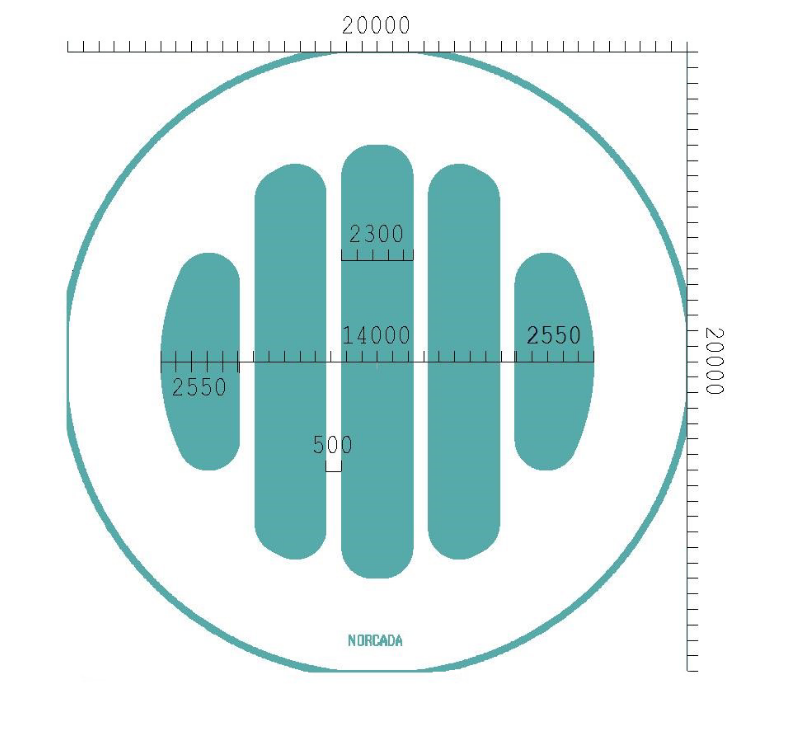

The window layout is shown in fig. 2.

The sizes are thus:

- Diameter: \(\SI{14}{\mm}\)

- 4 strongbacks of:

- width: \(\SI{0.5}{\mm}\)

- thickness: \(\SI{200}{\micro\meter}\)

- \(\SI{20}{\nm}\) Al coating

- they get wider towards the very outside

Let's compute the amount of occlusion by the strongbacks. Using code based on Johanna's raytracer:

```nim
## Super dumb MC sampling over the entrance window using Johanna's code from `raytracer2018.nim`
## to check the coverage of the strongback of the 2018 window
import ggplotnim, random, chroma

proc colorMe(y: float): bool =
  const
    stripDistWindow = 2.3  #mm
    stripWidthWindow = 0.5 #mm
  if abs(y) > stripDistWindow / 2.0 and
     abs(y) < stripDistWindow / 2.0 + stripWidthWindow or
     abs(y) > 1.5 * stripDistWindow + stripWidthWindow and
     abs(y) < 1.5 * stripDistWindow + 2.0 * stripWidthWindow:
    result = true
  else:
    result = false

proc sample() =
  randomize(423)
  const nmc = 100_000
  let black = color(0.0, 0.0, 0.0)
  var dataX = newSeqOfCap[float](nmc)
  var dataY = newSeqOfCap[float](nmc)
  var inside = newSeqOfCap[bool](nmc)
  for idx in 0 ..< nmc:
    let x = rand(-7.0 .. 7.0)
    let y = rand(-7.0 .. 7.0)
    if x*x + y*y < 7.0 * 7.0:
      dataX.add x
      dataY.add y
      inside.add colorMe(y)
  let df = toDf(dataX, dataY, inside)
  echo "A fraction of ", df.filter(f{`inside` == true}).len / df.len, " is occluded by the strongback"
  let dfGold = df.filter(f{abs(idx(`dataX`, float)) <= 2.25 and
                           abs(idx(`dataY`, float)) <= 2.25})
  echo "Gold region: A fraction of ", dfGold.filter(f{`inside` == true}).len / dfGold.len, " is occluded by the strongback"
  ggplot(df, aes("dataX", "dataY", fill = "inside")) +
    geom_point() +
    # draw the gold region as a black rectangle
    geom_linerange(aes = aes(y = 0, x = 2.25, yMin = -2.25, yMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(y = 0, x = -2.25, yMin = -2.25, yMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(x = 0, y = 2.25, xMin = -2.25, xMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(x = 0, y = -2.25, xMin = -2.25, xMax = 2.25), color = some(black)) +
    xlab("x [mm]") +
    ylab("y [mm]") +
    ggsave("/home/basti/org/Figs/statusAndProgress/detector/SiN_window_occlusion.png", width = 1150, height = 1000)

sample()
```

```text
A fraction of 0.16170429252782195 is occluded by the strongback
Gold region: A fraction of 0.2215316951907448 is occluded by the strongback
```

The exact value in the gold region should be 22.2 %, based on two \SI{0.5}{\mm} strongbacks within a square with \SI{4.5}{\mm} long sides.

This is summarized in tab. 1 and shown as a figure in fig. 3.

| Region | Occlusion / % |
|---|---|
| Full | 16.2 |
| Gold | 22.2 |
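As a cross-check of the MC numbers, the occluded fractions can also be computed analytically. This minimal sketch assumes the strongbacks are exactly the straight bands defined by `colorMe` above and ignores that they widen towards the outside. Each band's area inside the circle follows from integrating the chord length 2√(r² − y²):

```nim
import std/math

const
  windowRadius = 7.0  # mm, 14 mm diameter
  stripDist = 2.3     # mm, `stripDistWindow` of the MC code
  stripWidth = 0.5    # mm, `stripWidthWindow` of the MC code

proc chordPrimitive(y, r: float): float =
  ## Antiderivative of the chord length 2*sqrt(r² - y²) of a circle of radius r.
  y * sqrt(r*r - y*y) + r*r * arcsin(y / r)

proc bandArea(a, b, r: float): float =
  ## Area of the circle between the lines y = a and y = b (0 <= a < b <= r).
  chordPrimitive(b, r) - chordPrimitive(a, r)

proc fullOcclusion(): float =
  let inner = stripDist / 2.0               # inner band starts at 1.15 mm
  let outer = 1.5 * stripDist + stripWidth  # outer band starts at 3.95 mm
  # each band exists at +y and -y, hence the factor 2
  let occluded = 2.0 * (bandArea(inner, inner + stripWidth, windowRadius) +
                        bandArea(outer, outer + stripWidth, windowRadius))
  occluded / (PI * windowRadius * windowRadius)

proc goldOcclusion(halfSide = 2.25): float =
  ## Only the inner pair of bands lies in the gold square, each over its full width.
  2.0 * stripWidth / (2.0 * halfSide)

echo "full window: ", fullOcclusion()
echo "gold region: ", goldOcclusion()
```

It gives about 16.2 % for the full window, consistent with the MC result, and exactly 2/9 ≈ 22.2 % for the gold region.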

The X-ray absorption properties were obtained using the online calculator from here: https://henke.lbl.gov/optical_constants/

The relevant resource files are found in:

- 200μm Si strongback: ./../resources/Si_density_2.33_thickness_200microns.txt

- 300nm SiN: ./../resources/Si3N4_density_3.44_thickness_0.3microns.txt

- 20nm Al: ./../resources/Al_20nm_transmission_10keV.txt

- 3cm Ar: ./../resources/transmission-argon-30mm-1050mbar-295K.dat

Let's create a plot of:

- window transmission

- gas absorption

- convolution of both

```nim
import ggplotnim
let al = readCsv("/home/basti/org/resources/Al_20nm_transmission_10keV.txt", sep = ' ', header = "#")
let siN = readCsv("/home/basti/org/resources/Si3N4_density_3.44_thickness_0.3microns.txt", sep = ' ')
let si = readCsv("/home/basti/org/resources/Si_density_2.33_thickness_200microns.txt", sep = ' ')
let argon = readCsv("/home/basti/org/resources/transmission-argon-30mm-1050mbar-295K.dat", sep = ' ')
var df = newDataFrame()
df["300nm SiN"] = siN["Transmission", float]
df["200μm Si"] = si["Transmission", float]
df["30mm Ar"] = argon["Transmission", float][0 .. argon.high - 1]
df["20nm Al"] = al["Transmission", float]
df["Energy [eV]"] = siN["PhotonEnergy(eV)", float]
df = df.mutate(f{"Energy [keV]" ~ idx("Energy [eV]") / 1000.0},
               f{"30mm Ar Abs." ~ 1.0 - idx("30mm Ar")},
               f{"Efficiency" ~ idx("30mm Ar Abs.") * idx("300nm SiN") * idx("20nm Al")},
               f{"Eff • SB • ε" ~ `Efficiency` * 0.78 * 0.8}) # strongback occlusion of 22% and ε = 80%
  .drop(["Energy [eV]", "30mm Ar"]) # the raw Ar transmission is not plotted, only its absorption
  .gather(["300nm SiN", "Efficiency", "Eff • SB • ε", "30mm Ar Abs.", "200μm Si", "20nm Al"], key = "Type", value = "Efficiency")
echo df
ggplot(df, aes("Energy [keV]", "Efficiency", color = "Type")) +
  geom_line() +
  ggtitle("Detector efficiency of combination of 300nm SiN window and 30mm of Argon absorption, including ε = 80% and strongback occlusion of 22%") +
  margin(top = 1.5) +
  ggsave("/home/basti/org/Figs/statusAndProgress/detector/window_plus_argon_efficiency.pdf", width = 800, height = 600)
```

Fig. 4 shows the combined efficiency of the SiN window, the \SI{20}{\nm} Al coating and the absorption in \SI{30}{\mm} of argon. It additionally includes the software efficiency (ε = 80%) and the strongback occlusion (22% in the gold region).
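Per energy, the `Efficiency` and `Eff • SB • ε` columns of the code above are plain products of the individual factors. Below is the same product for a single energy as a small sketch. The transmission values in the example call are placeholders, not values read from the resource files:

```nim
proc combinedEfficiency(tSiN, tAl, tAr: float,
                        strongbackOcclusion = 0.22,
                        softwareEff = 0.8): float =
  ## tSiN, tAl: transmission of the 300 nm SiN window and the 20 nm Al coating,
  ## tAr: transmission of 30 mm of argon. Only photons absorbed in the gas
  ## (probability 1 - tAr) can produce a signal.
  (1.0 - tAr) * tSiN * tAl * (1.0 - strongbackOcclusion) * softwareEff

# placeholder transmissions, NOT read from the Henke files
echo combinedEfficiency(tSiN = 0.9, tAl = 0.98, tAr = 0.3)
```

The factor `0.78 * 0.8` in the plotting code corresponds to the defaults `1 - strongbackOcclusion` and `softwareEff`.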

The following code exists to plot the window transmissions for the window material in combination with the axion flux in:

It produces the combined plot as shown in fig. 5.

2.8.1. Window layout with correct window rotation

```nim
## Super dumb MC sampling over the entrance window using Johanna's code from `raytracer2018.nim`
## to check the coverage of the strongback of the 2018 window
import ggplotnim, chroma, unchained

proc hitsStrongback(y: float): bool =
  const
    stripDistWindow = 2.3  #mm
    stripWidthWindow = 0.5 #mm
  if abs(y) > stripDistWindow / 2.0 and
     abs(y) < stripDistWindow / 2.0 + stripWidthWindow or
     abs(y) > 1.5 * stripDistWindow + stripWidthWindow and
     abs(y) < 1.5 * stripDistWindow + 2.0 * stripWidthWindow:
    result = true
  else:
    result = false

proc sample() =
  let black = color(0.0, 0.0, 0.0)
  let nPoints = 256
  var xs = linspace(-7.0, 7.0, nPoints)
  var dataX = newSeqOfCap[float](nPoints^2)
  var dataY = newSeqOfCap[float](nPoints^2)
  var inside = newSeqOfCap[bool](nPoints^2)
  for x in xs:
    for y in xs:
      if x*x + y*y < 7.0 * 7.0:
        when false:
          dataX.add x * cos(30.°.to(Radian)) + y * sin(30.°.to(Radian))
          dataY.add y * cos(30.°.to(Radian)) - x * sin(30.°.to(Radian))
          inside.add hitsStrongback(y)
        else:
          dataX.add x
          dataY.add y
          # rotate current y back, such that we can analyze in a "non rotated" coord. syst
          let yRot = y * cos(-30.°.to(Radian)) - x * sin(-30.°.to(Radian))
          inside.add hitsStrongback(yRot)
  let df = toDf(dataX, dataY, inside)
  ggplot(df, aes("dataX", "dataY", fill = "inside")) +
    geom_point() +
    # draw the gold region as a black rectangle
    geom_linerange(aes = aes(y = 0, x = 2.25, yMin = -2.25, yMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(y = 0, x = -2.25, yMin = -2.25, yMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(x = 0, y = 2.25, xMin = -2.25, xMax = 2.25), color = some(black)) +
    geom_linerange(aes = aes(x = 0, y = -2.25, xMin = -2.25, xMax = 2.25), color = some(black)) +
    xlab("x [mm]") +
    ylab("y [mm]") +
    xlim(-7, 7) +
    ylim(-7, 7) +
    ggsave("/home/basti/org/Figs/statusAndProgress/detector/SiN_window_occlusion_rotated.png", width = 1150, height = 1000)

sample()
```

Which gives us:

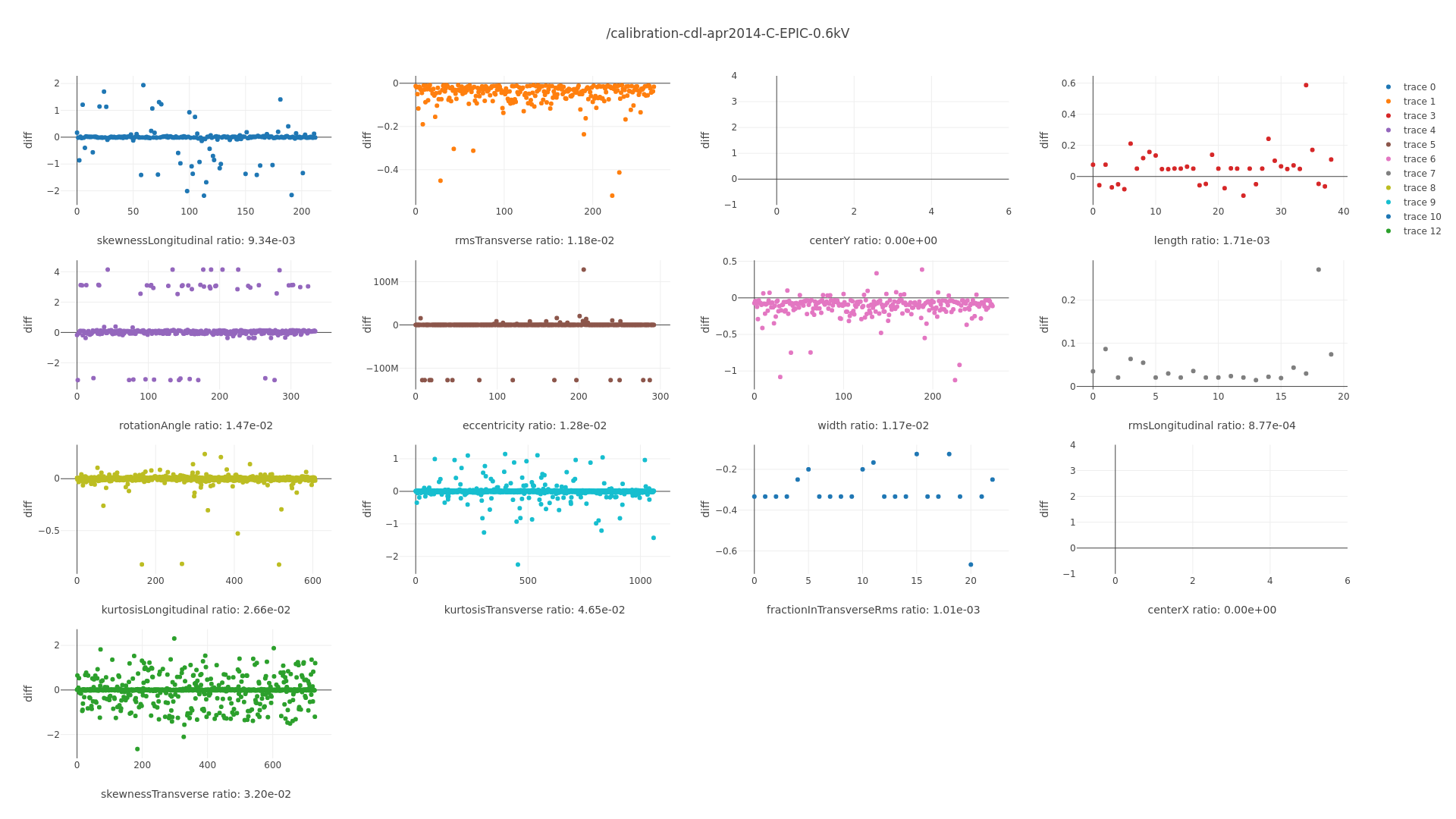

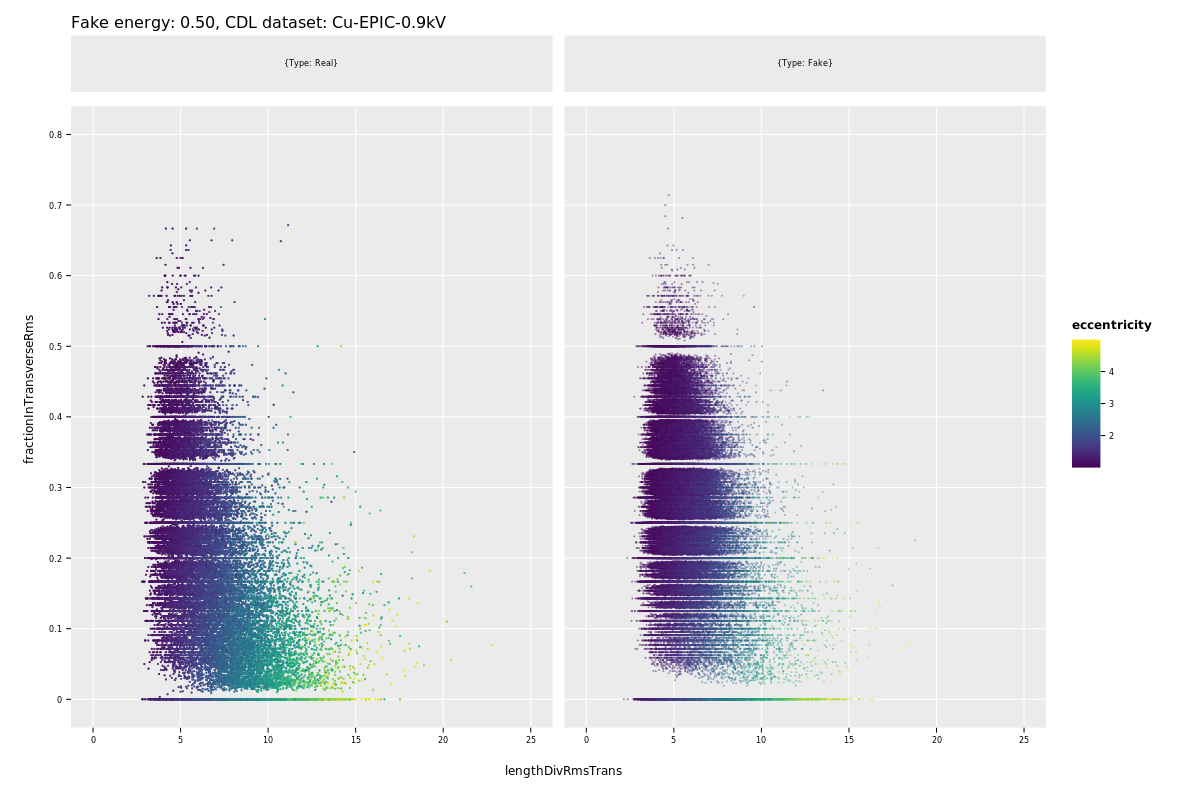
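To put a number on the rotated layout, the gold region occlusion can be estimated on a regular grid. The grid uses the same band definition and the same back-rotation of y as the code above. Below is a stdlib-only sketch; it uses a midpoint grid instead of the plotted `linspace` points and again treats the strongbacks as straight bands:

```nim
import std/math

proc hitsStrongback(y: float): bool =
  ## Same straight-band definition as `hitsStrongback` above.
  const
    stripDistWindow = 2.3   # mm
    stripWidthWindow = 0.5  # mm
  let ay = abs(y)
  (ay > stripDistWindow / 2.0 and ay < stripDistWindow / 2.0 + stripWidthWindow) or
    (ay > 1.5 * stripDistWindow + stripWidthWindow and
     ay < 1.5 * stripDistWindow + 2.0 * stripWidthWindow)

proc goldOcclusion(angleDeg: float, halfSide = 2.25, n = 1000): float =
  ## Fraction of an n x n midpoint grid over the gold square that lies on a
  ## strongback when the window is rotated by `angleDeg`.
  let phi = degToRad(-angleDeg)
  let (c, s) = (cos(phi), sin(phi))
  let step = 2.0 * halfSide / n.float
  var hits = 0
  for i in 0 ..< n:
    let x = -halfSide + (i.float + 0.5) * step
    for j in 0 ..< n:
      let y = -halfSide + (j.float + 0.5) * step
      # rotate y back into the window frame, as in the code above
      if hitsStrongback(y * c - x * s):
        inc hits
  hits.float / float(n * n)

echo "gold region, unrotated: ", goldOcclusion(0.0)
echo "gold region, rotated by 30°: ", goldOcclusion(30.0)
```

With `angleDeg = 0` it reproduces the 2/9 ≈ 22.2 % of the unrotated case. The rotated value is only as good as the straight-band approximation.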

2.9. General event & outer chip information

Running ./../../CastData/ExternCode/TimepixAnalysis/Tools/outerChipActivity/outerChipActivity.nim we can extract information about the total number of events and the activity on the center chip vs. the outer chips.

For both the 2017/18 data (run 2) and the end of 2018 data (run 3) we will now look at:

- number of total events

- number of events with any activity (> 3 hits)

- number of events with activity only on center chip

- number of events with activity on center and outer chips (but not only center)

- number of events with activity only on outer chips
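The categories above can be sketched as a classification of a single event, given the number of hits per chip. This is hypothetical illustration code, not the implementation in `outerChipActivity.nim`. The index of the center chip (3) and the per-chip reading of the "> 3 hits" criterion are assumptions:

```nim
type
  EventClass = enum
    ecNoActivity, ecOnlyCenter, ecCenterAndOuter, ecOnlyOuter

const
  centerChip = 3  # ASSUMED index of the central chip among the 7 chips
  minHits = 3     # a chip counts as active with more than 3 hits

proc classify(hitsPerChip: openArray[int]): EventClass =
  ## Sort one event into the categories listed above (hypothetical helper).
  var centerActive, outerActive = false
  for chip, hits in hitsPerChip:
    if hits > minHits:
      if chip == centerChip: centerActive = true
      else: outerActive = true
  if centerActive and outerActive: ecCenterAndOuter
  elif centerActive: ecOnlyCenter
  elif outerActive: ecOnlyOuter
  else: ecNoActivity

echo classify([0, 0, 0, 120, 0, 0, 0])   # input: activity only on the center chip
echo classify([15, 0, 0, 120, 0, 0, 0])  # input: center and one outer chip active
```

Counting the returned classes over all events, e.g. with a `CountTable` from `std/tables`, gives numbers of the kind listed below.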

UPDATE: The reason for the two peaks in the Run 2 data of the event duration histogram is that we accidentally used run settings 2/32 in 2017 and 2/30 in 2018! (This does not explain the 0 time events of course)

2.9.1. 2017/18 (Run 2)

```text
Number of total events: 3758960
Number of events without center: 1557934 | 41.44587864728542%
Number of events only center: 23820 | 0.633685913124907%
Number of events with center activity and outer: 984319 | 26.185939728009878%
Number of events any hit events: 2542253 | 67.6318183752953%
Mean of event durations: 2.144074329358038
```

Interestingly, the histogram of event durations looks as follows, fig. 6.

We can cut to the range between 0 and 2.2 s, fig. 7.

The peak at 0 is plainly and simply a peak of events with a duration of exactly 0 (the previous figure only removed exact 0 values).

What does the energy distribution look like for these events? Fig. 8.

And the same split up per run (to make sure it's not one bad run), fig. 9.

Hmm. I suppose it's a firmware bug that the event duration is not returned correctly? This could happen if the FADC triggers and for some reason 0 clock cycles are returned. It could be connected to the weird "hiccups" the readout sometimes has (when the FADC doesn't actually trigger for a full event). Maybe these are the events right after?

- Noisy pixels

In this run there are a few noisy pixels that need to be removed before background rates are calculated. These are listed in tab. 2.

Table 2: Number of counts that noisy pixels in the 2017/18 dataset contribute to the number of remaining background clusters. The total number of noise clusters amounts to 1265 in this case (potentially depending on the clustering algorithm). These must be removed for a sane background level, and their area must be removed from the size of the active area in this dataset. NOTE: When using these numbers, make sure the x and y coordinates are not accidentally inverted.

| x | y | Count after logL |
|---|---|---|
| 64 | 109 | 7 |
| 64 | 110 | 9 |
| 65 | 108 | 30 |
| 66 | 108 | 50 |
| 67 | 108 | 33 |
| 65 | 109 | 74 |
| 66 | 109 | 262 |
| 67 | 109 | 136 |
| 68 | 109 | 29 |
| 65 | 110 | 90 |
| 66 | 110 | 280 |
| 67 | 110 | 139 |
| 65 | 111 | 24 |
| 66 | 111 | 60 |
| 67 | 111 | 34 |
| 67 | 112 | 8 |
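The following sketch keeps tab. 2 as data and checks that the counts add up to the 1265 noise clusters quoted in the caption. It also computes the area to remove from the active area. The helper names are hypothetical, and the 55 µm Timepix pixel pitch comes from general knowledge, not from this file:

```nim
const
  pixelPitch = 0.055  # mm, Timepix pixel pitch (55 µm)
  # (x, y, count after logL) from tab. 2; mind the x/y order!
  noisyPixels: array[16, tuple[x, y, count: int]] = [
    (64, 109, 7), (64, 110, 9), (65, 108, 30), (66, 108, 50),
    (67, 108, 33), (65, 109, 74), (66, 109, 262), (67, 109, 136),
    (68, 109, 29), (65, 110, 90), (66, 110, 280), (67, 110, 139),
    (65, 111, 24), (66, 111, 60), (67, 111, 34), (67, 112, 8)]

proc totalNoiseCounts(): int =
  for p in noisyPixels: result += p.count

proc isNoisy(x, y: int): bool =
  ## hypothetical helper: true if (x, y) is one of the pixels of tab. 2
  for p in noisyPixels:
    if p.x == x and p.y == y: return true

proc maskedArea(): float =
  ## area [mm²] to subtract from the active area when masking these pixels
  noisyPixels.len.float * pixelPitch * pixelPitch

echo "noise clusters: ", totalNoiseCounts()
echo "masked area: ", maskedArea(), " mm²"
```

Checking `isNoisy(66, 110)` against `isNoisy(110, 66)` is a quick way to catch an accidental x/y swap.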

2.9.2. End of 2018 (Run 3)

NOTE: In Run 3 we only used 2/30 as run settings! Hence a single peak in event duration.

And the same plots and numbers for 2018.

```text
Number of total events: 1837330
Number of events without center: 741199 | 40.34109278137297%
Number of events only center: 9462 | 0.514986420512374%
Number of events with center activity and outer: 470188 | 25.590830172043127%
Number of events any hit events: 1211387 | 65.9319229534161%
Mean of event durations: 2.1157526632342307
```

2.10. CAST maximum angle from the sun

A question that came up today: what is the maximum difference in grazing angle that we could see on the LLNL telescope behind CAST for an axion coming from the Sun?

The Sun has an apparent size of ~32 arcminutes https://en.wikipedia.org/wiki/Sun.

If the dominant axion emission comes from the inner 10% of the radius, that's still 3 arcminutes, which is \(\SI{0.05}{°}\).

The first question is whether the magnet bore appears larger or smaller than this size from one end to the other:

```nim
import unchained, math
const L = 9.26.m  # Magnet length
const d = 4.3.cm  # Magnet bore
echo "Maximum angle visible through bore = ", arctan(d / L).Radian.to(°)
```

so \SI{0.266}{°}, which is larger than the apparent size of the solar core.

That means the maximum angle we can see at a specific point on the telescope is up to the apparent size of the core, namely \(\SI{0.05}{°}\).
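The same two numbers can be computed with only `std/math`, converting units by hand instead of via unchained. This serves as a quick cross-check of the arithmetic above:

```nim
import std/math

const
  magnetLength = 9.26       # m
  boreDiameter = 0.043      # m (4.3 cm)
  sunDiameterArcmin = 32.0  # apparent size of the Sun
  coreFraction = 0.1        # inner 10 % of the solar radius

let boreAngle = radToDeg(arctan(boreDiameter / magnetLength))  # degrees
let coreAngle = sunDiameterArcmin * coreFraction / 60.0        # degrees
echo "bore: ", boreAngle, "°, solar core: ", coreAngle, "°"
```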

2.11. LLNL telescope

IMPORTANT: The multilayer coatings of the LLNL telescope are carbon at the top and platinum at the bottom, despite "Pt/C" being used to refer to them. See fig. 4.11 in the PhD thesis.

UPDATE: I randomly stumbled on a PhD thesis about the NuSTAR telescope! It validates some things I have been wondering about. See sec. 2.11.2.

UPDATE: Jaime sent me two text files today:

- ./../resources/LLNL_telescope/cast20l4_f1500mm_asDesigned.txt

- ./../resources/LLNL_telescope/cast20l4_f1500mm_asBuilt.txt

both of which are quite different from the numbers in Anders Jakobsen's thesis! These do reproduce a focal length of \(\SI{1500}{mm}\) instead of \(\SI{1530}{mm}\) when calculating it using the Wolter equation (when not using \(R_3\), but rather the virtual reflection point!).
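For reference, a minimal sketch of the Wolter relation behind such a focal length check, assuming the usual small-angle Wolter-I geometry in which an on-axis ray is deflected by 4α in total, so that tan(4α) = r / f. Which radius r to use (R3 or the virtual reflection point) is exactly the subtlety noted above. The inputs below are taken from JCAP table 1 (innermost mid-point radius paired with the smallest graze angle, a pairing I assume) and only illustrate the formula; they are not the values from Jaime's files.

```python
import math


def wolter_focal_length(r_mm: float, alpha_deg: float) -> float:
    """Approximate Wolter-I focal length.

    A ray parallel to the axis at radius r_mm is deflected by 4 * alpha in
    total, so it crosses the optical axis at f = r / tan(4 * alpha).
    """
    return r_mm / math.tan(math.radians(4.0 * alpha_deg))


# Assumed pairing from JCAP table 1: rho_mid = 62.07 mm with alpha = 0.592 deg.
f_inner = wolter_focal_length(62.07, 0.592)
print(f"f ≈ {f_inner:.0f} mm")
```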

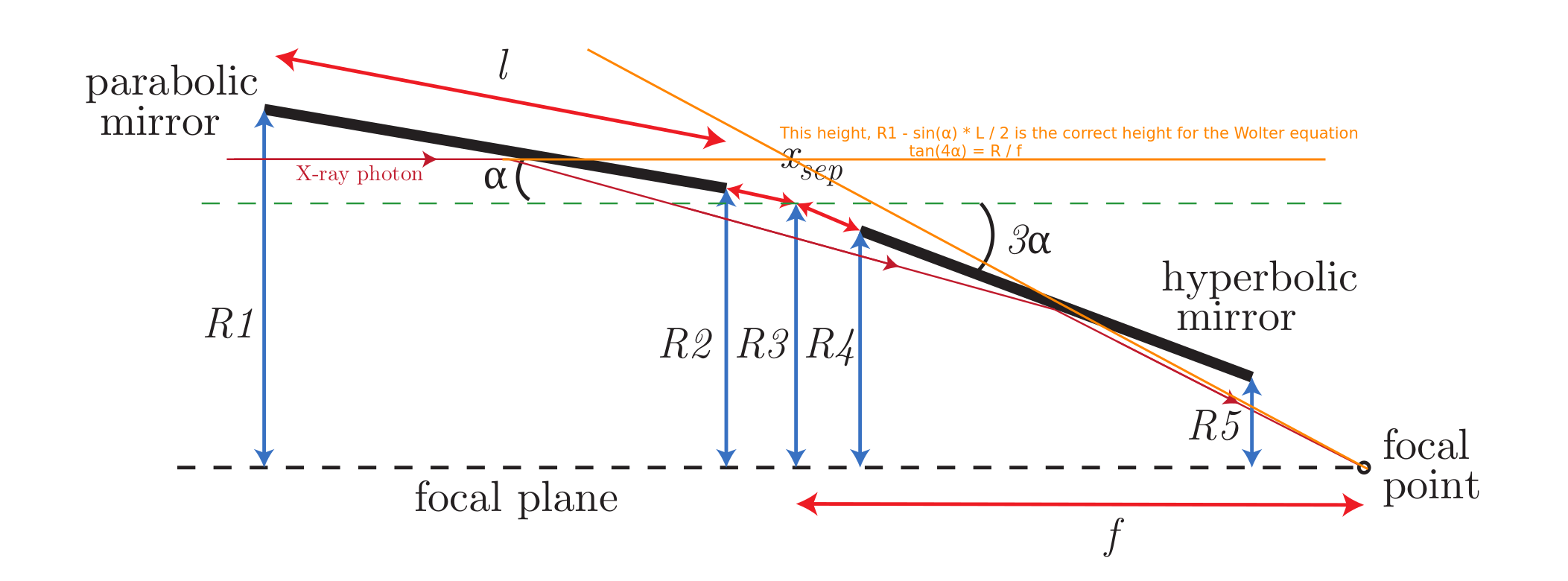

This section covers details about the telescope design, i.e. the mirror angles, radii and all that stuff as well as information about it from external sources (e.g. the raytracing results from LLNL about it). For more information about our raytracing results, see sec. 11.

Further, for more information about the telescope see ./LLNL_def_REST_format/llnl_def_rest_format.html.

Some of the most important information is repeated here.

The information for the LLNL telescope can best be found in the PhD thesis of Anders Clemen Jakobsen from DTU in Denmark: https://backend.orbit.dtu.dk/ws/portalfiles/portal/122353510/phdthesis_for_DTU_orbit.pdf

In particular, see page 58 (59 in the PDF) for the following table. UPDATE: The numbers in this table are wrong. See the update at the top of this section.

| Layer | Area [mm²] | Relative area [%] | Cumulative area [mm²] | α [°] | α [mrad] | R1 [mm] | R5 [mm] |
|---|---|---|---|---|---|---|---|
| 1 | 13.863 | 0.9546 | 13.863 | 0.579 | 10.113 | 63.006 | 53.821 |
| 2 | 48.175 | 3.3173 | 62.038 | 0.603 | 10.530 | 65.606 | 56.043 |
| 3 | 69.270 | 4.7700 | 131.308 | 0.628 | 10.962 | 68.305 | 58.348 |
| 4 | 86.760 | 5.9743 | 218.068 | 0.654 | 11.411 | 71.105 | 60.741 |
| 5 | 102.266 | 7.0421 | 320.334 | 0.680 | 11.877 | 74.011 | 63.223 |
| 6 | 116.172 | 7.9997 | 436.506 | 0.708 | 12.360 | 77.027 | 65.800 |
| 7 | 128.419 | 8.8430 | 564.925 | 0.737 | 12.861 | 80.157 | 68.474 |
| 8 | 138.664 | 9.5485 | 703.589 | 0.767 | 13.382 | 83.405 | 71.249 |
| 9 | 146.281 | 10.073 | 849.87 | 0.798 | 13.921 | 86.775 | 74.129 |
| 10 | 150.267 | 10.347 | 1000.137 | 0.830 | 14.481 | 90.272 | 77.117 |
| 11 | 149.002 | 10.260 | 1149.139 | 0.863 | 15.062 | 93.902 | 80.218 |
| 12 | 139.621 | 9.6144 | 1288.76 | 0.898 | 15.665 | 97.668 | 83.436 |
| 13 | 115.793 | 7.973 | 1404.553 | 0.933 | 16.290 | 101.576 | 86.776 |
| 14 | 47.648 | 3.2810 | 1452.201 | 0.970 | 16.938 | 105.632 | 90.241 |
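The "Relative area" and "Cumulative area" columns follow directly from the per-layer areas. A short Python sketch reproducing them as a quick internal consistency check of the table (it says nothing about whether the underlying areas are correct, see the update above):

```python
from itertools import accumulate

# Per-layer areas [mm²] copied from the table above (layers 1 to 14).
LAYER_AREAS_MM2 = [13.863, 48.175, 69.270, 86.760, 102.266, 116.172, 128.419,
                   138.664, 146.281, 150.267, 149.002, 139.621, 115.793, 47.648]


def derived_columns(areas):
    """Return (cumulative areas [mm²], relative areas [%])."""
    total = sum(areas)
    cumulative = list(accumulate(areas))           # running sum per layer
    relative = [100.0 * a / total for a in areas]  # share of the total area
    return cumulative, relative


cumulative, relative = derived_columns(LAYER_AREAS_MM2)
for layer, (cum, rel) in enumerate(zip(cumulative, relative), start=1):
    print(f"{layer:2d}  {cum:9.3f}  {rel:7.4f}")
```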

Further information can be found in the JCAP paper about the LLNL telescope for CAST: https://iopscience.iop.org/article/10.1088/1475-7516/2015/12/008/meta

in particular table 1 (extracted with caption):

| Property | Value |
|---|---|
| Mirror substrates | glass, Schott D263 |
| Substrate thickness | 0.21 mm |
| L, length of upper and lower mirrors | 225 mm |
| Overall telescope length | 454 mm |
| f, focal length | 1500 mm |
| Layers | 13 |
| Total number of individual mirrors in optic | 26 |
| ρmax, range of maximum radii | 63.24–102.4 mm |
| ρmid, range of mid-point radii | 62.07–100.5 mm |
| ρmin, range of minimum radii | 53.85–87.18 mm |
| α, range of graze angles | 0.592–0.968 degrees |
| Azimuthal extent | Approximately 30 degrees |

2.11.1. Information (raytracing, effective area etc) from CAST Nature paper

Jaime finally sent the information about the raytracing results from the LLNL telescope to Cristina. She shared it with me: https://unizares-my.sharepoint.com/personal/cmargalejo_unizar_es/_layouts/15/onedrive.aspx?ga=1&id=%2Fpersonal%2Fcmargalejo%5Funizar%5Fes%2FDocuments%2FDoctorado%20UNIZAR%2FCAST%20official%2FLimit%20calculation%2FJaime%27s%20data

I downloaded and extracted the files to here: ./../resources/llnl_cast_nature_jaime_data/

Things to note:

- the CAST2016Dec* directories contain .fits files for the axion image for different energies; the same directories also contain text files for the effective area!
- the ./../resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/ directory contains the axion images actually used for the limit (I presume) in the form of .txt files. That directory also contains a "final"(?) effective area file! UPDATE: In the meeting with Jaime and Julia, Jaime mentioned that this is the final effective area they calculated and that we should use it! Excerpt from that file:

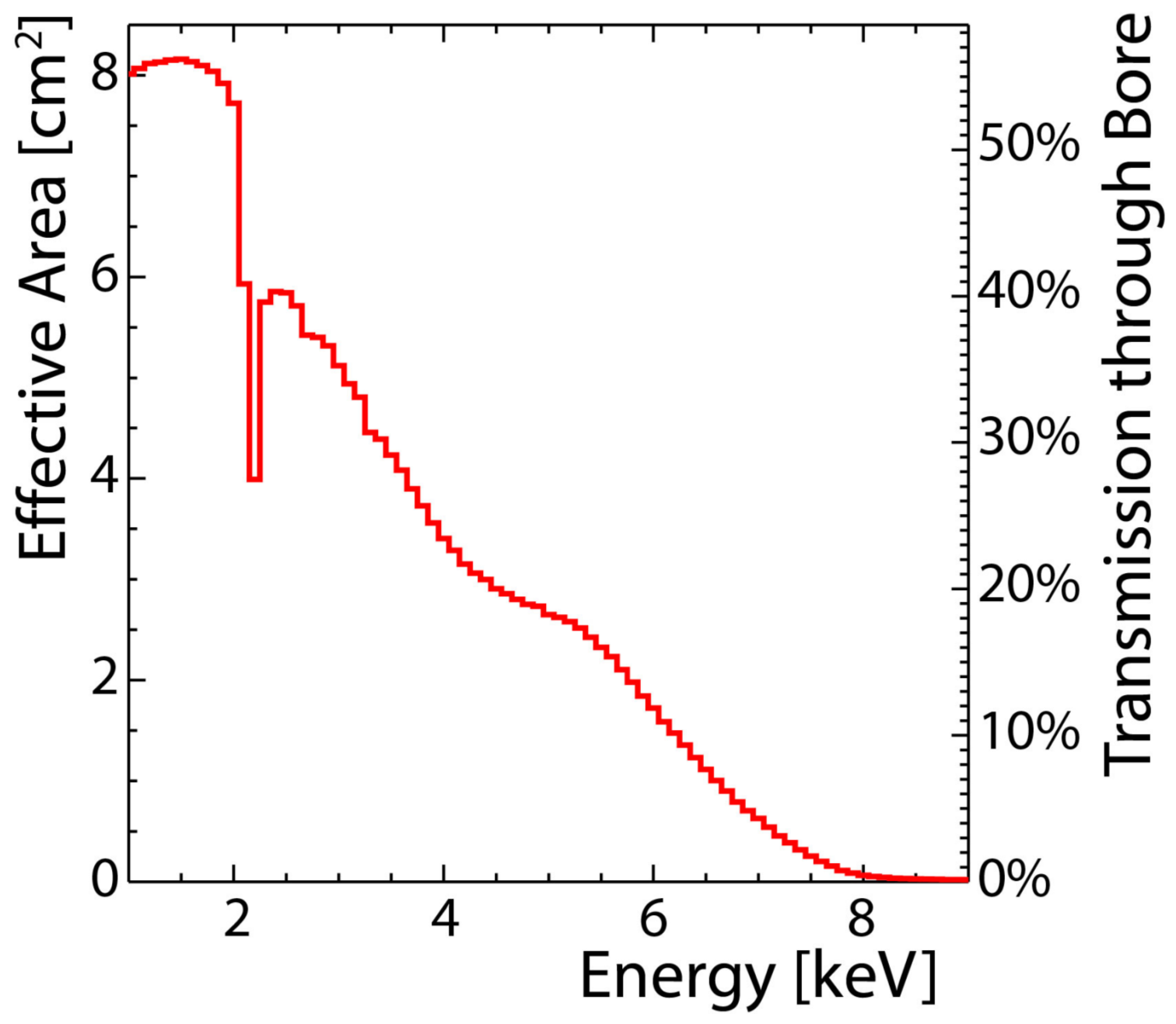

| E(keV) | Area(cm^2) | Area_lower_limit(cm^2) | Area_higher_limit(cm^2) |
|---|---|---|---|
| 0.000000 | 9.40788 | 8.93055 | 9.87147 |
| 0.100000 | 2.51070 | 1.76999 | 3.56970 |
| 0.200000 | 5.96852 | 5.06843 | 6.93198 |
| 0.300000 | 4.05163 | 3.55871 | 4.60069 |
| 0.400000 | 5.28723 | 4.70362 | 5.92018 |
| 0.500000 | 6.05037 | 5.50801 | 6.63493 |
| 0.600000 | 5.98980 | 5.44433 | 6.56380 |
| 0.700000 | 6.33760 | 5.81250 | 6.86565 |
| 0.800000 | 6.45533 | 5.97988 | 6.94818 |
| 0.900000 | 6.68399 | 6.22210 | 7.15994 |
| 1.00000 | 6.87400 | 6.42313 | 7.32568 |
| 1.10000 | 7.01362 | 6.57078 | 7.44991 |
| 1.20000 | 7.11297 | 6.68403 | 7.53477 |
| 1.30000 | 7.18784 | 6.76026 | 7.60188 |
| 1.40000 | 7.23464 | 6.82698 | 7.65152 |
| 1.50000 | 7.26598 | 6.85565 | 7.66851 |
| 1.60000 | 7.28027 | 6.86977 | 7.67453 |
| 1.70000 | 7.26311 | 6.86645 | 7.66171 |
| 1.80000 | 7.22509 | 6.83192 | 7.61740 |
| 1.90000 | 7.14513 | 6.76611 | 7.52503 |
| 2.00000 | 6.96418 | 6.58820 | 7.32984 |
| 2.10000 | 5.28441 | 5.00942 | 5.55890 |
| 2.20000 | 3.64293 | 3.45370 | 3.82893 |
| 2.30000 | 5.17823 | 4.90664 | 5.44582 |
| 2.40000 | 5.29972 | 5.02560 | 5.57611 |
| 2.50000 | 5.29166 | 5.02555 | 5.57095 |
| 2.60000 | 5.17942 | 4.91425 | 5.43329 |
| 2.70000 | 4.92675 | 4.67978 | 5.18098 |
| 2.80000 | 4.92422 | 4.66858 | 5.17432 |
| 2.90000 | 4.83265 | 4.58795 | 5.08459 |
| 3.00000 | 4.64834 | 4.41387 | 4.89098 |

i.e. it peaks at ~7.3 cm² (at 1.6 keV, ignoring the 0 keV entry).
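A small Python sketch for locating the peak of such an effective area file. It parses a whitespace-separated excerpt with the same header as EffectiveArea.txt (only a subset of the rows above is embedded to keep it self-contained) and, by default, excludes the E = 0 keV row, whose value of 9.41 cm² is larger than the ~7.3 cm² peak quoted above. The function names are hypothetical.

```python
# Subset of the rows of EffectiveArea.txt shown above (same header).
EXCERPT = """E(keV) Area(cm^2) Area_lower_limit(cm^2) Area_higher_limit(cm^2)
0.000000 9.40788 8.93055 9.87147
0.100000 2.51070 1.76999 3.56970
1.50000 7.26598 6.85565 7.66851
1.60000 7.28027 6.86977 7.67453
1.70000 7.26311 6.86645 7.66171
2.20000 3.64293 3.45370 3.82893
3.00000 4.64834 4.41387 4.89098"""


def parse_effective_area(text: str):
    """Parse a whitespace-separated table into a list of dicts keyed by header."""
    lines = text.strip().splitlines()
    header = lines[0].split()
    return [dict(zip(header, map(float, line.split()))) for line in lines[1:]]


def peak(rows, e_min: float = 0.0):
    """Return (energy, area) of the maximum area for energies strictly > e_min."""
    candidates = [r for r in rows if r["E(keV)"] > e_min]
    best = max(candidates, key=lambda r: r["Area(cm^2)"])
    return best["E(keV)"], best["Area(cm^2)"]


rows = parse_effective_area(EXCERPT)
e_peak, a_peak = peak(rows)
print(f"peak: {a_peak:.2f} cm^2 at {e_peak:.1f} keV")
```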

Plot the "final" effective area against the extracted data from the JCAP paper:

Note that we do not know with certainty that this is indeed the effective area used for the CAST Nature limit. That's just my assumption!

```nim
import ggplotnim
const path = "/home/basti/org/resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/EffectiveArea.txt"
const pathJCAP = "/home/basti/org/resources/llnl_xray_telescope_cast_effective_area.csv"
let dfJcap = readCsv(pathJCAP)
let df = readCsv(path, sep = ' ')
  .rename(f{"Energy[keV]" <- "E(keV)"},
          f{"EffectiveArea[cm²]" <- "Area(cm^2)"})
  .select("Energy[keV]", "EffectiveArea[cm²]")
let dfC = bind_rows([("JCAP", dfJcap), ("Nature", df)], "Type")
ggplot(dfC, aes("Energy[keV]", "EffectiveArea[cm²]", color = "Type")) +
  geom_line() +
  ggsave("/tmp/effective_area_jcap_vs_nature_llnl.pdf")
```

So it seems like the effective area here is even lower than the effective area in the JCAP LLNL paper! That's ridiculous.

HOWEVER, the shape seems to match much better with the shape we get from computing the effective area ourselves!

-> UPDATE: No, not really. I ran the code in journal.org with makePlot and makeRescaledPlot using dfJaimeNature as a rescaling reference using the 3 arcmin code. So the shape is very different after all.

[ ] Is there a chance the difference is due to xrayAttenuation? Note the weird energy-dependent linear offset when comparing the xrayAttenuation reflectivity to the DarpanX numbers! Could that shift be the reason?

- LLNL raytracing for axion image and CoolX X-ray finger

The DTU thesis contains raytracing images (from page 78) for the X-ray finger run and for the axion image.

- X-ray finger

The image (as a screenshot) from the X-ray finger:

where we can see a few things:

- the caption mentions the source was 14.2 m away from the optic. This is nonsensical. The magnet is 9.26 m long, and even with the cryo housing etc. we won't get much more than 10 m away from the telescope. The X-ray finger was installed in the bore of the magnet!

- it mentions the source being 6 mm in diameter (the text mentions the diameter explicitly). All we know about it is from the manufacturer, who gives the size as 15 mm. But there is nothing about the actual size of the emission surface.

- the resulting raytraced image has a size of only slightly less than 3 mm in the short axis and maybe about 3 mm in the long axis.

Regarding point 3: Our own X-ray finger data looks like this: file:///home/basti/phd/Figs/CAST_Alignment/xray_finger_centers_run_189.pdf (Note: it needs to be rotated, of course.) We can see that our real image is much larger! Along "x" it goes from about 5.5 to 10 mm or so, quite a bit larger, and along y from less than 4 to maybe 10 mm!

Given that we have the raytracing data from Jaime, let's plot their data to see if it actually looks like that:

```nim
import ggplotnim, sequtils, seqmath
let df = readCsv("/home/basti/org/resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/3.00keV_2Dmap_CoolX.txt",
                 sep = ' ', skipLines = 2, colNames = @["x", "y", "z"])
  .mutate(f{"x" ~ `x` - mean(`x`)},
          f{"y" ~ `y` - mean(`y`)})
var customInferno = inferno()
customInferno.colors[0] = 0 # transparent
ggplot(df, aes("x", "y", fill = "z")) +
  geom_raster() +
  scale_fill_gradient(customInferno) +
  xlab("x [mm]") + ylab("y [mm]") +
  ggtitle("LLNL raytracing of X-ray finger (Jaime)") +
  ggsave("~/org/Figs/statusAndProgress/rayTracing/raytracing_xray_finger_llnl_jaime.pdf")
ggplot(df.filter(f{`x` >= -7.0 and `x` <= 7.0 and `y` >= -7.0 and `y` <= 7.0}),
       aes("x", "y", fill = "z")) +
  geom_raster() +
  scale_fill_gradient(customInferno) +
  xlab("x [mm]") + ylab("y [mm]") +
  xlim(-7.0, 7.0) + ylim(-7.0, 7.0) +
  ggtitle("LLNL raytracing of X-ray finger zoomed (Jaime)") +
  ggsave("~/org/Figs/statusAndProgress/rayTracing/raytracing_xray_finger_llnl_jaime_gridpix_size.pdf")
```

This yields the following figure:

and cropped to the range of the GridPix:

This is MUCH bigger than the plot from the paper indicates. And the shape is also much more elongated! More in line with what we really see.

Let's use our raytracer to produce the X-ray finger according to the specification of 14.2 m first and then a more reasonable estimate.

Make sure to put the following into the config.toml file:

```toml
[TestXraySource]
useConfig = true # sets whether to read these values here. Can be overridden here or using flag `--testXray`
active = true # whether the source is active (i.e. Sun or source?)
sourceKind = "classical" # whether a "classical" source or the "sun" (Sun only for position *not* for energy)
parallel = false
energy = 3.0 # keV The energy of the X-ray source
distance = 14200 # 9260.0 #106820.0 #926000 #14200 #9260.0 #2000.0 # mm Distance of the X-ray source from the readout
radius = 3.0 #21.5 #44.661 #8.29729 #46.609 #4.04043 #3.0 #4.04043 #21.5 # #21.5 # mm Radius of the X-ray source
offAxisUp = 0.0 # mm
offAxisLeft = 0.0 # mm
activity = 0.125 # GBq The activity in `GBq` of the source
lengthCol = 0.0 #0.021 # mm Length of a collimator in front of the source
```

```sh
./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_xrayFinger_14.2m_3mm"
```

which more or less matches the size of our real data.

Now the same with a source that is 10 m away:

```toml
[TestXraySource]
useConfig = true # sets whether to read these values here. Can be overridden here or using flag `--testXray`
active = true # whether the source is active (i.e. Sun or source?)
sourceKind = "classical" # whether a "classical" source or the "sun" (Sun only for position *not* for energy)
parallel = false
energy = 3.0 # keV The energy of the X-ray source
distance = 10000 # 9260.0 #106820.0 #926000 #14200 #9260.0 #2000.0 # mm Distance of the X-ray source from the readout
radius = 3.0 #21.5 #44.661 #8.29729 #46.609 #4.04043 #3.0 #4.04043 #21.5 # #21.5 # mm Radius of the X-ray source
offAxisUp = 0.0 # mm
offAxisLeft = 0.0 # mm
activity = 0.125 # GBq The activity in `GBq` of the source
lengthCol = 0.0 #0.021 # mm Length of a collimator in front of the source
```

```sh
./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_xrayFinger_10m_3mm"
```

which is quite a bit bigger than our real data. Maybe we allow some angles that we shouldn't, i.e. the X-ray finger has a collimator? Or are our reflectivities too good for too large angles?

Without good knowledge of the real size of the X-ray finger emission this is hard to get right.
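One effect that plausibly contributes to the distance dependence: for a source at a finite distance u the focus moves behind the nominal focal plane, so a detector at ~1500 mm sees a defocused spot. A minimal sketch treating the optic as an ideal thin lens with f = 1500 mm (an approximation for a Wolter optic; u is measured from the optic here, whereas `distance` in the config above is documented as measured from the readout):

```python
F_LLNL_MM = 1500.0  # nominal LLNL focal length [mm]


def image_distance_mm(f_mm: float, source_distance_mm: float) -> float:
    """Thin-lens image distance: 1/f = 1/u + 1/v  ->  v = f * u / (u - f)."""
    u = source_distance_mm
    if u <= f_mm:
        raise ValueError("source must be farther away than the focal length")
    return f_mm * u / (u - f_mm)


for u in (14200.0, 12000.0, 10000.0):
    v = image_distance_mm(F_LLNL_MM, u)
    print(f"u = {u / 1000:.1f} m -> v = {v:.0f} mm (defocus {v - F_LLNL_MM:.0f} mm)")
```

The closer the source, the further the best focus lies behind the detector, i.e. the larger the spot. This is at least consistent in direction with the 10 m run giving a bigger image than the 14.2 m run; it does not by itself fix the absolute size, which also depends on the unknown emission area.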

- Axion image

The axion image as mentioned in the PhD thesis is the following:

First of all let's note that the caption talks about emission of a 3 arcminute source. Let's check the apparent size of the sun and the typical emission, which is from the inner 30%:

```nim
import unchained, math
let Rsun = 696_342.km # SOHO mission 2003 & 2006
# use the tangent to compute based on radius of sun:
# tan α = Rsun / 1.AU
# note: this gives the angular *radius* (~16'), i.e. half of the ~32' apparent diameter
echo "Apparent size of the sun = ", arctan(Rsun / 1.AU).Radian.to(ArcMinute)
echo "Typical emission sun from inner 30% = ", arctan(Rsun * 0.3 / 1.AU).Radian.to(ArcMinute)
let R3arc = (tan(3.ArcMinute.to(Radian)) * 1.AU).to(km)
echo "Used radius for 3' = ", R3arc
echo "As fraction of solar radius = ", R3arc / Rsun
```

So 3' corresponds to about 18.7% of the solar radius. All in all that seems reasonable at least.

Let's plot the axion image as we have it from Jaime's data:

```nim
import ggplotnim, seqmath
import std / [os, sequtils, strutils]

proc readRT(p: string): DataFrame =
  result = readCsv(p, sep = ' ', skipLines = 4, colNames = @["x", "y", "z"])
  result["File"] = p

proc meanData(df: DataFrame): DataFrame =
  result = df.mutate(f{"x" ~ `x` - mean(col("x"))},
                     f{"y" ~ `y` - mean(col("y"))})

proc plots(df: DataFrame, title, outfile: string) =
  var customInferno = inferno()
  customInferno.colors[0] = 0 # transparent
  ggplot(df, aes("x", "y", fill = "z")) +
    geom_raster() +
    scale_fill_gradient(customInferno) +
    xlab("x [mm]") + ylab("y [mm]") +
    ggtitle(title) +
    ggsave(outfile)
  ggplot(df.filter(f{`x` >= -7.0 and `x` <= 7.0 and `y` >= -7.0 and `y` <= 7.0}),
         aes("x", "y", fill = "z")) +
    geom_raster() +
    scale_fill_gradient(customInferno) +
    xlab("x [mm]") + ylab("y [mm]") +
    xlim(-7.0, 7.0) + ylim(-7.0, 7.0) +
    ggtitle(title & " (zoomed)") +
    ggsave(outfile.replace(".pdf", "_gridpix_size.pdf"))

block Single:
  let df = readRT("/home/basti/org/resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/3.00keV_2Dmap.txt")
    .meanData()
  df.plots("LLNL raytracing of axion image @ 3 keV (Jaime)",
           "~/org/Figs/statusAndProgress/rayTracing/raytracing_axion_image_llnl_jaime_3keV.pdf")

block All:
  var dfs = newSeq[DataFrame]()
  for f in walkFiles("/home/basti/org/resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/*2Dmap.txt"):
    echo "Reading: ", f
    dfs.add readRT(f)
  echo "Summarize"
  var df = dfs.assignStack()
  df = df.group_by(@["x", "y"])
    .summarize(f{float: "z" << sum(`z`)},
               f{float: "zMean" << mean(`z`)})
  df.writeCsv("/tmp/llnl_raytracing_jaime_all_energies_raw_sum.csv")
  df = df.meanData()
  df.writeCsv("/tmp/llnl_raytracing_jaime_all_energies.csv")
  plots(df, "LLNL raytracing of axion image (sum all energies) (Jaime)",
        "~/org/Figs/statusAndProgress/rayTracing/raytracing_axion_image_llnl_jaime_all_energies.pdf")
```

The 3 keV data for the axion image:

and cropped again:

And the sum of all energies:

and cropped again:

Both clearly show the weird symmetric shape, but - again - also do NOT reproduce the raytracing seen in the screenshot above! That one clearly has a very stark tiny center with the majority of the flux, which is gone here and replaced by a much wider region of significant flux!

Both are in strong contrast to our own axion image. Let's compute that using only the Primakoff flux (make sure to disable the X-ray test source in the config file!):

```sh
./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_axionImagePrimakoff_focal_point"
```

and for a more realistic image at the expected conversion point:

```toml
[DetectorInstallation]
useConfig = true # sets whether to read these values here. Can be overridden here or using flag `--detectorInstall`
# Note: 1500mm is LLNL focal length. That corresponds to center of the chamber!
distanceDetectorXRT = 1487.93 # mm
distanceWindowFocalPlane = 0.0 # mm
lateralShift = 0.0 # mm lateral offset of the detector with respect to the beamline
transversalShift = 0.0 # mm transversal offset of the detector with respect to the beamline #0.0.mm #
```

```sh
./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_axionImagePrimakoff_conversion_point"
```

which yields:

which is not that far off in size from the LLNL raytraced image. The shape is just quite different!


- Reply to Igor about LLNL telescope raytracing

Igor wrote me the following mail:

Hi Sebastian, Now that we are checking with Cristina the shape of the signal after the LLNL telescope for the SRMM analysis, I got two questions on your analysis:

- The signal spot shape that you present is different from the one we have for the Nature physics paper. Do you understand why? There was a change in the Ingrid setup wrt the SRMM setup that explains it, maybe?

- Do you have a spot calibration data that allows to crosscheck the position (and rotation) of the signal spot in the Ingrid chip coordinates?

Best, Igor

as a reply to my "Limit method for 7-GridPix @ CAST" mail on .

I ended up writing a lengthy reply.

The reply is also found here: ./../Mails/igorReplyLLNL/igor_reply_llnl_axion_image.html

- My reply

Hey,

sorry for the late reply. I didn't want to reply with one sentence for each question. While looking into the questions in more detail, more things came up.

One thing - embarrassingly - is that I completely forgot to apply the rotation of my detector in the limit calculation (in our case the detector is rotated by 90° compared to the "data" x-y plane). Added to that is the slight rotation of the LLNL axis, which I also need to include (here I simply forgot that we never added it to the raytracer. Given that the spacer is not visible in the axion image, it didn't occur to me).

Let's start with your second question

Do you have a spot calibration data that allows to crosscheck the position (and rotation) of the signal spot in the Ingrid chip coordinates?

Yes, we have two X-ray finger runs. Unfortunately, one of them is not useful, as it was taken in July 2017 after our detector had to be removed again to make space for a short KWISP data taking. We have a second one from April 2018, which is partially useful. However, the detector was again dismounted between April and October 2018 and we don't have an X-ray finger run for the last data taking between Oct 2018 to Dec 2018.

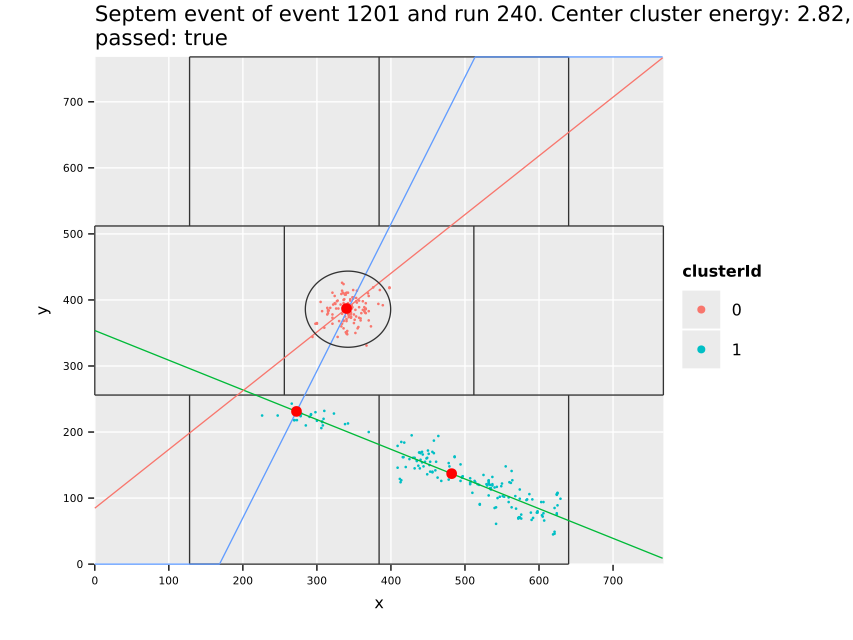

Fig. 14 shows the latter X-ray finger run. The two parallel lines with few clusters are two of the window strongbacks. The other line is the graphite spacer of the telescope. The center position of the clusters is at

- (x, y) = (7.43, 6.59) mm

(the chip center is at (7, 7) mm). This is what makes up the basis of our position systematic uncertainty of 5%. The 5% corresponds to 0.05 · 7 mm = 0.35 mm.

Figure 14: X-ray finger run from April 2018, which can be used as a rough guide for the spot center. Center position is at \((x, y) = (\SI{7.43}{mm}, \SI{6.59}{mm})\).

I decided not to move the actual center of the solar axion image because the X-ray finger data is hard to interpret for three different reasons:

- The entire CAST setup is "modified" in between normal data taking and the installation of the X-ray finger. Who knows what effect warming up the magnet etc. has on the spot position?

- determining the actual center position of the axion spot based on the X-ray finger cluster centers is problematic, because the LLNL telescope is only a portion of a full telescope. The resulting shape of the X-ray finger signal, combined with the data missing due to the window strongback and graphite spacer and the relatively low statistics in the first place, makes trusting the numbers problematic.

- as I said before, we don't even have an X-ray finger run for the last part of the data taking. While we have the geometer measurements from the targets, I don't have the patience to learn about the coordinate system they use and attempt to reconstruct the possible movement based on those measured coordinates.

Given that we take into account the possible movement in the systematics, I believe this is acceptable.

The signal spot shape that you present is different from the one we have for the Nature physics paper. Do you understand why? There was a change in the Ingrid setup wrt the SRMM setup that explains it, maybe?

Here we now come to the actual part that is frustrating for me, too. Unfortunately, due to the "black box" nature of the LLNL telescope, Johanna and I never fully understood this. We don't understand how the raytracing calculations done by Michael Pivovaroff can ever produce a symmetric image given that a) the LLNL telescope is not a perfect Wolter design but has cone-shaped mirrors, b) it is only a small portion of a full telescope and c) the incoming X-rays are not perfectly parallel. Intuitively I don't expect to have a symmetric image there. And our raytracing result does not produce anything like that.

A couple of years ago Johanna tried to find out more information about the LLNL raytracing results, but back then, when Julia and Jaime were still at LLNL, the answer was effectively "it's a secret, we can't provide more information".

As such all I can do is try to reproduce the results as well as possible. If they don't agree all I can do is provide explanations about what we compute and give other people access to my data, code and results. Then at least we can all hopefully figure out if there's something wrong with our approach.

Fig. 15 is the raytracing result as it is presented on page 78 of the PhD thesis of A. Jakobsen. It mentions that the Sun is considered as a 3' source, implying the inner ~18% of the Sun are contributing to axion emission.

Figure 15: Raytracing result as shown on page 78 (79 in PDF) of the PhD thesis of A. Jakobsen. Mentions a 3' source and has a very pronounced tiny focal spot.

If I compute this with our own raytracer for the focal spot, I get the plot shown in fig. \ref{fig:axion_image_primakoff_focal_spot}. Fig. \ref{fig:axion_image_primakoff_median_conv} then corresponds to the point that sees the median of all conversions in the gas based on X-ray absorption in the gas. This is now for the case of pure Primakoff emission and not for the dominant axion-electron coupling I showed in my presentation (this slightly changes the dominant contributions by radius, see fig. \ref{fig:radial_production_primakoff} for Primakoff and fig. \ref{fig:radial_production_axion_electron} for axion-electron). They look very similar, but there are slight changes between the two axion images.

This is one of the big reasons I want to have my own raytracing simulation. Different emission models result in different axion images!

\begin{figure}[htbp]
\centering
\begin{subfigure}{0.5\linewidth}
\centering
\includegraphics[width=0.95\textwidth]{/home/basti/org/Figs/statusAndProgress/rayTracing/raytracing_axion_image_primakoff_focal_point.pdf}
\caption{Focal spot}
\label{fig:axion_image_primakoff_focal_spot}
\end{subfigure}%
\begin{subfigure}{0.5\linewidth}
\centering
\includegraphics[width=0.95\textwidth]{/home/basti/org/Figs/statusAndProgress/rayTracing/raytracing_axion_image_primakoff_conversion_point.pdf}
\caption{Median conversion point}
\label{fig:axion_image_primakoff_median_conv}
\end{subfigure}
\label{fig:axion_image}
\caption{\subref{fig:axion_image_primakoff_focal_spot} Axion image for Primakoff emission from the Sun, computed for the exact LLNL focal spot. (Ignore the title) \subref{fig:axion_image_primakoff_median_conv} Axion image for the median conversion point of the X-rays actually entering the detector. }
\end{figure}

\begin{figure}[htbp]
\centering
\begin{subfigure}{0.5\linewidth}
\centering
\includegraphics[width=0.95\textwidth]{~/org/Figs/statusAndProgress/axionProduction/sampled_radii_primakoff.pdf}
\caption{Primakoff radii}
\label{fig:radial_production_primakoff}
\end{subfigure}%
\begin{subfigure}{0.5\linewidth}
\centering
\includegraphics[width=0.95\textwidth]{~/org/Figs/statusAndProgress/axionProduction/sampled_radii_axion_electron.pdf}
\caption{Axion-electron radii}
\label{fig:radial_production_axion_electron}
\end{subfigure}
\label{fig:radial_production}
\caption{\subref{fig:radial_production_primakoff} Radial production in the Sun for Primakoff emission. \subref{fig:radial_production_axion_electron} Radial production for axion-electron emission. }
\end{figure}

Note that this currently does not yet take into account the slight rotation of the telescope. I first need to extract the rotation angle from the X-ray finger run.

Fig. 16 is the sum of all energies of the raytracing results that Jaime finally sent to Cristina a couple of weeks ago. In this case cropped to the size of our detector, placed at the center. These should be - as far as I understand - the ones that the contours used in the Nature paper are based on. However, these clearly do not match the results shown in the PhD thesis of Jakobsen. The extremely small focus area in black is gone and replaced by a much more diffuse area. But again, it is very symmetric, which I don't understand.

Figure 16: Raytracing image (sum of all energies), presumably from LLNL. Likely what the Nature contours are based on.

While I was looking into this, I also thought I should attempt to reproduce the X-ray finger raytracing result. Here came another confusion, because the raytracing results for it shown in the PhD thesis, fig. 17, mention that the X-ray finger was placed \(\SI{14.2}{m}\) away from the optic with a diameter of \(\SI{6}{mm}\). That seems very wrong, given that the magnet bore is only \(\SI{9.26}{m}\) long. In total the entire magnet is - what - maybe \(\SI{10}{m}\)? At most it's maybe \(\SI{11}{m}\) to the telescope when the X-ray finger is installed in the bore? Unfortunately, the website about the X-ray finger from Amptek is not very helpful either:

https://www.amptek.com/internal-products/obsolete-products/cool-x-pyroelectric-x-ray-generator

as the only thing it says about the size is:

Miniature size: 0.6 in dia x 0.4 in (15 mm dia x 10 mm)

Figure 17: X-ray finger raytracing simulation from the PhD thesis of A. Jakobsen. Mentions a distance of \(\SI{14.2}{m}\) and a source diameter of \(\SI{6}{mm}\), but the size is only a bit more than \(2·\SI{2}{mm²}\).

It says nothing about the actual size of the area that emits X-rays. Neither do I know anything about a possible collimator.

Furthermore, the spot size seen here is only about \(\sim 2.5·\SI{3}{mm²}\) or so. Comparing it to the spot size seen with our detector it's closer to \(\sim 5·\SI{5}{mm²}\) or even a bit larger!

So I decided to run a raytracing following these numbers, i.e. \(\SI{14.2}{m}\) and a \(\SI{3}{mm}\) radius disk-shaped source without a collimator. That yields fig. 18. As we can see, the size is more in line with our actually measured data.

Figure 18: Raytracing result of an "X-ray finger" at a distance of \(\SI{14.2}{m}\) and diameter of \(\SI{6}{mm}\). Results in a size closer to our real X-ray finger result. (Ignore the title)

Again, I looked at the raytracing results that Jaime sent to Cristina, which include a file with the suffix "CoolX". That plot is shown in fig. 19. As we can see, it is suddenly also much larger than shown in the PhD thesis (more than \(4 · \SI{4}{mm²}\)), and slightly smaller than ours.

Note that the Nature paper mentions the source is about \(\SI{12}{m}\) away. I was never around when the X-ray finger was installed, nor do I have any good data about the real magnet size or lengths of the pipes between magnet and telescope.

Figure 19: LLNL raytracing image from Jaime. Shows a much larger size now than presented in the PhD thesis.

So, uhh, yeah. This is all very confusing. No matter where one looks regarding this telescope, one is bound to find contradictions or just confusing statements… :)

2.11.2. Information from NuSTAR PhD thesis

I found the following PhD thesis:

which is about the NuSTAR optic and also from DTU. It explains a lot of things:


- in the introductory part about multilayers it expands on why the low density material is at the top!

- Fig. 1.11 shows that indeed the spacers are 15° apart from one another.

- Fig. 1.11 mentions the graphite spacers are only 1.2 mm wide instead of 2 mm! But the DTU LLNL thesis explicitly mentions \(x_{gr} = \SI{2}{mm}\) on page 64.

- it has a plot of energy vs angle of the reflectivity similar to what we produce! It looks very similar.

- for the NuSTAR telescope they apparently have measurements of the surface roughness to μm levels, which are included in their simulations!

2.11.3. X-ray raytracers

Other X-ray raytracers:

- McXtrace from DTU and Synchrotron SOLEIL: https://www.mcxtrace.org/about/ https://github.com/McStasMcXtrace/McCode

- MTRAYOR (mentioned in the DTU NuSTAR PhD thesis), written in Yorick, a language developed at LLNL!
  - https://github.com/LLNL/yorick
  - https://en.wikipedia.org/wiki/Yorick_(programming_language)
  - https://web.archive.org/web/20170102091157/http://www.jeh-tech.com/yorick.html for an 'introduction'
  - manual: https://ftp.spacecenter.dk/pub/njw/MT_RAYOR/mt_rayor_man4.pdf

We have the MTRAYOR code here: ./../../src/mt_rayor/. It needs Yorick, which can be found here:

2.11.4. DTU FTP server [/]

The DTU has a publicly accessible FTP server with a lot of useful information. I found it by googling for MTRAYOR, because the manual is found there.

https://ftp.spacecenter.dk/pub/njw/

I have a mirror of the entire FTP here: ./../../Documents/ftpDTU/

[ ] Remove all files larger than X MB if they appear uninteresting to us.

2.11.5. Michael Pivovaroff talk about Axions, CAST, IAXO

Michael Pivovaroff giving a talk about axions, CAST, IAXO at LLNL: https://youtu.be/H_spkvp8Qkk

First he mentions (https://youtu.be/H_spkvp8Qkk?t=2372) "Then we took the telescope to PANTER", implying that yes, the CAST optic really was at PANTER. Then he wrongly says there was a 55Fe source at the other end of the magnet, while showing the X-ray finger data + simulation below that title. And finally, at https://youtu.be/H_spkvp8Qkk?t=2468, he says ABOUT HIS OWN RAYTRACING SIMULATION that it was a simulation for a source at infinity…

https://youtu.be/H_spkvp8Qkk?t=3134 He mentions Jaime and Julia wanted to write a paper about using NuSTAR data to set an ALP limit for reconversion of axions etc in the solar corona by looking at the center…

3. Theory

3.1. Solar axion flux

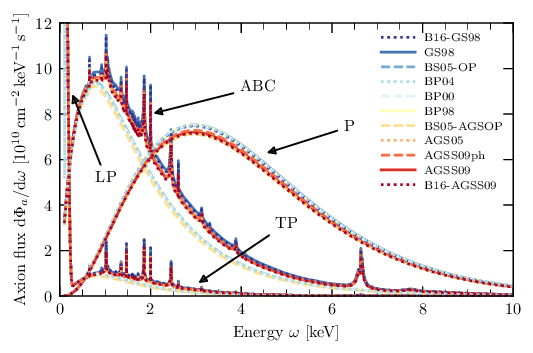

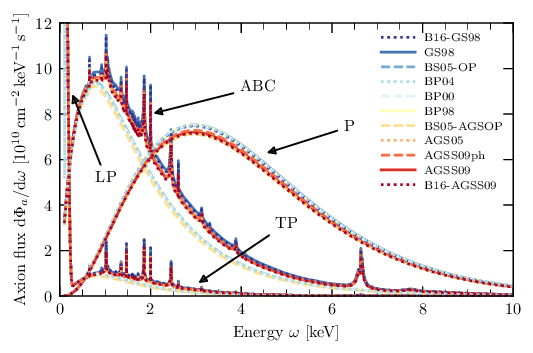

From ./../Papers/first_cast_results_physrevlett.94.121301.pdf

There are different analytical expressions for the solar axion flux for Primakoff production. These stem from the fact that a solar model is used to model the internal density, temperature, etc. in the Sun to compute the photon distribution (essentially the blackbody radiation) near the core. From it (after converting via the Primakoff effect) we get the axion flux.

Different solar models result in different expressions for the flux. The first one uses an older model, while the latter ones use newer models.

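Analytical flux from the first CAST result paper, with \(g_{10} = g_{a\gamma} \cdot 10^{10}\,\si{GeV}\):

\[ \frac{\mathrm{d}\Phi_a}{\mathrm{d}E_a} = g_{10}^2 \cdot \SI{3.821e10}{cm^{-2}.s^{-1}.keV^{-1}} \cdot \frac{(E_a / \si{keV})^3}{\exp\left(E_a / \SI{1.103}{keV}\right) - 1}, \]

which results in an integrated flux of

\[ \Phi_a = g_{10}^2 \cdot \SI{3.67e11}{cm^{-2}.s^{-1}}. \]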
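The quoted integrated flux follows from the differential expression, since ∫₀^∞ E³ / (exp(E/T) - 1) dE = T⁴ π⁴ / 15 with T = 1.103 keV. A small Python sketch checking this numerically with Simpson's rule (the upper limit of 40 keV and the step count are my choices; the tail beyond 40 keV is negligible):

```python
import math


def dphi_de_cast(e_kev: float, g10: float = 1.0) -> float:
    """First-CAST-paper analytic Primakoff flux [cm^-2 s^-1 keV^-1]."""
    if e_kev <= 0.0:
        return 0.0  # integrand behaves like E^2 for E -> 0
    return g10 ** 2 * 3.821e10 * e_kev ** 3 / math.expm1(e_kev / 1.103)


def simpson(f, a: float, b: float, n: int = 20000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of intervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3.0


total = simpson(dphi_de_cast, 0.0, 40.0)
closed_form = 3.821e10 * 1.103 ** 4 * math.pi ** 4 / 15
print(f"numerical: {total:.3e}, closed form: {closed_form:.3e} cm^-2 s^-1")
```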

In comparison I used in my master thesis:

```python
import numpy as np

def axion_flux_primakoff(w, g_ay):
    # axion flux produced by the Primakoff effect
    # in units of m^(-2) year^(-1) keV^(-1)
    # w: axion energy in keV, g_ay: axion-photon coupling in GeV^-1
    val = 2.0 * 10**18 * (g_ay / 10**(-12))**2 * w**(2.450) * np.exp(-0.829 * w)
    return val
```

(./../../Documents/Masterarbeit/PyAxionFlux/PyAxionFlux.py / ./../Code/CAST/PyAxionFlux/PyAxionFlux.py) The version I use is from the CAST paper about the axion electron coupling: ./../Papers/cast_axion_electron_jcap_2013_pnCCD.pdf eq. 3.1 on page 7.

Another comparison from here:

- Weighing the solar axion

Contains, among others, a plot and (newer) description for the solar axion flux (useful as a comparison)

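\[ \Phi_{P10} = \SI{6.02e10}{cm^{-2}.s^{-1}.keV^{-1}} \]

\[ \frac{\mathrm{d}\Phi_a}{\mathrm{d}E_a} = \Phi_{P10} \left(\frac{g_{a\gamma}}{\SI{1e-10}{GeV^{-1}}}\right)^2 \left(\frac{E_a}{\si{keV}}\right)^{2.481} \exp\left(-\frac{E_a}{\SI{1.205}{keV}}\right) \]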
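Converting this normalization into the units of the master thesis function (m⁻² yr⁻¹ keV⁻¹ at g_aγ = 10⁻¹² GeV⁻¹) gives ≈1.9e18, close to the 2.0e18 used there; the spectral parameters are similar too (exponent 2.481 vs 2.450, exponential slope 1/1.205 keV⁻¹ ≈ 0.830 keV⁻¹ vs 0.829 keV⁻¹). A sketch of the conversion, assuming a Julian year of 365.25 days and flux ∝ g_aγ²:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year (assumption)
CM2_PER_M2 = 1.0e4                     # 1 m² = 1e4 cm²


def to_thesis_units(phi_cm2_s_kev: float, g_ref_from: float = 1e-10,
                    g_ref_to: float = 1e-12) -> float:
    """Convert a normalization in cm^-2 s^-1 keV^-1 at g_ref_from [GeV^-1]
    into m^-2 yr^-1 keV^-1 at g_ref_to [GeV^-1], using flux ∝ g_aγ²."""
    per_m2_yr = phi_cm2_s_kev * CM2_PER_M2 * SECONDS_PER_YEAR
    return per_m2_yr * (g_ref_to / g_ref_from) ** 2


norm = to_thesis_units(6.02e10)
print(f"{norm:.2e} m^-2 yr^-1 keV^-1")
```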

3.1.1. Solar axion-electron flux

We compute the differential axion flux using ./../../CastData/ExternCode/AxionElectronLimit/src/readOpacityFile.nim

We have a version of the plot that is generated by it here:

but let's generate one from the setup we use as a "base" at CAST, namely the file: ./../resources/differential_flux_sun_earth_distance/solar_axion_flux_differential_g_ae_1e-13_g_ag_1e-12_g_aN_1e-15_0.989AU.csv which uses a distance Sun ⇔ Earth of 0.989 AU, corresponding to the mean of all solar trackings we took at CAST.

import ggplotnim
const path = "~/org/resources/differential_flux_sun_earth_distance/solar_axion_flux_differential_g_ae_1e-13_g_ag_1e-12_g_aN_1e-15_0.989AU.csv"
let df = readCsv(path)
  .filter(f{`type` notin ["LP Flux", "TP Flux", "57Fe Flux"]})
echo df
ggplot(df, aes("Energy", "diffFlux", color = "type")) +
  geom_line() +
  xlab(r"Energy [$\si{keV}$]", margin = 1.5) +
  ylab(r"Flux [$\si{keV^{-1}.cm^{-2}.s^{-1}}$]", margin = 2.75) +
  ggtitle(r"Differential solar axion flux for $g_{ae} = \num{1e-13}, g_{aγ} = \SI{1e-12}{GeV^{-1}}$") +
  xlim(0, 10) +
  margin(top = 1.5, left = 3.25) +
  theme_transparent() +
  ggsave("~/org/Figs/statusAndProgress/differential_flux_sun_earth_distance/differential_solar_axion_fluxg_ae_1e-13_g_ag_1e-12_g_aN_1e-15_0.989AU.pdf",
         width = 800, height = 480, useTeX = true, standalone = true)
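To turn the differential fluxes in this CSV into a total flux per component, here is a minimal sketch using only the Python standard library. The helper name is hypothetical. The sketch assumes the columns `Energy` (keV), `diffFlux` (keV⁻¹ cm⁻² s⁻¹) and `type` used in the plot above. It applies the same exclusion of `LP Flux`, `TP Flux` and `57Fe Flux` and integrates with the trapezoidal rule.

```python
import csv
import io
from collections import defaultdict

EXCLUDED = {"LP Flux", "TP Flux", "57Fe Flux"}  # same types filtered out in the Nim code

def integrated_flux_per_type(csv_text):
    """Trapezoidal integral of diffFlux over Energy for every flux type."""
    points = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["type"] in EXCLUDED:
            continue
        points[row["type"]].append((float(row["Energy"]), float(row["diffFlux"])))
    totals = {}
    for kind, pts in points.items():
        pts.sort()  # make sure energies are increasing
        totals[kind] = sum((e1 - e0) * (f0 + f1) / 2
                           for (e0, f0), (e1, f1) in zip(pts, pts[1:]))
    return totals

# usage with a tiny made-up table (not real flux values):
demo = "Energy,diffFlux,type\n0,0,A\n1,1,A\n2,2,A\n1,5,LP Flux\n"
print(integrated_flux_per_type(demo))  # {'A': 2.0}
```

For the real file one would pass `open(path).read()` (with `~` expanded) instead of the toy string.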

3.1.2. Radial production

The raytracer now also produces plots of the radial emission profile of axion production in the Sun.

With our default file (axion-electron)

solarModelFile = "solar_model_dataframe.csv"

running via:

./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_axion_electron" --sanity

yields

And for the Primakoff flux, using the new file:

solarModelFile = "solar_model_dataframe_fluxKind_fkAxionPhoton_0.989AU.csv" #solar_model_dataframe.csv"

running:

./raytracer --ignoreDetWindow --ignoreGasAbs --suffix "_primakoff" --sanity

we get

3.2. Axion conversion probability

Ref:

Biljana's and Kreso's notes on the axion-photon interaction here:

Further see the notes on the IAXO gas phase:

which contains the explicit form of \(P\) in the next equation!

I think it should be straightforward to derive this one from what is given in the former PDF in eq. (3.41) (or its derivation).

[ ] Investigate this. There is a chance it is non-trivial due to Γ. The first PDF includes \(m_γ\), but does not mention gas in any way, so I'm not sure how one ends up at the latter. Potentially by 'folding' with the losses after the conversion?

The axion-photon conversion probability \(P_{a\rightarrow\gamma}\) in general is given by:

\begin{equation} \label{eq_conversion_prob} P_{a\rightarrow\gamma} = \left(\frac{g_{a\gamma} B}{2}\right)^2 \frac{1}{q^2 + \Gamma^2 / 4} \left[ 1 + e^{-\Gamma L} - 2e^{-\frac{\Gamma L}{2}} \cos(qL)\right], \end{equation}where \(\Gamma\) is the inverse absorption length of photons in the medium (i.e. the attenuation coefficient) and \(q\) is the momentum transfer between axion and photon.
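Here is a minimal sketch implementing eq. \eqref{eq_conversion_prob} with toy dimensionless values (all quantities must be in consistent natural units; the helper names are illustrative). It checks the equation against its well-known vacuum limit \(\left(\frac{g_{aγ} B L}{2}\right)^2 \left(\frac{\sin δ}{δ}\right)^2\) with \(δ = qL/2\). It also confirms that absorption (\(Γ > 0\)) lowers the probability below the fully coherent value.

```python
import math

def p_general(gB_half, q, gamma, L):
    """Eq. (eq_conversion_prob); gB_half = g B / 2. Needs q != 0 or gamma != 0."""
    prefactor = gB_half**2 / (q**2 + gamma**2 / 4)
    return prefactor * (1 + math.exp(-gamma * L)
                        - 2 * math.exp(-gamma * L / 2) * math.cos(q * L))

def p_vacuum(gB_half, q, L):
    """(g B L / 2)^2 (sin(delta) / delta)^2 with delta = q L / 2 (needs q != 0)."""
    delta = q * L / 2
    return (gB_half * L) ** 2 * (math.sin(delta) / delta) ** 2

gB_half, L = 1e-3, 1.0   # toy values for g B / 2 and L
for q in (0.1, 0.7, 3.0):
    print(q, p_general(gB_half, q, 0.0, L), p_vacuum(gB_half, q, L))
# with absorption, even q = 0 stays below the fully coherent (g B L / 2)^2
print(p_general(gB_half, 0.0, 0.5, L), (gB_half * L) ** 2)
```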

The coherence condition for axions is

\[ qL < π, \quad q = \frac{m_a^2}{2 E_a}, \]

with \(L\) the length of the magnetic field (20 m for IAXO, 10 m for BabyIAXO), \(m_a\) the axion mass and \(E_a\) the axion energy (taken from the solar axion spectrum).
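Take the vacuum coherence condition in its usual form \(qL < π\) with \(q = m_a^2/(2E_a)\). The largest axion mass that still converts coherently is then \(m_a = \sqrt{2πE_a/L}\). Here is a minimal sketch computing it, reusing the metre-to-eV⁻¹ factor printed by unchained later in this section. The helper names and the 3 keV axion energy are illustrative choices only.

```python
import math

M_IN_INV_EV = 5.06773e6  # 1 m in eV^-1 (unchained output in this section)

def q_vacuum(m_a_eV, E_a_eV):
    """Momentum transfer q = m_a^2 / (2 E_a) in eV."""
    return m_a_eV**2 / (2 * E_a_eV)

def max_coherent_mass(E_a_eV, L_m):
    """Largest m_a [eV] satisfying q L <= pi for a magnet of length L_m [m]."""
    L_nat = L_m * M_IN_INV_EV
    return math.sqrt(2 * math.pi * E_a_eV / L_nat)

for name, L in [("CAST", 9.26), ("BabyIAXO", 10.0), ("IAXO", 20.0)]:
    print(f"{name:9s} L = {L:5.2f} m: m_a,max ≈ {max_coherent_mass(3000.0, L):.4f} eV")
```

Longer magnets lose coherence at smaller masses, since \(m_{a,\text{max}} \propto 1/\sqrt{L}\).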

In the presence of a low pressure gas, the photon acquires an effective mass \(m_{\gamma}\), resulting in a new \(q\):

\[ q = \frac{\left| m_{\gamma}^2 - m_a^2 \right|}{2 E_a} \]

Thus, we first need values for the effective photon mass in a low pressure gas, preferably helium.

From this we can see that coherence in the gas is restored for \(m_{\gamma} = m_a\), since \(q \rightarrow 0\) for \(m_a \rightarrow m_{\gamma}\). This means that in this case the energy of the incoming axion is irrelevant for the sensitivity!
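For the effective photon mass values needed above, a common starting point is the plasma frequency of the gas electrons, \(m_γ = \sqrt{4πα n_e / m_e}\) in natural units. Here is a minimal sketch (helper names illustrative). It assumes all \(Z\) electrons per atom act as free electrons for keV photons, and an ideal gas for the density. The helium point at 1 mbar and 1.8 K is only an illustrative input, not a CAST operating value.

```python
import math

# CODATA 2018 values
ALPHA = 1 / 137.035999084       # fine-structure constant
M_E_EV = 510998.95              # electron mass [eV]
N_A = 6.02214076e23             # Avogadro constant [1/mol]
HBARC_EV_CM = 1.973269804e-5    # hbar*c [eV cm]
R_GAS = 8.314462618             # molar gas constant [J/(mol K)]

def photon_mass_eV(rho_g_cm3, Z, A_g_mol):
    """Plasma frequency sqrt(4 pi alpha n_e / m_e) in eV.
    Assumption: all Z electrons per atom act as free electrons for keV photons."""
    n_e_cm3 = Z * rho_g_cm3 * N_A / A_g_mol     # electrons per cm^3
    n_e_eV3 = n_e_cm3 * HBARC_EV_CM**3          # cm^-3 -> eV^3
    return math.sqrt(4 * math.pi * ALPHA * n_e_eV3 / M_E_EV)

def ideal_gas_density_g_cm3(p_Pa, T_K, A_g_mol):
    """Ideal gas law rho = p M / (R T); 1 kg/m^3 = 1e-3 g/cm^3."""
    return p_Pa * (A_g_mol * 1e-3) / (R_GAS * T_K) * 1e-3

print(f"m_gamma(rho = 1 g/cm^3, Z/A = 1) ≈ {photon_mass_eV(1.0, 1, 1.0):.2f} eV")
# illustrative helium point (assumption: 1 mbar, 1.8 K, ideal gas)
rho_he = ideal_gas_density_g_cm3(100.0, 1.8, 4.0026)
print(f"helium: rho ≈ {rho_he:.3e} g/cm^3, m_gamma ≈ {photon_mass_eV(rho_he, 2, 4.0026):.4f} eV")
```

For \(ρ = \SI{1}{g/cm^3}\) and \(Z/A = 1\) the prefactor comes out at about 28.8 eV, so \(m_γ\) scales as \(\sqrt{Zρ/A}\).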

Analytically the vacuum conversion probability can be derived from the expression eq. \eqref{eq_conversion_prob} by simplifying \(q\) for \(m_{\gamma} \rightarrow 0\) and \(\Gamma = 0\):

\begin{align} \label{eq_conversion_prob_vacuum} P_{a\rightarrow\gamma, \text{vacuum}} &= \left(\frac{g_{a\gamma} B}{2}\right)^2 \frac{1}{q^2} \left[ 1 + 1 - 2 \cos(qL) \right] \\ P_{a\rightarrow\gamma, \text{vacuum}} &= \left(\frac{g_{a\gamma} B}{2}\right)^2 \frac{2}{q^2} \left[ 1 - \cos(qL) \right] \\ P_{a\rightarrow\gamma, \text{vacuum}} &= \left(\frac{g_{a\gamma} B}{2}\right)^2 \frac{2}{q^2} \left[ 2 \sin^2\left(\frac{qL}{2}\right) \right] \\ P_{a\rightarrow\gamma, \text{vacuum}} &= \left(g_{a\gamma} B\right)^2 \frac{1}{q^2} \sin^2\left(\frac{qL}{2}\right) \\ P_{a\rightarrow\gamma, \text{vacuum}} &= \left(\frac{g_{a\gamma} B L}{2} \right)^2 \left(\frac{\sin\left(\frac{qL}{2}\right)}{ \left( \frac{qL}{2} \right)}\right)^2 \\ P_{a\rightarrow\gamma, \text{vacuum}} &= \left(\frac{g_{a\gamma} B L}{2} \right)^2 \left(\frac{\sin\left(\delta\right)}{\delta}\right)^2 \end{align}with \(\delta = qL/2\). In the fully coherent limit \(qL \ll 1\) (where \(\sin\delta / \delta \to 1\)) the conversion probability simplifies to:

\[ P(g_{aγ}, B, L) = \left(\frac{g_{aγ} \cdot B \cdot L}{2}\right)^2 \] in natural units, where the relevant numbers for the CAST magnet are:

- \(B = \SI{8.8}{T}\)

- \(L = \SI{9.26}{m}\)

and the basic axion-electron analysis uses a fixed axion-photon coupling of \(g_{aγ} = \SI{1e-12}{\per\giga\electronvolt}\).

This requires either converting the equation to SI units by adding the "missing" constants, or converting the SI quantities into natural units. As the result is a unitless number, the latter approach is simpler.

The conversion factors from Tesla and meter to natural units are as follows:

import unchained
echo "Conversion factor Tesla: ", 1.T.toNaturalUnit()
echo "Conversion factor Meter: ", 1.m.toNaturalUnit()

Conversion factor Tesla: 195.353 ElectronVolt²
Conversion factor Meter: 5.06773e+06 ElectronVolt⁻¹
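These two factors follow directly from CODATA constants. Here is a minimal sketch reproducing them in plain Python (helper names illustrative). It assumes Heaviside-Lorentz natural units (\(\hbar = c = ε_0 = 1\)), which is what the values printed by unchained correspond to. The metre follows from \(\hbar c\). The tesla follows from matching the magnetic energy density \(B^2/(2μ_0)\) to \(B^2/2\) in natural units.

```python
import math

# CODATA 2018 values (SI)
HBAR = 1.054571817e-34     # J s
C = 299792458.0            # m / s
EV = 1.602176634e-19       # J per eV
MU0 = 1.25663706212e-6     # N / A^2

hbarc_eV_m = HBAR * C / EV           # hbar*c ≈ 1.97327e-7 eV m
metre_in_inv_eV = 1.0 / hbarc_eV_m   # 1 m = 1/(hbar c) eV^-1

def tesla_in_eV2(B_T=1.0):
    """B in eV^2 from matching B^2/(2 mu0) [J/m^3] to B_nat^2/2 [eV^4]."""
    u_si = B_T**2 / (2 * MU0)              # magnetic energy density, J / m^3
    u_nat = u_si / EV * hbarc_eV_m**3      # eV / m^3 -> eV^4
    return math.sqrt(2 * u_nat)

print(f"1 T = {tesla_in_eV2():.3f} eV^2, 1 m = {metre_in_inv_eV:.5e} eV^-1")
```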

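As such, the resulting conversion probability ends up as follows (note that the calculation uses \(B = \SI{9}{T}\) rather than the \(\SI{8.8}{T}\) listed above):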

import unchained, math
echo "9 T = ", 9.T.toNaturalUnit()
echo "9.26 m = ", 9.26.m.toNaturalUnit()
echo "P = ", pow( 1e-12.GeV⁻¹ * 9.T.toNaturalUnit() * 9.26.m.toNaturalUnit() / 2.0, 2.0)

9 T = 1758.18 ElectronVolt²
9.26 m = 4.69272e+07 ElectronVolt⁻¹
P = 1.701818225891982e-21

\begin{align} P(g_{aγ}, B, L) &= \left(\frac{g_{aγ} \cdot B \cdot L}{2}\right)^2 \\ &= \left(\frac{\SI{1e-12}{GeV^{-1}} \cdot \SI{1758.18}{eV^2} \cdot \SI{4.693e7}{eV^{-1}}}{2}\right)^2 \\ &= \num{1.702e-21} \end{align}Note that this is of the same (inverse) order of magnitude as the flux of solar axions (\(\sim10^{21}\) in some sensible unit of time), meaning the experiment expects \(\mathcal{O}(1)\) counts, which is sensible.
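Here is the same arithmetic as a minimal Python sketch, using the conversion factors printed by unchained (the helper name is illustrative). The list of magnet parameters quotes \(B = \SI{8.8}{T}\) while the calculation uses 9 T, so the sketch evaluates both.

```python
T_IN_EV2 = 195.353       # 1 T in eV^2 (unchained output above)
M_IN_INV_EV = 5.06773e6  # 1 m in eV^-1 (unchained output above)

def conversion_probability(g_per_GeV, B_T, L_m):
    """P = (g B L / 2)^2 with everything converted to natural units (eV)."""
    g_per_eV = g_per_GeV * 1e-9          # GeV^-1 -> eV^-1
    return (g_per_eV * B_T * T_IN_EV2 * L_m * M_IN_INV_EV / 2) ** 2

for B in (9.0, 8.8):
    print(f"B = {B} T: P = {conversion_probability(1e-12, B, 9.26):.4e}")
# B = 9.0 T: P ≈ 1.7018e-21, B = 8.8 T: P ≈ 1.6270e-21
```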

import unchained, math
echo "9 T = ", 9.T.toNaturalUnit()
echo "9.26 m = ", 9.26.m.toNaturalUnit()
echo "P(natural) = ", pow( 1e-12.GeV⁻¹ * 9.T.toNaturalUnit() * 9.26.m.toNaturalUnit() / 2.0, 2.0)
echo "P(SI) = ", ε0 * (hp / (2*π)) * (c^3) * (1e-12.GeV⁻¹ * 9.T * 9.26.m / 2.0)^2

3.2.1. Deriving the missing constants in the conversion probability

The conversion probability is given in natural units. In order to plug in SI units directly without the need for a conversion to natural units for the magnetic field and length, we need to reconstruct the missing constants.

The relevant constants in natural units are:

\begin{align*} ε_0 &= \SI{8.8541878128e-12}{A.s.V^{-1}.m^{-1}} \\ c &= \SI{299792458}{m.s^{-1}} \\ \hbar &= \frac{\SI{6.62607015e-34}{J.s}}{2π} \end{align*}which are each set to 1.

If we plug in the definition of a volt we get for \(ε_0\) units of:

\[ \left[ ε_0 \right] = \frac{\si{A^2.s^4}}{\si{kg.m^3}} \]

The conversion probability naively in natural units has units of:

\[ \left[ P_{aγ, \text{natural}} \right] = \frac{\si{T^2.m^2}}{J^2} = \frac{1}{\si{A^2.m^2}} \]

where we use the fact that \(g_{aγ}\) has units of \(\si{GeV^{-1}}\) which is equivalent to units of \(\si{J^{-1}}\) (care has to be taken with the rest of the conversion factors of course!) and Tesla in SI units:

\[ \left[ B \right] = \si{T} = \frac{\si{kg}}{\si{s^2.A}} \]

From the appearance of \(\si{A^2}\) in the units of \(P_{aγ, \text{natural}}\) we know a factor of \(ε_0\) is missing. This leaves the question of the correct powers of \(\hbar\) and \(c\), which come out to:

\begin{align*} \left[ ε_0 \hbar c^3 \right] &= \frac{\si{A^2.s^4}}{\si{kg.m^3}} \frac{\si{kg.m^2}}{\si{s}} \frac{\si{m^3}}{\si{s^3}} \\ &= \si{A^2.m^2}. \end{align*}So the correct expression in SI units is:

\[ P_{aγ} = ε_0 \hbar c^3 \left( \frac{g_{aγ} B L}{2} \right)^2 \]

where now only \(g_{aγ}\) needs to be expressed in units of \(\si{J^{-1}}\) for a correct result using tesla and meter.
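Here is a minimal sketch evaluating the SI expression directly with CODATA values (plain Python rather than unchained; the helper name is illustrative). For \(g_{aγ} = \SI{1e-12}{GeV^{-1}}\), \(B = \SI{9}{T}\) and \(L = \SI{9.26}{m}\) it reproduces the natural-unit result of \(\approx \num{1.70e-21}\).

```python
import math

# CODATA 2018 values (SI)
EPS0 = 8.8541878128e-12                 # A s V^-1 m^-1
HBAR = 6.62607015e-34 / (2 * math.pi)   # J s
C = 299792458.0                         # m s^-1
EV = 1.602176634e-19                    # J per eV

def conversion_probability_si(g_per_GeV, B_T, L_m):
    """P = eps0 * hbar * c^3 * (g B L / 2)^2 with g in J^-1, B in T, L in m."""
    g_per_J = g_per_GeV / (1e9 * EV)  # GeV^-1 -> J^-1
    return EPS0 * HBAR * C**3 * (g_per_J * B_T * L_m / 2) ** 2

print(f"P = {conversion_probability_si(1e-12, 9.0, 9.26):.4e}")  # ≈ 1.7018e-21
```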

3.3. Gaseous detector physics

I am quite confused about the following.

In the Bethe equation there is the factor \(I\), the mean excitation energy. It is roughly \(I(Z) \approx \SI{10}{eV} \cdot Z\), where \(Z\) is the atomic number of the element.

To determine the number of primary electrons however we have the distinction between:

- the actual (first) ionization energy of the atoms / molecules, e.g. ~15.8 eV for argon gas

- the "average ionization energy per ion pair" \(w\), which is the well known 26 eV for argon gas

- where does the difference between \(I\) and \(w\) come from? What does one mean vs. the other? They differ by almost an order of magnitude after all (\(I \approx \SI{180}{eV}\) vs. \(w = \SI{26}{eV}\) for argon)!

- why the large distinction between the ionization energy and the average energy per ion pair? Is it only because of rotational / vibrational modes of the molecules?
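To put numbers to these quantities, here is a minimal sketch using only the rule of thumb \(I \approx \SI{10}{eV} \cdot Z\) and \(w = \SI{26}{eV}\) quoted above (helper names illustrative). The 5.9 keV photon (the \(^{55}\)Fe line) is an illustrative input. The mean number of primary electrons is simply \(E/w\), whereas \(I\) enters the Bethe formula for the stopping power instead.

```python
W_ARGON_EV = 26.0   # mean energy per electron-ion pair in argon (value quoted above)

def mean_excitation_energy_eV(Z):
    """Rough rule of thumb for the Bethe mean excitation energy, I ≈ 10 eV * Z."""
    return 10.0 * Z

def mean_primary_electrons(E_photon_eV, w_eV=W_ARGON_EV):
    """Mean number of electron-ion pairs for a fully absorbed photon, N = E / w."""
    return E_photon_eV / w_eV

I_ar = mean_excitation_energy_eV(18)
print(f"I(Ar) ≈ {I_ar:.0f} eV, w(Ar) = {W_ARGON_EV} eV, ratio ≈ {I_ar / W_ARGON_EV:.1f}")
print(f"5.9 keV photon: ≈ {mean_primary_electrons(5900.0):.0f} primary electrons")
```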

Relevant references:

- PDG chapter 33 (Bethe, losses) and 34 (Gaseous detector)

- Mean excitation energies for the stopping power of atoms and molecules evaluated from oscillator-strength spectra https://aip.scitation.org/doi/10.1063/1.2345478 about the mean excitation energy I

- A method to improve tracking and particle identification in TPCs and silicon detectors https://doi.org/10.1016/j.nima.2006.03.009 about a more accurate description of energy losses in gases

This is all very confusing.

3.3.1. Average distance X-rays travel in Argon at CAST conditions

In order to be able to compute the correct distance to use in the raytracer for the position of the axion image, we need a good understanding of where the average X-ray will convert in the gas.

By combining the expected axion flux (or rather that folded with the telescope and window transmission to get the correct energy distribution) with the absorption length of X-rays at different energies we can compute a weighted mean of all X-rays and come up with a single number.

For that reason we wrote xrayAttenuation.

Let's give it a try.

- Analytical approach

import xrayAttenuation, ggplotnim, unchained
# 1. read the file containing efficiencies
var effDf = readCsv("/home/basti/org/resources/combined_detector_efficiencies.csv")
  .mutate(f{"NoGasEff" ~ idx("300nm SiN") * idx("20nm Al") * `LLNL`})
# 2. compute the absorption length for Argon
let ar = Argon.init()
let ρ_Ar = density(1050.mbar.to(Pascal), 293.K, ar.molarMass)
effDf = effDf
  .filter(f{idx("Energy [keV]") > 0.05})
  .mutate(f{float: "l_abs" ~ absorptionLength(ar, ρ_Ar, idx("Energy [keV]").keV).float})
# compute the weighted mean of the effective flux behind the window with the
# absorption length, i.e.
# `<x> = Σ_i (ω_i x_i) / Σ_i ω_i`
let weightedMean = (effDf["NoGasEff", float] *. effDf["l_abs", float]).sum() / effDf["NoGasEff", float].sum()
echo "Weighted mean of distance: ", weightedMean.Meter.to(cm)
# for reference the effective flux:
ggplot(effDf, aes("Energy [keV]", "NoGasEff")) +
  geom_line() +
  ggsave("/tmp/combined_efficiency_no_gas.pdf")
ggplot(effDf, aes("Energy [keV]", "l_abs")) +
  geom_line() +
  ggsave("/tmp/absorption_length_argon_cast.pdf")

This means the "effective" position of the axion image should be 0.0122 m or 1.22 cm in the detector. This is (fortunately) relatively close to the 1.5 cm (center of the detector) that we used so far.
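The two ingredients of the Nim code can be sketched in plain Python. The first is the argon density at 1050 mbar and 293 K, assuming `density` implements the ideal gas law \(ρ = pM/(RT)\). The second is the weighted mean \(\langle x \rangle = \sum_i ω_i x_i / \sum_i ω_i\). The helper names are illustrative. The weights and lengths in the last line are made-up numbers, not the actual efficiencies or absorption lengths.

```python
R_GAS = 8.314462618  # J / (mol K)

def ideal_gas_density(p_Pa, T_K, M_kg_per_mol):
    """rho = p M / (R T) in kg/m^3."""
    return p_Pa * M_kg_per_mol / (R_GAS * T_K)

def weighted_mean(weights, values):
    """<x> = sum_i w_i x_i / sum_i w_i"""
    return sum(w * x for w, x in zip(weights, values)) / sum(weights)

rho_ar = ideal_gas_density(1050e2, 293.0, 39.948e-3)
print(f"rho_Ar ≈ {rho_ar:.3f} kg/m^3")         # ≈ 1.722 kg/m^3
print(weighted_mean([1.0, 3.0], [0.01, 0.02]))  # toy weights and lengths
```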

[X] Is the above even correct? The absorption length describes the distance after which only a fraction 1/e of the particles is left, i.e. at that distance a fraction (1 - 1/e) has been absorbed. To get a number, don't we need a Monte Carlo (or some kind of integral) of the average? -> Well, the mean of an exponential distribution is 1/λ (if defined as \(\exp(-λx)\)!), so from that point of view I think the above is perfectly adequate! Note however that the median of the distribution is \(\frac{\ln 2}{λ}\)! When looking at the distribution of our transverse RMS values, for example, the peak corresponds to something closer to the median (but not exactly the median either; the peak is the 'mode' of the distribution). Arguably more interesting is the cutoff we see in the data, as that corresponds to the largest possible diffusion (but again that is folded with the statistics of getting a larger RMS! :/ )
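Here is a quick Monte Carlo check of this argument (helper name illustrative). The minimal sketch draws exponentially distributed conversion depths for a single absorption length, using 1.22 cm purely as an example. It compares the sample mean and median to \(l_{\text{abs}} = 1/λ\) and \(\ln 2/λ\). It ignores the finite detector depth.

```python
import math
import random
import statistics

def sample_depths(l_abs, n, seed=1):
    """Draw conversion depths from exp(-x / l_abs) / l_abs (infinitely deep gas)."""
    rng = random.Random(seed)
    return [rng.expovariate(1.0 / l_abs) for _ in range(n)]

l_abs = 1.22  # cm, example value
depths = sample_depths(l_abs, 200_000)
print(f"mean   {statistics.fmean(depths):.3f} cm  vs l_abs        = {l_abs:.3f} cm")
print(f"median {statistics.median(depths):.3f} cm  vs ln(2) l_abs = {math.log(2) * l_abs:.3f} cm")
```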

UPDATE: See the section below for the numerical approach. As it turns out, the analytical approach above is unfortunately not correct, for 3 important reasons (2 of which we were aware of):

- It does not include the axion spectrum, which shifts the location of the mean slightly.

- It implicitly assumes that X-rays of all energies are detected. This implies an infinitely long detector rather than our detector with a gas volume of 3 cm length! This skews the actual mean to lower values, because the X-rays that are detected convert at smaller depths.

- Point 2 means not only that some X-rays are not detected. Effectively, it gives energies that are absorbed with certainty a higher weight than energies that sometimes pass through without being absorbed! This further reduces the mean. It can be accounted for by reducing the input flux at each energy by the absorption probability, i.e. the above needs to be multiplied by the absorption probability to be more correct. Yet this still does not make it completely right. It only reduces the fraction of photons of a given energy, but does not ensure that all detected photons have conversion depths consistent with a 3 cm long volume!

- (minor) It does not include isobutane.
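Points 2 and 3 can both be handled analytically for a gas volume of depth \(D\). An energy with absorption length \(l\) is detected with probability \(1 - e^{-D/l}\). The detected photons of that energy have a mean depth of \(l - D e^{-D/l} / (1 - e^{-D/l})\). Weighting each energy by its flux times its absorption probability then gives the mean depth of the detected X-rays. Here is a minimal sketch of that idea (helper names illustrative). It assumes a uniform, pure argon volume of \(D = \SI{3}{cm}\) (no isobutane) and made-up weights, and it is not the numerical approach mentioned in the update.

```python
import math

def absorption_probability(l_abs, D):
    """Probability that a photon converts within depth D."""
    return -math.expm1(-D / l_abs)

def truncated_mean_depth(l_abs, D):
    """Mean conversion depth of the photons that do convert within [0, D]."""
    p = absorption_probability(l_abs, D)
    return l_abs - D * math.exp(-D / l_abs) / p

def mean_detected_depth(weights, l_abs_values, D):
    """Flux-weighted mean depth of *detected* photons (weights = flux per energy bin)."""
    w_det = [w * absorption_probability(l, D) for w, l in zip(weights, l_abs_values)]
    means = [truncated_mean_depth(l, D) for l in l_abs_values]
    return sum(wd * m for wd, m in zip(w_det, means)) / sum(w_det)

# toy example: two equally populated energies with l_abs = 0.5 cm and 5 cm, D = 3 cm
naive = (0.5 + 5.0) / 2
print(naive, mean_detected_depth([1.0, 1.0], [0.5, 5.0], 3.0))
```

In the toy example the naive weighted mean of the absorption lengths is 2.75 cm. The mean depth of the detected photons is below 1 cm, which illustrates how strongly points 2 and 3 pull the result down.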

A (shortened and) improved version of the above (but still not quite correct!):