Table of Contents

This is a subsection from file:///home/basti/org/Doc/StatusAndProgress.html.

1. Average distance X-rays travel in Argon at CAST conditions

In order to be able to compute the correct distance to use in the raytracer for the position of the axion image, we need a good understanding of where the average X-ray will convert in the gas.

By combining the expected axion flux (or rather that folded with the telescope and window transmission to get the correct energy distribution) with the absorption length of X-rays at different energies we can compute a weighted mean of all X-rays and come up with a single number.

For that reason we wrote xrayAttenuation.

Let's give it a try.

1.1. Analytical approach

import xrayAttenuation, ggplotnim, unchained # 1. read the file containing efficiencies var effDf = readCsv("/home/basti/org/resources/combined_detector_efficiencies.csv") .mutate(f{"NoGasEff" ~ idx("300nm SiN") * idx("20nm Al") * `LLNL`}) # 2. compute the absorption length for Argon let ar = Argon.init() let ρ_Ar = density(1050.mbar.to(Pascal), 293.K, ar.molarMass) effDf = effDf .filter(f{idx("Energy [keV]") > 0.05}) .mutate(f{float: "l_abs" ~ absorptionLength(ar, ρ_Ar, idx("Energy [keV]").keV).float}) # compute the weighted mean of the effective flux behind the window with the # absorption length, i.e. # `<x> = Σ_i (ω_i x_i) / Σ_i ω_i` let weightedMean = (effDf["NoGasEff", float] *. effDf["l_abs", float]).sum() / effDf["NoGasEff", float].sum() echo "Weighted mean of distance: ", weightedMean.Meter.to(cm) # for reference the effective flux: ggplot(effDf, aes("Energy [keV]", "NoGasEff")) + geom_line() + ggsave("/tmp/combined_efficiency_no_gas.pdf") ggplot(effDf, aes("Energy [keV]", "l_abs")) + geom_line() + ggsave("/tmp/absorption_length_argon_cast.pdf")

This means the "effective" position of the axion image should be 0.0122 m or 1.22 cm in the detector. This is (fortunately) relatively close to the 1.5 cm (center of the detector) that we used so far.

[X]Is the above even correct? The absorption length describes the distance at which only 1/e particles are left. That means at that distance (1 - 1/e) have disappeared. To get a number don't we need to do a monte carlo (or some kind of integral) of the average? -> Well, the mean of an exponential distribution is 1/λ (if defined as \(\exp(-λx)\)!), from that point of view I think the above is perfectly adequate! Note however that the median of the distribution is \(\frac{\ln 2}{λ}\)! When looking at the distribution of our transverse RMS values for example the peak corresponds to something that is closer to the median (but is not exactly the median either; the peak is the 'mode' of the distribution). Arguably more interesting is the cutoff we see in the data as that corresponds to the largest possible diffusion (but again that is being folded with the statistics of getting a larger RMS! :/ )

UPDATE: See the section below for the numerical approach. As it turns out the above unfortunately is not correct for 3 important reasons (2 of which we were aware of):

- It does not include the axion spectrum, it changes the location of the mean slightly.

- It implicitly assumes all X-rays of all energies will be detected. This implies an infinitely long detector and not our detector limited by a length of 3 cm! This skews the actual mean to lower values, because the mean of those that are detected are at smaller values.

- Point 2 implies not only that some X-rays won't be detected, but effectively it gives a higher weight to energies that are absorbed with certainty compared to those that sometimes are not absorbed! This further reduces the mean. This can be interpreted as reducing the input flux by the percentage of the absorption probability for each energy. In this sense the above needs to be multiplied by the absorption probability to be more correct! Yet this still does not make it completely right, as that just assumes the fraction of photons of a given energy are reduced, but not that all detected ones have lengths consistent with a 3cm long volume!

- (minor) does not include isobutane.

A (shortened and) improved version of the above (but still not quite correct!):

import xrayAttenuation, ggplotnim, unchained # 1. read the file containing efficiencies var effDf = readCsv("/home/basti/org/resources/combined_detector_efficiencies.csv") .mutate(f{"NoGasEff" ~ idx("300nm SiN") * idx("20nm Al") * `LLNL` * idx("30mm Ar Abs.")}) # 2. compute the absorption length for Argon let ar = Argon.init() let ρ_Ar = density(1050.mbar.to(Pascal), 293.K, ar.molarMass) effDf = effDf.filter(f{idx("Energy [keV]") > 0.05}) .mutate(f{float: "l_abs" ~ absorptionLength(ar, ρ_Ar, idx("Energy [keV]").keV).float}) let weightedMean = (effDf["NoGasEff", float] *. effDf["l_abs", float]).sum() / effDf["NoGasEff", float].sum() echo "Weighted mean of distance: ", weightedMean.Meter.to(cm)

We could further multiply in the axion flux of course, but as this cannot be fully correct in this way, we'll do it numerically. We would have to calculate the real mean of the exponential distribution for each energy based on the truncated exponential distribution. Effectively we have a bonded exponential between 0 and 3 cm, whose mean is of course going to differ from the parameter \(λ\).

1.2. Numerical approach

Let's write a version of the above code that computes the result by sampling from the exponential distribution for the conversion point.

What we need:

- our sampling logic

- sampling from exponential distribution depending on energy

- the axion flux

Let's start by importing the modules we need:

import helpers / sampling_helper # sampling distributions import unchained # sane units import ggplotnim # see something! import xrayAttenuation # window efficiencies import math, sequtils

where the sampling_helpers is a small module to sample from a

procedure or a sequence.

In addition let's define some helpers:

from os import `/` const ResourcePath = "/home/basti/org/resources" const OutputPath = "/home/basti/org/Figs/statusAndProgress/axion_conversion_point_sampling/"

Now let's read the LLNL telescope efficiency as well as the axion flux model. Note that we may wish to calculate the absorption points not only for a specific axion flux model, but potentially any other kind of signal. We'll build in functionality to disable different contributions.

let dfAx = readCsv(ResourcePath / "solar_axion_flux_differential_g_ae_1e-13_g_ag_1e-12_g_aN_1e-15.csv") .filter(f{`type` == "Total flux"}) let dfLLNL = readCsv(ResourcePath / "llnl_xray_telescope_cast_effective_area_parallel_light_DTU_thesis.csv") .mutate(f{"Efficiency" ~ idx("EffectiveArea[cm²]") / (PI * 2.15 * 2.15)})

Note: to get the differential axion flux use readOpacityFile from

https://github.com/jovoy/AxionElectronLimit. It generates the CSV

file.

Next up we need to define the material properties of the detector window in order to compute its transmission.

let Si₃N₄ = compound((Si, 3), (N, 4)) # actual window const ρSiN = 3.44.g•cm⁻³ const lSiN = 300.nm # window thickness let Al = Aluminium.init() # aluminium coating const ρAl = 2.7.g•cm⁻³ const lAl = 20.nm # coating thickness

With these numbers we can compute the transmission at an arbitrary energy. In order to compute the correct inputs for the calculation we now have everything. We wish to compute the following, the intensity \(I(E)\) is the flux that enters the detector

\[ I(E) = f(E) · ε_{\text{LLNL}} · ε_{\ce{Si3.N4}} · ε_{\ce{Al}} \]

where \(f(E)\) is the solar axion flux and the \(ε_i\) are the efficiencies associated with the telescope and transmission of the window. The idea is to sample from this intensity distribution to get a realistic set of X-rays as they would be experienced in the experiment. One technical aspect still to be done is an interpolation of the axion flux and LLNL telescope efficiency to evaluate the data at an arbitrary energy as to define a function that yields \(I(E)\).

Important note: We fully neglect here the conversion probability and area of the magnet bore. These (as well as a potential time component) are purely constants and do not affect the shape of the distribution \(I(E)\). We want to sample from it to get the correct weighting of the different energies, but do not care about absolute numbers. So differential fluxes are fine.

The idea is to define the interpolators and then create a procedure that captures the previously defined properties and interpolators.

from numericalnim import newLinear1D, eval let axInterp = newLinear1D(dfAx["Energy", float].toSeq1D, dfAx["diffFlux", float].toSeq1D) let llnlInterp = newLinear1D(dfLLNL["Energy[keV]", float].toSeq1D, dfLLNL["Efficiency", float].toSeq1D)

With the interpolators defined let's write the implementation for \(I(E)\):

proc I(E: keV): float = ## Compute the intensity of the axion flux after telescope & window eff. ## ## Axion flux and LLNL efficiency can be disabled by compiling with ## `-d:noAxionFlux` and `-d:noLLNL`, respectively. result = transmission(Si₃N₄, ρSiN, lSiN, E) * transmission(Al, ρAl, lAl, E) when not defined(noAxionFlux): result *= axInterp.eval(E.float) when not defined(noLLNL): result *= llnlInterp.eval(E.float)

Let's test it and see what we get for e.g. \(\SI{1}{keV}\):

echo I(1.keV)

yields \(1.249e20\). Not the most insightful, but it seems to work. Let's plot it:

let energies = linspace(0.01, 10.0, 1000).mapIt(it.keV) let Is = energies.mapIt(I(it)) block PlotI: let df = toDf({ "E [keV]" : energies.mapIt(it.float), "I" : Is }) ggplot(df, aes("E [keV]", "I")) + geom_line() + ggtitle("Intensity entering the detector gas") + ggsave(OutputPath / "intensity_axion_conversion_point_simulation.pdf")

shown in fig. 1. It looks exactly as we would expect.

Figure 1: Intensity that enters the detector taking into account LLNL telescope and window efficiencies as well as the solar axion flux

Now we define the sampler for the intensity distribution \(I(E)\), which returns an energy weighted by \(I(E)\):

let Isampler = sampler( (proc(x: float): float = I(x.keV)), # wrap `I(E)` to take `float` 0.01, 10.0, num = 1000 # use 1000 points for EDF & sample in 0.01 to 10 keV )

and define a random number generator:

import random var rnd = initRand(0x42)

First we will sample 100,000 energies from the distribution to see if we recover the intensity plot from before.

block ISampled: const nmc = 100_000 let df = toDf( {"E [keV]" : toSeq(0 ..< nmc).mapIt(rnd.sample(Isampler)) }) ggplot(df, aes("E [keV]")) + geom_histogram(bins = 200, hdKind = hdOutline) + ggtitle("Energies sampled from I(E)") + ggsave(OutputPath / "energies_intensity_sampled.pdf")

This yields fig. 2, which clearly shows the sampling works as intended.

Figure 2: Energies sampled from the distribution \(I(E)\) using 100k samples. The shape is nicely reproduced, here plotted using a histogram of 200 bins.

The final piece now is to use the same sampling logic to generate energies according to \(I(E)\), which correspond to X-rays of said energy entering the detector. For each of these energies then sample from the Beer-Lambert law

\[ I(z) = I_0 \exp\left[ - \frac{z}{l_{\text{abs}} } \right] \] where \(I_0\) is some initial intensity and \(l_\text{abs}\) the absorption length. The absorption length is computed from the gas mixture properties of the gas used at CAST, namely Argon/Isobutane 97.7/2.3 at \(\SI{1050}{mbar}\). It is the inverse of the attenuation coefficient \(μ_M\)

\[ l_{\text{abs}} = \frac{1}{μ_M} \]

where the attenuation coefficient is computed via

\[ μ_m = \frac{N_A}{M * σ_A} \]

with \(N_A\) Avogadro's constant, \(M\) the molar mass of the compound and \(σ_A\) the atomic absorption cross section. The latter again is defined by

\[ σ_A = 2 r_e λ f₂ \]

with \(r_e\) the classical electron radius, \(λ\) the wavelength of the

X-ray and \(f₂\) the second scattering factor. Scattering factors are

tabulated for different elements, for example by NIST and Henke. For a

further discussion of this see the README and implementation of

xrayAttenuation.

We will now go ahead and define the CAST gas mixture:

proc initCASTGasMixture(): GasMixture = ## Returns the absorption length for the given energy in keV for CAST ## gas conditions: ## - Argon / Isobutane 97.7 / 2.3 % ## - 20°C ( for this difference in temperature barely matters) let arC = compound((Ar, 1)) # need Argon gas as a Compound let isobutane = compound((C, 4), (H, 10)) # define the gas mixture result = initGasMixture(293.K, 1050.mbar, [(arC, 0.977), (isobutane, 0.023)]) let gm = initCASTGasMixture()

To sample from the Beer-Lambert law with a given absorption length we also define a helper that returns a sampler for the target energy using the definition of a normalized exponential distribution

\[ f_e(x, λ) = \frac{1}{λ} \exp \left[ -\frac{x}{λ} \right] \]

The sampling of the conversion point is the crucial aspect of this. Naively we might want to sample between the detector volume from 0 to \(\SI{3}{cm}\). However, this skews our result. Our calculation depends on the energy distribution of the incoming X-rays. If the absorption length is long enough the probability of reaching the readout plane and thus not being detected is significant. Restricting the sampler to \(\SI{3}{cm}\) would pretend that independent of absorption length we would always convert within the volume, giving too large a weight to the energies that should sometimes not be detected!

Let's define the sampler now. It takes the gas mixture and the target

energy. A constant SampleTo is defined to adjust the position to

which we sample at compile time (to play around with different numbers).

proc generateSampler(gm: GasMixture, targetEnergy: keV): Sampler = ## Generate the exponential distribution to sample from based on the ## given absorption length # `xrayAttenuation` `absorptionLength` returns number in meter! let λ = absorptionLength(gm, targetEnergy).to(cm) let fnSample = (proc(x: float): float = result = expFn(x, λ.float) # expFn = 1/λ · exp(-x/λ) ) const SampleTo {.intdefine.} = 20 ## `SampleTo` can be set via `-d:SampleTo=<int>` let num = (SampleTo.float / 3.0 * 1000).round.int # number of points to sample at result = sampler(fnSample, 0.0, SampleTo, num = num)

Note that this is inefficient, because we generate a new sampler from which we only sample a single point, namely the conversion point of that X-ray. If one intended to perform a more complex calculation or wanted to sample orders of magnitude more X-rays, one should either restructure the code (i.e. sample from known energies and then reorder based on the weight defined by \(I(E)\) or cache the samplers and pre-bin the energies.

For reference let's compute the absorption length as a function of energy for the CAST gas mixture:

block GasAbs: let df = toDf({ "E [keV]" : linspace(0.03, 10.0, 1000), "l_abs [cm]" : linspace(0.03, 10.0, 1000).mapIt(absorptionLength(gm, it.keV).m.to(cm).float) }) ggplot(df, aes("E [keV]", "l_abs [cm]")) + geom_line() + ggtitle("Absorption length of X-rays in CAST gas mixture: " & $gm) + margin(top = 1.5) + ggsave(OutputPath / "cast_gas_absorption_length.pdf")

which yields fig. 3

Figure 3: Absorption length in the CAST gas mixture as a function of X-ray energy.

So, finally: let's write the MC sampling!

const nmc = 500_000 # start with 100k samples var Es = newSeqOfCap[keV](nmc) var zs = newSeqOfCap[cm](nmc) while zs.len < nmc: # 1. sample an energy according to `I(E)` let E = rnd.sample(Isampler).keV # 2. get the sampler for this energy let distSampler = generateSampler(gm, E) # 3. sample from it var z = Inf.cm when defined(Equiv3cmSampling): ## To get the same result as directly sampling ## only up to 3 cm use the following code while z > 3.0.cm: z = rnd.sample(distSampler).cm elif defined(UnboundedVolume): ## This branch pretends the detection volume ## is unbounded if we sample within 20cm z = rnd.sample(distSampler).cm else: ## This branch is the physically correct one. If an X-ray reaches the ## readout plane it is _not_ recorded, but it was still part of the ## incoming flux! z = rnd.sample(distSampler).cm if z > 3.0.cm: continue # just drop this X-ray zs.add z Es.add E

Great, now we have sampled the conversion points according to the correct intensity. We can now ask for statistics or create different plots (e.g. conversion point by energies etc.).

import stats, seqmath # mean, variance and percentile let zsF = zs.mapIt(it.float) # for math echo "Mean conversion position = ", zsF.mean().cm echo "Median conversion position = ", zsF.percentile(50).cm echo "Variance of conversion position = ", zsF.variance().cm

This prints the following:

Mean conversion position = 0.556813 cm Median conversion position = 0.292802 cm Variance of conversion position = 0.424726 cm

As we can see (unfortunately) our initial assumption of a mean distance of \(\SI{1.22}{cm}\) are quite of the mark. The more realistic number is only \(\SI{0.56}{cm}\). And if we were to use the median it's only \(\SI{0.29}{cm}\).

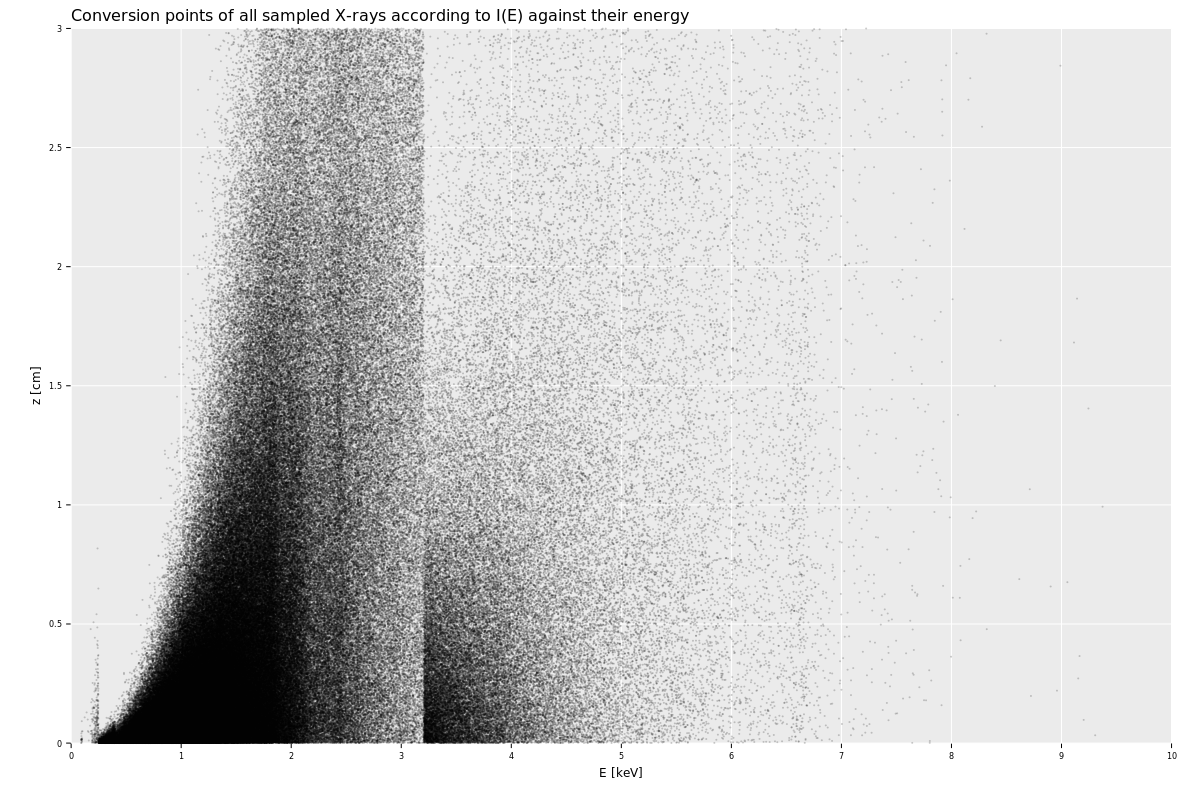

Let's plot the conversion points of all sampled (and recorded!) X-rays as well as what their distribution against energy looks like.

let dfZ = toDf({ "E [keV]" : Es.mapIt(it.float), "z [cm]" : zs.mapIt(it.float) }) ggplot(dfZ, aes("z [cm]")) + geom_histogram(bins = 200, hdKind = hdOutline) + ggtitle("Conversion points of all sampled X-rays according to I(E)") + ggsave(OutputPath / "sampled_axion_conversion_points.pdf") ggplot(dfZ, aes("E [keV]", "z [cm]")) + geom_point(size = 1.0, alpha = 0.2) + ggtitle("Conversion points of all sampled X-rays according to I(E) against their energy") + ggsave(OutputPath / "sampled_axion_conversion_points_vs_energy.png", width = 1200, height = 800)

The former is shown in fig. 4. The overlapping exponential distribution is obvious, as one would expect. The same data is shown in fig. 5, but in this case not as a histogram, but by their energy as a scatter plot. We can clearly see the impact of the absorption length on the conversion points for each energy!

Figure 4: Distribution of the conversion points of all sampled X-rays for which conversion in the detector took place as sampled from \(I(E)\).

Figure 5: Distribution of the conversion points of all sampled X-rays for which conversion in the detector took place as sampled from \(I(E)\) as a scatter plot against the energy for each X-ray.

1.3. Compiling and running the code

The code above is written in literate programming style. To compile

and run it we use ntangle to extract it from the Org file:

ntangle <file>

which generates file:///tmp/sample_axion_xrays_conversion_points.nim.

Compiling and running it can be done via:

nim r -d:danger /tmp/sample_axion_xrays_conversion_points.nim

which compiles and runs it as an optimized build.

We have the following compilation flags to compute different cases:

-d:noLLNL: do not include the LLNL efficiency into the input intensity-d:noAxionFlux: do not include the axion flux into the input intensity-d:SampleTo=<int>: change to where we sample the position (only to 3cm for example)-d:UnboundedVolume: if used together with the defaultSampleTo(or any large value) will effectively compute the case of an unbounded detection volume (i.e. every X-ray recorded with 100% certainty).-d:Equiv3cmSampling: Running this with the defaultSampleTo(or any large value) will effectively change the sampling to a maximum \SI{3}{cm} sampling. This can be used as a good crossheck to verify the sampling behavior is independent of the sampling range.

Configurations of note:

nim r -d:danger -d:noAxionFlux /tmp/sample_axion_xrays_conversion_points.nim

\(⇒\) realistic case for a flat input spectrum Yields:

Mean conversion position = 0.712102 cm Median conversion position = 0.445233 cm Variance of conversion position = 0.528094 cm

nim r -d:danger -d:noAxionFlux -d:UnboundedVolume /tmp/sample_axion_xrays_conversion_points.nim

\(⇒\) the closest analogue to the analytical calculation from section 1.1 (outside of including isobutane here) Yields:

Mean conversion position = 1.25789 cm Median conversion position = 0.560379 cm Variance of conversion position = 3.63818 cm

nim r -d:danger /tmp/sample_axion_xrays_conversion_points.nim

\(⇒\) the case we most care about and of which the numbers are mentioned in the text above.