1. Journal (day to day) extended

1.1. [1/1]

- [X] arraymancer NN DSL PR

1.2. [3/5]

- [X] write mail to CAST PC about talk at Patras.
- [ ] implement MCMC multiple chains starting & mean value of them for limit calculation
- [ ] fix segfault when multithreading
- [X] compute correct "depth" for raytracing focal spot
- [X] split window strongback from signal & position uncertainty. Implemented the axion image w/o window & strongback separately. Still has to be implemented into the limit calc.

1.3. [2/4]

- [X] implement MCMC multiple chains starting & mean value of them for limit calculation
- [ ] fix segfault when multithreading
- [X] implement strongback / signal split into limit calc
- [ ] Timepix3 background rate!

1.4.

Questions for meeting with Klaus today:

- Did you hear something from Igor? -> Nope he hasn't either. Apparently Igor is very busy currently. But Klaus doesn't think there will be any showstoppers regarding making the data available.

- For reference distributions and logL morphing:

We morph bin wise on pre-binned data. This leads to jumps in the logL cut value.

Maybe it is a good idea after all to not use a histogram, but a smooth KDE? Unbinned is not directly possible, because we don't have data to compute an unbinned distribution for everything outside the main fluorescence lines! -> Klaus had a good idea here: We can estimate the systematic effect of our binning by moving the bin edges by half a bin width to the left / right and computing the expected limit based on these. If the expected limit changes, we know there is some systematic effect going on. More likely though, the expected limit remains unchanged (within variance) and therefore the systematic impact is smaller than the variance of the limit.
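A minimal sketch of the bin-shift idea, assuming NumPy and a toy sample standing in for a reference distribution (the function name, the toy data and the binning are made up for illustration; the expected limit calculation itself is not shown). Each of the three histograms would be fed through the limit code and the resulting expected limits compared:

```python
import numpy as np

def shifted_histograms(values, low, high, n_bins):
    """Histogram `values` with the nominal bin edges and with all edges
    shifted by half a bin width to the left and to the right."""
    edges = np.linspace(low, high, n_bins + 1)
    half = 0.5 * (edges[1] - edges[0])
    result = {}
    for name, shift in (("nominal", 0.0), ("left", -half), ("right", half)):
        counts, used_edges = np.histogram(values, bins=edges + shift)
        result[name] = (counts, used_edges)
    return result

rng = np.random.default_rng(42)
# Toy stand-in for a reference distribution (NOT real CDL data).
toy = rng.normal(loc=10.0, scale=2.0, size=10_000)
hists = shifted_histograms(toy, low=0.0, high=20.0, n_bins=40)
```

If the limits from the three binnings agree within their variance, the binning systematic can be considered subdominant.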

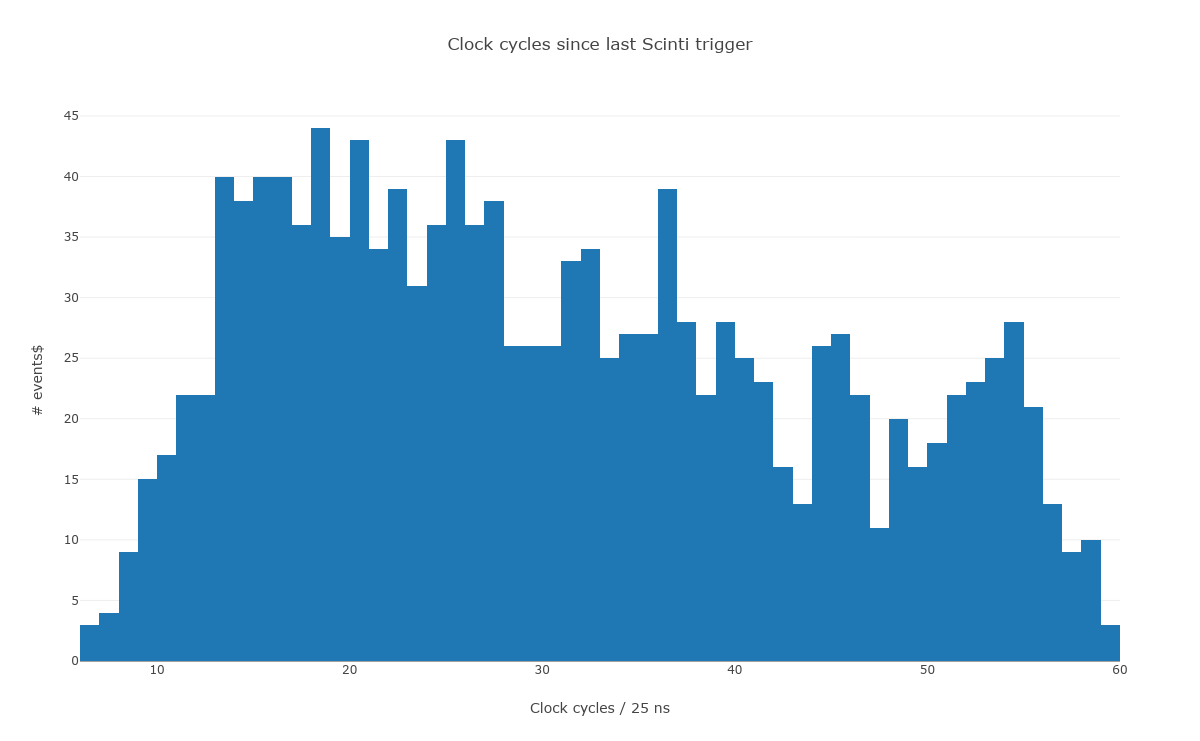

About septem veto and line veto: What to do with random coincidences? Is it honest to use those clusters? -> Klaus had an even better idea here: we can estimate the dead time by doing the following:

- read full septemboard data

- shuffle center + outer chip event data around such that we know the two are not correlated

- compute the efficiency of the septem veto.

In theory 0% of all events should trigger either the septem or the line veto. The percentage that does anyway is our random coincidence!
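The shuffling procedure above could be sketched as follows. This is only an illustration under assumptions: events are represented by toy arrays, and `veto` is a hypothetical stand-in for the real septem / line veto decision, not the TimepixAnalysis implementation.

```python
import numpy as np

def random_coincidence_rate(center, outer, veto, rng):
    """Fraction of decorrelated (center, outer) pairs that still fire the veto."""
    n = len(center)
    if n < 2:
        raise ValueError("need at least two events to decorrelate")
    perm = rng.permutation(n)
    # Rolling the permutation by one guarantees perm[i] != partner[i], so no
    # center event is ever paired with its own outer-chip data.
    partner = np.roll(perm, 1)
    vetoed = sum(bool(veto(center[i], outer[j])) for i, j in zip(perm, partner))
    return vetoed / n

# Toy data (hypothetical): the "outer" entry is the number of outer-chip
# pixels hit in that event; 300 of 1000 events have outer activity.
rng = np.random.default_rng(7)
center = np.arange(1000)
outer = np.array([5] * 300 + [0] * 700)
toy_veto = lambda c, o: o > 0  # stand-in for the septem / line veto decision
rate = random_coincidence_rate(center, outer, toy_veto, rng)
```

With real data the returned fraction would be the random coincidence rate, i.e. the dead time induced by the veto.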

1.5.

All files that were in /tmp/playground (/t/playground) referenced here and in the meeting notes are backed up in ~/development_files/07_03_2023/playground (to make sure we do not lose anything / for reference, to recreate some in-development behavior etc.).

This is just because I'll likely soon shut down the computer for the first time in 26 days and am not sure whether everything from there was backed up. I believe so, but who knows.

1.6.

Let's rerun the likelihood after adding the tracking information back to the H5 files and fixing how the total duration is calculated from the data files.

Previously we used the total duration in every case, even when excluding tracking information and thus having less time in actuality. 'Fortunately', all background rate plots in the thesis as of today ran without any tracking info in the H5 files, meaning they include the solar tracking itself. Therefore the total duration is correct in those cases.

Run-2 testing (all vetoes):

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/playground/test_run2.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --lineveto --scintiveto --fadcveto --septemveto \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

Run-3 testing (all vetoes):

likelihood -f ~/CastData/data/DataRuns2018_Reco.h5 \
    --h5out /tmp/playground/test_run3.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --lineveto --scintiveto --fadcveto --septemveto \
    --calibFile ~/CastData/data/CalibrationRuns2018_Reco.h5

The likelihood outputs are here: ./resources/background_rate_test_correct_time_no_tracking/

Background:

plotBackgroundRate \
    /tmp/playground/test_run2.h5 \
    /tmp/playground/test_run3.h5 \
    --combName 2017/18 \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, all vetoes" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_crGold_all_vetoes.pdf \
    --outpath /tmp/playground/ \
    --quiet

The number coming out (see title of the generated plot) is now 3158.57 h, which matches our (new :( ) expectation.

The generated plot is also in the same directory: ./resources/background_rate_test_correct_time_no_tracking/background_rate_crGold_all_vetoes.pdf

- [X] Rerun writeRunList and update the statusAndProgress and thesis tables about times!
- [ ] Update data in thesis!

Run-2:

./writeRunList -b ~/CastData/data/DataRuns2017_Reco.h5 -c ~/CastData/data/CalibrationRuns2017_Reco.h5

Type: rtBackground
total duration: 14 weeks, 6 days, 11 hours, 25 minutes, 59 seconds, 97 milliseconds, 615 microseconds, and 921 nanoseconds
In hours: 2507.433082670833
active duration: 2238.783333333333
trackingDuration: 4 days, 10 hours, and 20 seconds
In hours: 106.0055555555556
active tracking duration: 94.12276972527778
nonTrackingDuration: 14 weeks, 2 days, 1 hour, 25 minutes, 39 seconds, 97 milliseconds, 615 microseconds, and 921 nanoseconds
In hours: 2401.427527115278
active background duration: 2144.666241943055

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 106.006 | 2401.43 | 94.1228 | 2144.67 | 2507.43 | 2238.78 |

Type: rtCalibration
total duration: 4 days, 11 hours, 25 minutes, 20 seconds, 453 milliseconds, 596 microseconds, and 104 nanoseconds
In hours: 107.4223482211111
active duration: 2.601388888888889
trackingDuration: 0 nanoseconds
In hours: 0.0
active tracking duration: 0.0
nonTrackingDuration: 4 days, 11 hours, 25 minutes, 20 seconds, 453 milliseconds, 596 microseconds, and 104 nanoseconds
In hours: 107.4223482211111
active background duration: 2.601391883888889

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 0 | 107.422 | 0 | 2.60139 | 107.422 | 2.60139 |

Run-3:

./writeRunList -b ~/CastData/data/DataRuns2018_Reco.h5 -c ~/CastData/data/CalibrationRuns2018_Reco.h5

Type: rtBackground
total duration: 7 weeks, 23 hours, 13 minutes, 35 seconds, 698 milliseconds, 399 microseconds, and 775 nanoseconds
In hours: 1199.226582888611
active duration: 1079.598333333333
trackingDuration: 3 days, 2 hours, 17 minutes, and 53 seconds
In hours: 74.29805555555555
active tracking duration: 66.92306679361111
nonTrackingDuration: 6 weeks, 4 days, 20 hours, 55 minutes, 42 seconds, 698 milliseconds, 399 microseconds, and 775 nanoseconds
In hours: 1124.928527333056
active background duration: 1012.677445774444

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 74.2981 | 1124.93 | 66.9231 | 1012.68 | 1199.23 | 1079.6 |

Type: rtCalibration
total duration: 3 days, 15 hours, 3 minutes, 47 seconds, 557 milliseconds, 131 microseconds, and 279 nanoseconds
In hours: 87.06321031416667
active duration: 3.525555555555556
trackingDuration: 0 nanoseconds
In hours: 0.0
active tracking duration: 0.0
nonTrackingDuration: 3 days, 15 hours, 3 minutes, 47 seconds, 557 milliseconds, 131 microseconds, and 279 nanoseconds
In hours: 87.06321031416667
active background duration: 3.525561761944445

| Solar tracking [h] | Background [h] | Active tracking [h] | Active background [h] | Total time [h] | Active time [h] |
|---|---|---|---|---|---|
| 0 | 87.0632 | 0 | 3.52556 | 87.0632 | 3.52556 |
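As a quick cross check of the "In hours" values printed by writeRunList, the Run-2 background durations can be converted by hand. This is just a sketch of the arithmetic (the helper `to_hours` is made up here and is not part of writeRunList); the sub-second parts are folded into the seconds:

```python
# Hours per unit of each duration component.
HOURS = {"weeks": 168.0, "days": 24.0, "hours": 1.0,
         "minutes": 1 / 60, "seconds": 1 / 3600}

def to_hours(**parts):
    """Convert duration components (weeks=..., days=..., ...) to hours."""
    return sum(HOURS[unit] * value for unit, value in parts.items())

# Run-2, rtBackground (97 ms, 615 us, 921 ns = 0.097615921 s).
run2_total = to_hours(weeks=14, days=6, hours=11, minutes=25, seconds=59.097615921)
run2_tracking = to_hours(days=4, hours=10, seconds=20)
run2_non_tracking = to_hours(weeks=14, days=2, hours=1, minutes=25, seconds=39.097615921)

print(f"total:        {run2_total:.6f} h")
print(f"tracking:     {run2_tracking:.6f} h")
print(f"non-tracking: {run2_non_tracking:.6f} h")
```

The tracking and non-tracking durations add up to the total duration, as they should.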

- [X] Rerun the createAllLikelihoodCombinations now that tracking information is there. -> Currently running (Update: We could now combine the below with the one further down that excludes the FADC!).

./createAllLikelihoodCombinations \
    --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
    --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
    --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
    --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
    --regions crGold --regions crAll \
    --vetoSets "{fkScinti, fkFadc, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \
    --fadcVetoPercentiles 0.9 --fadcVetoPercentiles 0.95 --fadcVetoPercentiles 0.99 \
    --out /t/lhood_outputs_adaptive_fadc \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --multiprocessing

Found here: ./resources/lhood_limits_automation_correct_duration/

- [ ] Now generate the other likelihood outputs we need for more expected limit cases from sec. [BROKEN LINK: sec:meetings:10_03_23] in StatusAndProgress:
  - [ ] Calculate expected limits also for the following cases:
    - [X] Septem, line combinations without the FADC
    - [ ] Best case (lowest row of below) with lnL efficiencies of:
      - [ ] 0.7
      - [ ] 0.9

The former (septem, line without FADC) will be done using createAllLikelihoodCombinations:

./createAllLikelihoodCombinations \
    --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
    --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
    --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
    --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
    --regions crGold --regions crAll \
    --vetoSets "{+fkScinti, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \
    --out /t/lhood_outputs_adaptive_fadc \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --multiprocessing

For simplicity, this will regenerate some of the files already generated (i.e. the no vetoes & the scinti case)

These files are also found here: ./resources/lhood_limits_automation_correct_duration/ together with a rerun of the regular cases above.

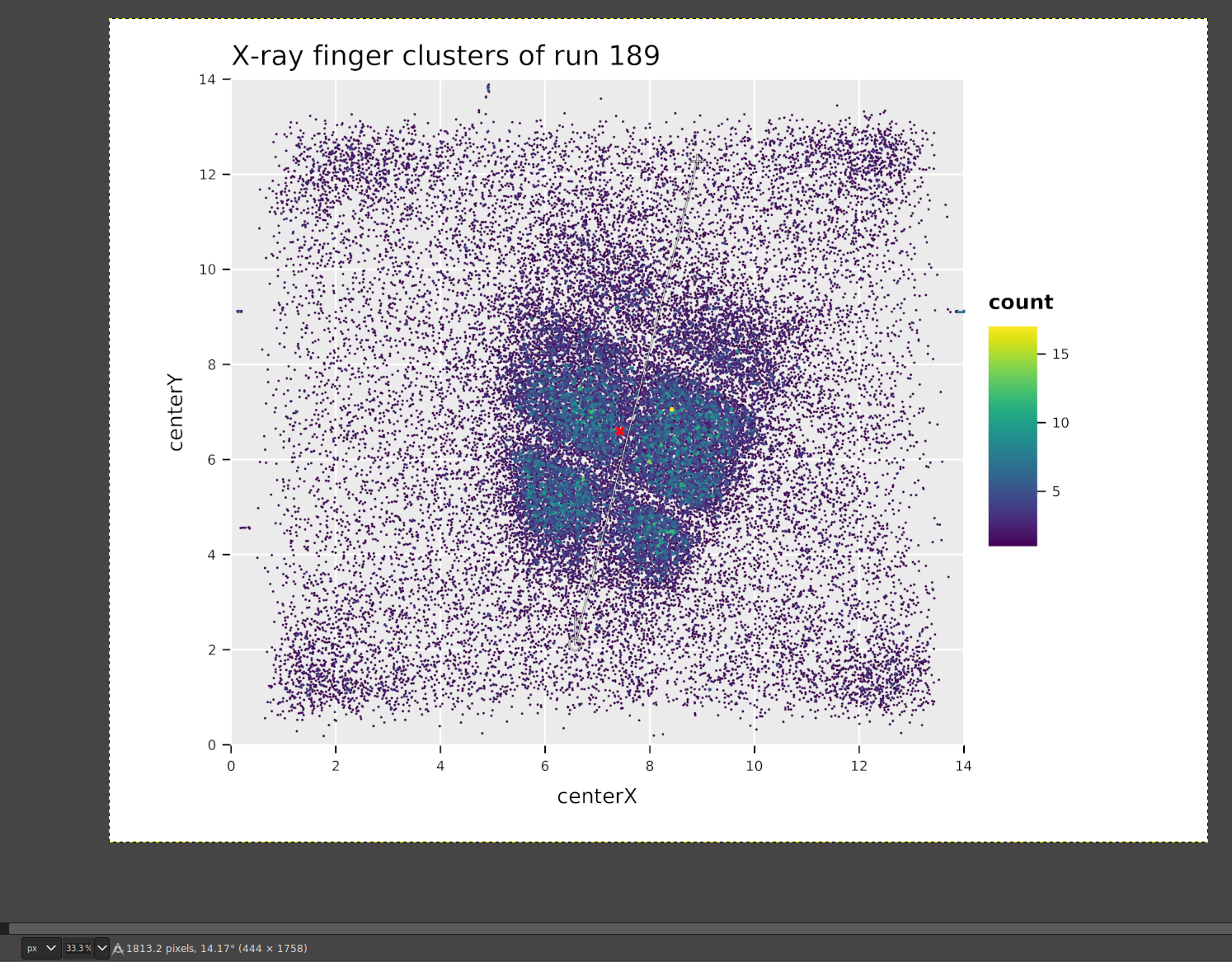

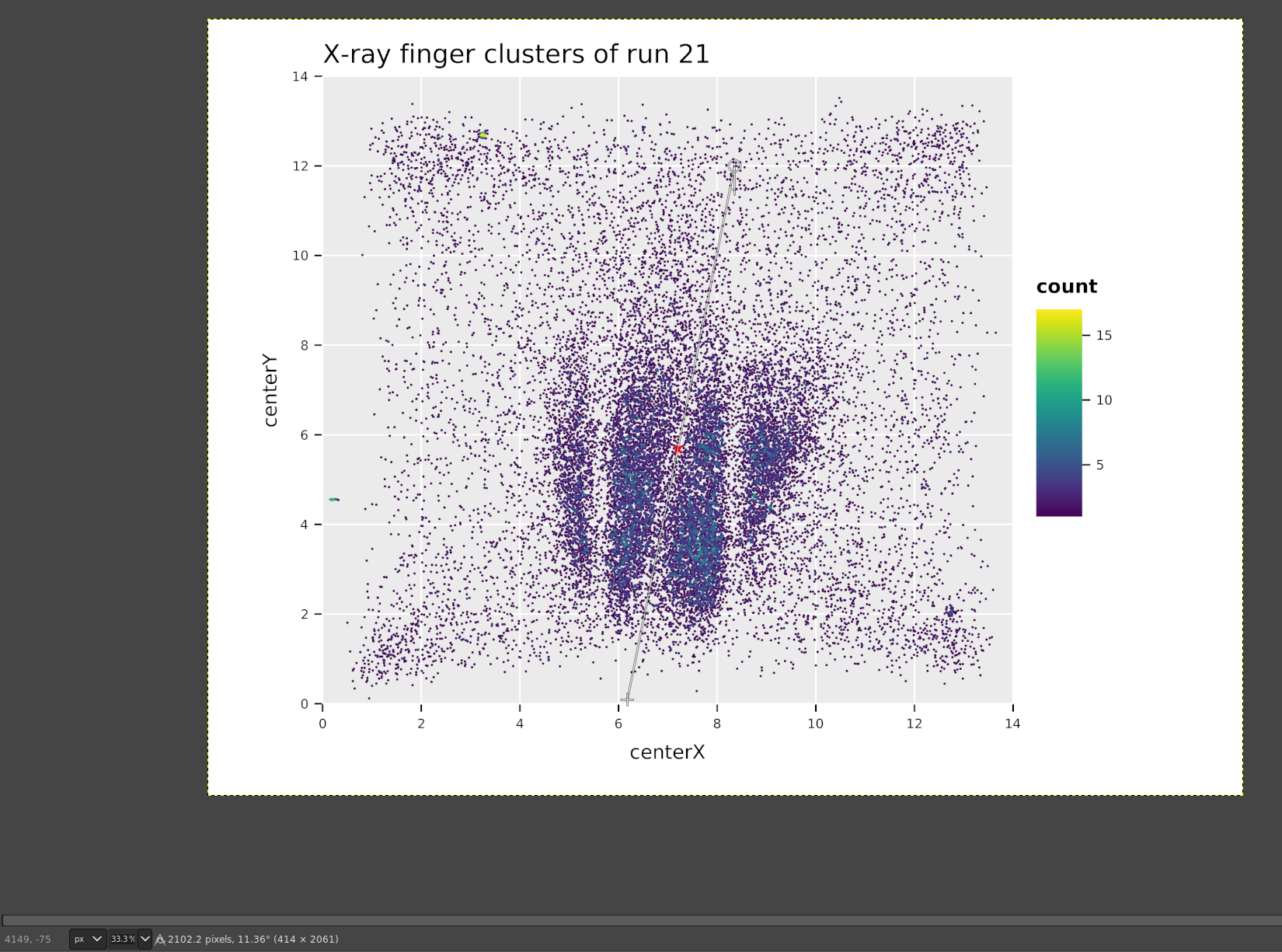

Plot the background clusters to see if we indeed have less over the whole chip.

plotBackgroundClusters \
    /t/lhood_outputs_adaptive_duplicated_fadc_stuff/likelihood_cdl2018_Run2_crAll_scinti_vetoPercentile_0.99_fadc_vetoPercentile_0.99_septem_vetoPercentile_0.99_line_vetoPercentile_0.99.h5 \
    /t/lhood_outputs_adaptive_duplicated_fadc_stuff/likelihood_cdl2018_Run3_crAll_scinti_vetoPercentile_0.99_fadc_vetoPercentile_0.99_septem_vetoPercentile_0.99_line_vetoPercentile_0.99.h5 \
    --zMax 5 \
    --title "X-ray like clusters of CAST data after all vetoes" \
    --outpath /tmp/playground/ \
    --filterNoisyPixels \
    --filterEnergy 12.0 \
    --suffix "_all_vetoes"

Available here: resources/background_rate_test_correct_time_no_tracking/background_cluster_centers_all_vetoes.pdf

There we can see that we indeed now have fewer than 10,000 clusters, compared to the ~10,500 we had when using all data (including tracking).

1.7.

Continue from yesterday:

- [X] Now generate the other likelihood outputs we need for more expected limit cases from sec. [BROKEN LINK: sec:meetings:10_03_23] in StatusAndProgress: -> All done, path to files below.
  - [X] Calculate expected limits also for the following cases:
    - [X] Septem, line combinations without the FADC (done yesterday)
    - [X] Best case (lowest row of below) with lnL efficiencies of:
      - [X] 0.7
      - [X] 0.9

The latter has now also been implemented as functionality in likelihood and createAllLikelihoodCombinations (adjust signal efficiency from command line and add options to runner). Now run:

./createAllLikelihoodCombinations \
    --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
    --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
    --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
    --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
    --regions crGold --regions crAll \
    --signalEfficiency 0.7 --signalEfficiency 0.9 \
    --vetoSets "{+fkScinti, +fkFadc, +fkSeptem, fkLineVeto}" \
    --fadcVetoPercentile 0.9 \
    --out /t/lhood_outputs_adaptive_fadc \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --multiprocessing \
    --dryRun

to reproduce numbers for the best expected limit case together with a lnL signal efficiency of 70 and 90%.

Finally, these files are also in: ./resources/lhood_limits_automation_correct_duration/ which means now all the setups we initially care about are there.

Let's look at the background rate we get from the 70% vs the 90% case:

plotBackgroundRate \
    likelihood_cdl2018_Run2_crGold_signalEff_0.7_scinti_fadc_septem_line_vetoPercentile_0.9.h5 \
    likelihood_cdl2018_Run3_crGold_signalEff_0.7_scinti_fadc_septem_line_vetoPercentile_0.9.h5 \
    likelihood_cdl2018_Run2_crGold_signalEff_0.9_scinti_fadc_septem_line_vetoPercentile_0.9.h5 \
    likelihood_cdl2018_Run3_crGold_signalEff_0.9_scinti_fadc_septem_line_vetoPercentile_0.9.h5 \
    --names "0.7" --names "0.7" \
    --names "0.9" --names "0.9" \
    --centerChip 3 \
    --title "Background rate from CAST data, incl. all vetoes, 70vs90" \
    --showNumClusters --showTotalTime \
    --topMargin 1.5 --energyDset energyFromCharge \
    --outfile background_rate_cast_all_vetoes_70p_90p.pdf \
    --outpath . \
    --quiet

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 0.0 .. 12.0: 4.1861e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 12.0: 3.4884e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 0.0 .. 12.0: 9.3221e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 12.0: 7.7684e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 0.5 .. 2.5: 8.6185e-06 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.5 .. 2.5: 4.3093e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 0.5 .. 2.5: 1.4775e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.5 .. 2.5: 7.3873e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 0.5 .. 5.0: 1.8116e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.5 .. 5.0: 4.0259e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 0.5 .. 5.0: 3.4650e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.5 .. 5.0: 7.7000e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 0.0 .. 2.5: 1.4423e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 2.5: 5.7691e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 0.0 .. 2.5: 2.4273e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 2.5: 9.7090e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 4.0 .. 8.0: 4.3972e-06 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 4.0 .. 8.0: 1.0993e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 4.0 .. 8.0: 1.1785e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 4.0 .. 8.0: 2.9461e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 0.0 .. 8.0: 2.7790e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 8.0: 3.4738e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 0.0 .. 8.0: 5.3998e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 0.0 .. 8.0: 6.7497e-06 keV⁻¹·cm⁻²·s⁻¹

[INFO]:Dataset: 0.7
[INFO]: Integrated background rate in range: 2.0 .. 8.0: 1.3895e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 2.0 .. 8.0: 2.3159e-06 keV⁻¹·cm⁻²·s⁻¹
[INFO]:Dataset: 0.9
[INFO]: Integrated background rate in range: 2.0 .. 8.0: 3.0956e-05 cm⁻²·s⁻¹
[INFO]: Integrated background rate/keV in range: 2.0 .. 8.0: 5.1594e-06 keV⁻¹·cm⁻²·s⁻¹
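For reference, the rate/keV numbers in the log are simply the integrated rate divided by the width of the energy range. A small sketch for the 4 to 8 keV range, using the values printed above (the helper name `rate_per_kev` is made up for illustration):

```python
def rate_per_kev(integrated_rate, e_min, e_max):
    """Integrated rate [cm^-2 s^-1] divided by the range width [keV]."""
    return integrated_rate / (e_max - e_min)

# Integrated rates in 4.0 .. 8.0 keV taken from the log output above.
r70 = rate_per_kev(4.3972e-06, 4.0, 8.0)
r90 = rate_per_kev(1.1785e-05, 4.0, 8.0)
ratio = r90 / r70  # how much more background the 90% case lets through

print(f"70%: {r70:.4e} keV^-1 cm^-2 s^-1")
print(f"90%: {r90:.4e} keV^-1 cm^-2 s^-1")
print(f"ratio 90% / 70%: {ratio:.2f}")
```

Because the inputs are already rounded to five significant digits, the last digit can differ slightly from the logged rate/keV values.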

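This yielded the plot background_rate_cast_all_vetoes_70p_90p.pdf (see --outfile above), which shows quite an incredible change, especially in the 8 keV peak!

And in the 4 to 8 keV range we even almost got to the 1e-7 keV⁻¹·cm⁻²·s⁻¹ range for the 70% case (1.0993e-06 keV⁻¹·cm⁻²·s⁻¹; note however that in this case the total efficiency is only about 40% or so!).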

- [X] Verify that the signal efficiency used is written to output logL file
  - [X] If not implement -> It was not, now implemented.
- [X] read signal efficiency from logL file in mcmclimit and stop using the efficiency including ε in the context. Instead merge with the calculator for veto efficiencies. -> Implemented.

From meeting notes:

- [ ] Verify that those elements with lower efficiency indeed have \(R_T = 0\) at higher values! -> Just compute \(R_T = 0\) for all input files and output result, easiest.

1.8.

Old model from March 2022 ./resources/mlp_trained_march2022.pt :

Test set: Average loss: 0.9876 | Accuracy: 0.988 Cut value: 2.483305978775025

Test set: Average loss: 0.9876 | Accuracy: 0.988 Total efficiency = 0.8999892098945267

Test set: Average loss: 0.9995 | Accuracy: 0.999 Target: Ag-Ag-6kV eff = 0.9778990694345026

Test set: Average loss: 0.9916 | Accuracy: 0.992 Target: Al-Al-4kV eff = 0.9226669690441093

Test set: Average loss: 0.9402 | Accuracy: 0.940 Target: C-EPIC-0.6kV eff = 0.6790938280413843

Test set: Average loss: 0.9941 | Accuracy: 0.994 Target: Cu-EPIC-0.9kV eff = 0.8284986713906112

Test set: Average loss: 0.9871 | Accuracy: 0.987 Target: Cu-EPIC-2kV eff = 0.8687534321801208

Test set: Average loss: 1.0000 | Accuracy: 1.000 Target: Cu-Ni-15kV eff = 0.9939449541284404

Test set: Average loss: 0.9999 | Accuracy: 1.000 Target: Mn-Cr-12kV eff = 0.9938112429087158

Test set: Average loss: 1.0000 | Accuracy: 1.000 Target: Ti-Ti-9kV eff = 0.9947166683932456

New model from yesterday ./resources/mlp_trained_bsz8192_hidden_5000.pt :

Test set: Average loss: 0.9714 | Accuracy: 0.971 Cut value: 1.847297704219818

Test set: Average loss: 0.9714 | Accuracy: 0.971 Total efficiency = 0.8999892098945267

Test set: Average loss: 0.9945 | Accuracy: 0.994 Target: Ag-Ag-6kV eff = 0.9525769506084467

Test set: Average loss: 0.9804 | Accuracy: 0.980 Target: Al-Al-4kV eff = 0.9097403333711305

Test set: Average loss: 0.8990 | Accuracy: 0.899 Target: C-EPIC-0.6kV eff = 0.7640920442383161

Test set: Average loss: 0.9584 | Accuracy: 0.958 Target: Cu-EPIC-0.9kV eff = 0.8211913197519929

Test set: Average loss: 0.9636 | Accuracy: 0.964 Target: Cu-EPIC-2kV eff = 0.8543657331136738

Test set: Average loss: 0.9980 | Accuracy: 0.998 Target: Cu-Ni-15kV eff = 0.981651376146789

Test set: Average loss: 0.9978 | Accuracy: 0.998 Target: Mn-Cr-12kV eff = 0.9807117070654977

Test set: Average loss: 0.9982 | Accuracy: 0.998 Target: Ti-Ti-9kV eff = 0.9776235367243344

Calculated with determineCdlEfficiency.
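To illustrate why a single global cut value gives a fixed total efficiency but different efficiencies per target, here is a small sketch. It assumes that larger network outputs are more X-ray like (consistent with the cut value rising as the target efficiency drops) and uses toy Gaussian outputs for two made-up targets; it is not what determineCdlEfficiency does internally.

```python
import numpy as np

def global_cut(outputs, target_eff):
    """Cut value such that a fraction `target_eff` of `outputs` lies above it."""
    return float(np.quantile(outputs, 1.0 - target_eff))

def efficiency(outputs, cut):
    """Fraction of events passing the cut."""
    return float(np.mean(outputs >= cut))

rng = np.random.default_rng(0)
# Toy network outputs (hypothetical): low energy X-rays separate worse.
toy = {"low energy": rng.normal(3.0, 2.0, 10_000),
       "high energy": rng.normal(8.0, 2.0, 10_000)}
pooled = np.concatenate(list(toy.values()))

cut = global_cut(pooled, 0.9)
total_eff = efficiency(pooled, cut)
local_effs = {name: efficiency(out, cut) for name, out in toy.items()}
```

The pooled efficiency comes out at the requested 90%, while the per-target values scatter around it, as in the outputs above.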

To get a background rate estimate we use the simple functionality in the NN training tool itself. Note that it needs the total time as an input to scale the data correctly (the hardcoded value is that of Run-2; for Run-3 the totalTime argument must be used!).

Background rate at 95% for Run-2 data:

cd ~/CastData/ExternCode/TimepixAnalysis/Tools/NN_playground

./train_ingrid -f ~/CastData/data/DataRuns2017_Reco.h5 --ε 0.95

which yields:

Background rate at 90% for Run-2 data:

cd ~/CastData/ExternCode/TimepixAnalysis/Tools/NN_playground

./train_ingrid -f ~/CastData/data/DataRuns2017_Reco.h5 --ε 0.9

Test set: Average loss: 0.9714 | Accuracy: 0.971 Cut value: 1.847297704219818

Test set: Average loss: 0.9714 | Accuracy: 0.971 Total efficiency = 0.8999892098945267

Test set: Average loss: 0.9945 | Accuracy: 0.994 Target: Ag-Ag-6kV eff = 0.9525769506084467

Test set: Average loss: 0.9804 | Accuracy: 0.980 Target: Al-Al-4kV eff = 0.9097403333711305

Test set: Average loss: 0.8990 | Accuracy: 0.899 Target: C-EPIC-0.6kV eff = 0.7640920442383161

Test set: Average loss: 0.9584 | Accuracy: 0.958 Target: Cu-EPIC-0.9kV eff = 0.8211913197519929

Test set: Average loss: 0.9636 | Accuracy: 0.964 Target: Cu-EPIC-2kV eff = 0.8543657331136738

Test set: Average loss: 0.9980 | Accuracy: 0.998 Target: Cu-Ni-15kV eff = 0.981651376146789

Test set: Average loss: 0.9978 | Accuracy: 0.998 Target: Mn-Cr-12kV eff = 0.9807117070654977

Test set: Average loss: 0.9982 | Accuracy: 0.998 Target: Ti-Ti-9kV eff = 0.9776235367243344

which yields:

Background rate at 80% for Run-2 data:

cd ~/CastData/ExternCode/TimepixAnalysis/Tools/NN_playground

./train_ingrid -f ~/CastData/data/DataRuns2017_Reco.h5 --ε 0.8

Test set: Average loss: 0.9714 | Accuracy: 0.971 Cut value: 3.556154251098633

Test set: Average loss: 0.9714 | Accuracy: 0.971 Total efficiency = 0.799991907420895

Test set: Average loss: 0.9945 | Accuracy: 0.994 Target: Ag-Ag-6kV eff = 0.856209735146743

Test set: Average loss: 0.9804 | Accuracy: 0.980 Target: Al-Al-4kV eff = 0.7955550515931511

Test set: Average loss: 0.8990 | Accuracy: 0.899 Target: C-EPIC-0.6kV eff = 0.6546557260078487

Test set: Average loss: 0.9584 | Accuracy: 0.958 Target: Cu-EPIC-0.9kV eff = 0.7030558015943313

Test set: Average loss: 0.9636 | Accuracy: 0.964 Target: Cu-EPIC-2kV eff = 0.7335529928610653

Test set: Average loss: 0.9980 | Accuracy: 0.998 Target: Cu-Ni-15kV eff = 0.9102752293577981

Test set: Average loss: 0.9978 | Accuracy: 0.998 Target: Mn-Cr-12kV eff = 0.9036616812790098

Test set: Average loss: 0.9982 | Accuracy: 0.998 Target: Ti-Ti-9kV eff = 0.8947477468144618

which yields:

Background rate at 70% for Run-2 data:

cd ~/CastData/ExternCode/TimepixAnalysis/Tools/NN_playground

./train_ingrid -f ~/CastData/data/DataRuns2017_Reco.h5 --ε 0.7

Test set: Average loss: 0.9714 | Accuracy: 0.971 Cut value: 5.098100709915161

Test set: Average loss: 0.9714 | Accuracy: 0.971 Total efficiency = 0.6999946049472634

Test set: Average loss: 0.9945 | Accuracy: 0.994 Target: Ag-Ag-6kV eff = 0.7380100214745884

Test set: Average loss: 0.9804 | Accuracy: 0.980 Target: Al-Al-4kV eff = 0.6931624900782402

Test set: Average loss: 0.8990 | Accuracy: 0.899 Target: C-EPIC-0.6kV eff = 0.5882090617195861

Test set: Average loss: 0.9584 | Accuracy: 0.958 Target: Cu-EPIC-0.9kV eff = 0.6312001771479185

Test set: Average loss: 0.9636 | Accuracy: 0.964 Target: Cu-EPIC-2kV eff = 0.6507413509060955

Test set: Average loss: 0.9980 | Accuracy: 0.998 Target: Cu-Ni-15kV eff = 0.7844036697247706

Test set: Average loss: 0.9978 | Accuracy: 0.998 Target: Mn-Cr-12kV eff = 0.7790613718411552

Test set: Average loss: 0.9982 | Accuracy: 0.998 Target: Ti-Ti-9kV eff = 0.7758209882937946

which yields:

- [X] Add background rates for new model using 95%, 90%, 80%
  - [X] And the outputs as above for the local efficiencies
- [ ] Implement selection of global vs. local target efficiency
- [ ] Check the efficiency we get when applying the model to the 55Fe calibration data (need same efficiency!). Cross check our helper program that does this for lnL method
- [ ] Check the background rate from the Run-3 data. Is it compatible? Or does it break down?

Practical:

- [ ] Move the model logic over to likelihood.nim as a replacement for the fkAggressive veto
  - [ ] including the selection of the target efficiency
- [X] Clean up veto system in likelihood for better insertion of NN
  - [X] add lnL as a form of veto
  - [X] add vetoes for MLP and ConvNet (in principle)
  - [ ] move NN code to main ingrid module
  - [ ] make MLP / ConvNet types accessible in likelihood if compiled on cpp backend (and with CUDA?)
  - [ ] add path to model file
  - [ ] adjust CutValueInterpolator to make it work for both lnL as well as NN. Idea is the same!

Questions:

- [ ] Is there still a place for something like an equivalent for the likelihood morphing? In this case likely based on just interpolating the cut values?

1.9.

TODOs from yesterday:

- [X] Add background rates for new model using 95%, 90%, 80%
  - [X] And the outputs as above for the local efficiencies
- [X] Implement selection of global vs. local target efficiency
- [X] Check the efficiency we get when applying the model to the 55Fe calibration data (need same efficiency!). Cross check our helper program that does this for lnL method -> Wrote effective_eff_55fe.nim in NN_playground -> Efficiency in 55Fe data is abysmal! ~40-55 % at 95%!
- [ ] Check the background rate from the Run-3 data. Is it compatible? Or does it break down?

Practical:

- [ ] Move the model logic over to likelihood.nim as a replacement for the fkAggressive veto
  - [ ] including the selection of the target efficiency
- [X] Clean up veto system in likelihood for better insertion of NN
  - [X] add lnL as a form of veto
  - [X] add vetoes for MLP and ConvNet (in principle)
  - [ ] move NN code to main ingrid module
  - [X] make MLP / ConvNet types accessible in likelihood if compiled on cpp backend (and with CUDA?)
  - [X] add path to model file
  - [X] adjust CutValueInterpolator to make it work for both lnL as well as NN. Idea is the same!

Questions:

- [ ] Is there still a place for something like an equivalent for the likelihood morphing? In this case likely based on just interpolating the cut values?
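One way the "just interpolate the cut values" idea could look, purely as a sketch: take one local cut value per reference energy and interpolate linearly in energy. The energies and cut values below are placeholders, not taken from the real model, and this is not the CutValueInterpolator from likelihood.

```python
import numpy as np

# Placeholder (energy [keV], local cut value) pairs; NOT from the real model.
energies = np.array([0.3, 1.5, 4.5, 8.0])
cuts = np.array([1.0, 2.0, 3.0, 5.0])

def interpolated_cut(energy):
    """Linearly interpolate the cut value between the reference energies.
    Outside the covered range np.interp clamps to the first / last value."""
    return float(np.interp(energy, energies, cuts))

for e in (0.3, 3.0, 10.0):
    print(e, interpolated_cut(e))
```

A cluster of energy E would then be kept if its network output exceeds interpolated_cut(E), which avoids jumps in the cut value at fixed energy boundaries.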

With the refactor of likelihood we can now do things like disable the lnL cut itself and only use the vetoes.

NOTE: All outputs below that were placed in /t/testing can be found in ./resources/nn_testing_outputs/.

For example look at only using the FADC (with a much harsher cut than usual):

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_fadc.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --fadcveto \
    --vetoPercentile 0.75 \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_fadc.h5 \
    --combName "onlyFadc" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only FADC veto" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_fadc_veto.pdf \
    --outpath /t/testing/ --quiet

The plot is here:

The issue is that there are a couple of runs that have no FADC / in which the FADC was extremely noisy, hence all we really see is the background distribution of those.

But for the more interesting stuff, let's try to create the background rate using the NN veto at 95% global efficiency:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_0.95.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.95 \
    --nnCutKind global \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_0.95.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ 95%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_0.95.pdf \
    --outpath /t/testing/ --quiet

At 90% global efficiency:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_0.9.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.9 \
    --nnCutKind global \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_0.9.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ 90%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_0.9.pdf \
    --outpath /t/testing/ --quiet

At 80% global efficiency:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_0.8.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.8 \
    --nnCutKind global \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_0.8.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ 80%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_0.8.pdf \
    --outpath /t/testing/ --quiet

At 70% global efficiency:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_0.7.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.7 \
    --nnCutKind global \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_0.7.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ 70%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_0.7.pdf \
    --outpath /t/testing/ --quiet

NOTE: Make sure to set neuralNetCutKind to local in the config file!

And local 95%:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_local_0.95.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.95 \
    --nnCutKind local \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_local_0.95.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ local 95%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_local_0.95.pdf \
    --outpath /t/testing/ --quiet

And local 80%:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 \
    --h5out /tmp/testing/test_run2_only_mlp_local_0.8.h5 \
    --region crGold \
    --cdlYear 2018 \
    --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
    --mlp ~/org/resources/mlp_trained_bsz8192_hidden_5000.pt \
    --nnSignalEff 0.8 \
    --nnCutKind local \
    --calibFile ~/CastData/data/CalibrationRuns2017_Reco.h5

plotBackgroundRate /t/testing/test_run2_only_mlp_local_0.8.h5 \
    --combName "onlyMLP" \
    --combYear 2017 \
    --centerChip 3 \
    --title "Background rate from CAST data, only MLP @ local 80%" \
    --showNumClusters \
    --showTotalTime \
    --topMargin 1.5 \
    --energyDset energyFromCharge \
    --outfile background_rate_only_mlp_local_0.8.pdf \
    --outpath /t/testing/ --quiet

1.10.

Continue on from yesterday:

- [ ] Implement 55Fe calibration data into the training process. E.g. add about 1000 events per calibration run to the training data as signal target to have a wider distribution of what real X-rays should look like. Hopefully that increases our efficiency!
- [ ] It seems like only very few events pass the cuts in readCalibData (e.g. what we use for the effective efficiency check and in mixed data training). Why is that? Especially for the escape peak often fewer than 300 events are valid! So little statistics, really? Looking at spectra, e.g. in ~/CastData/ExternCode/TimepixAnalysis/Analysis/ingrid/out/CalibrationRuns2018_Raw_2020-04-28_15-06-54, there often really is this little statistics in the escape peak (peaks at less than 50 per bin!). How do these spectra look without any cuts? Are our cuts rubbish? Quick look:

plotData --h5file ~/CastData/data/CalibrationRuns2017_Reco.h5 \
    --runType rtCalibration \
    --config ~/CastData/ExternCode/TimepixAnalysis/Plotting/karaPlot/config.toml \
    --region crSilver \
    --ingrid --separateRuns

seems to support that there simply isn't much more statistics available!

[X]First training with a mixed set of data, using, per run:

- min(500, total) escape peak (after cuts)

- min(500, total) photo peak (after cuts)

- 6000 background

- all CDL

-> all of these are of course shuffled and then split into training and test datasets.

The resulting model is in: ./resources/nn_devel_mixing/trained_mlp_mixed_data.pt
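The per-run selection and the shuffle / split described above can be sketched like this. It is an illustration with toy feature arrays only: the function names, the 3 feature columns and the 20% test fraction are assumptions, not what the training tool actually uses.

```python
import numpy as np

def sample_run(escape, photo, background, rng, n_peak=500, n_bkg=6000):
    """Per run: min(500, total) escape and photo peak events, 6000 background."""
    def take(events, n_max):
        n = min(n_max, len(events))
        return events[rng.choice(len(events), size=n, replace=False)]
    return take(escape, n_peak), take(photo, n_peak), take(background, n_bkg)

def shuffle_and_split(features, labels, rng, test_fraction=0.2):
    """Shuffle all events, then split into (train, test). Fraction is assumed."""
    perm = rng.permutation(len(features))
    n_test = int(len(features) * test_fraction)
    test, train = perm[:n_test], perm[n_test:]
    return (features[train], labels[train]), (features[test], labels[test])

rng = np.random.default_rng(1)
# Toy events with 3 features each (hypothetical; real inputs are cluster properties).
escape = rng.normal(size=(300, 3))       # fewer than 500 pass the cuts
photo = rng.normal(size=(2000, 3))
background = rng.normal(size=(10_000, 3))
cdl = rng.normal(size=(1500, 3))         # "all CDL"

esc, pho, bkg = sample_run(escape, photo, background, rng)
features = np.concatenate([esc, pho, cdl, bkg])
labels = np.concatenate([np.ones(len(esc) + len(pho) + len(cdl)), np.zeros(len(bkg))])
train, test = shuffle_and_split(features, labels, rng)
```

For a real training set the per-run samples of all calibration runs would be concatenated before the shuffle.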

The generated plots are in: ./Figs/statusAndProgress/neuralNetworks/development/mixing_data/

Looking at these figures we can mainly see that the ROC curve is extremely 'clean', which fits the separation seen in the training and validation output distributions.

- [ ] effective efficiencies for 55Fe
- [ ] efficiencies of CDL data!
- [X] make loss / accuracy curves log10
- [ ] Implement snapshots of the model during training whenever the training and test (or only test) accuracy improves

As discussed in the meeting today (sec. [BROKEN LINK: sec:meetings:17_03_23] in notes), let's rerun all expected limits and add the new two, namely:

- [ ] redo all expected limit calculations with the following new cases:
  - 0.9 lnL + scinti + FADC@0.98 + line
  - 0.8 lnL + scinti + FADC@0.98 + line εcut:
    - 1.0, 1.2, 1.4, 1.6

The standard cases (lnL 80 + all veto combinations with different FADC settings):

./createAllLikelihoodCombinations \ --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \ --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \ --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --regions crGold --regions crAll \ --vetoSets "{fkLogL, fkScinti, fkFadc, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \ --fadcVetoPercentiles 0.9 --fadcVetoPercentiles 0.95 --fadcVetoPercentiles 0.99 \ --out /t/lhood_outputs_adaptive_fadc \ --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \ --multiprocessing

The no septem veto + different lnL efficiencies:

[X]0.9 lnL + scinti + FADC@0.98 + line

./createAllLikelihoodCombinations \ --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \ --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \ --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --regions crGold --regions crAll \ --vetoSets "{+fkLogL, +fkScinti, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \ --signalEfficiency 0.7 --signalEfficiency 0.9 \ --out /t/lhood_outputs_adaptive_fadc \ --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \ --multiprocessing

The older case of varying the lnL efficiency (here together with different FADC veto percentiles):

[X]add a case with less extreme FADC veto

./createAllLikelihoodCombinations \ --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \ --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \ --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --regions crGold --regions crAll \ --signalEfficiency 0.7 --signalEfficiency 0.9 \ --vetoSets "{+fkLogL, +fkScinti, +fkFadc, +fkSeptem, fkLineVeto}" \ --fadcVetoPercentile 0.95 --fadcVetoPercentile 0.99 \ --out /t/lhood_outputs_adaptive_fadc \ --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \ --multiprocessing

And finally different eccentricity cutoffs for the line veto:

./createAllLikelihoodCombinations \ --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \ --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \ --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --regions crGold --regions crAll \ --signalEfficiency 0.7 --signalEfficiency 0.8 --signalEfficiency 0.9 \ --vetoSets "{+fkLogL, +fkScinti, +fkFadc, fkLineVeto}" \ --eccentricityCutoff 1.0 --eccentricityCutoff 1.2 --eccentricityCutoff 1.4 --eccentricityCutoff 1.6 \ --out /t/lhood_outputs_adaptive_fadc \ --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \ --multiprocessing

The output H5 files will be placed in: ./resources/lhood_limits_automation_with_nn_support

1.11.

With the likelihood output files generated overnight in resources/lhood_limits_automation_with_nn_support it's now time to let the limits run.

I noticed something else was missing from these files: I forgot to re-add the actual vetoes in use to the output (because those were written manually).

[X] add flags to LikelihoodContext to auto serialize them
[X] update mcmclimit code to use the new serialized data as veto efficiency and veto usage
[X] rerun all limits with all the different setups.
[X] update runLimits to be smarter about what has been done. In principle we can now quit the limit calculation and it should continue automatically on a restart (with the last file worked on!)

The script we actually ran today. This will be part of the thesis (or a variation thereof).

#!/usr/bin/zsh
cd ~/CastData/ExternCode/TimepixAnalysis/Analysis/
./createAllLikelihoodCombinations \
  --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
  --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
  --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --regions crGold --regions crAll \
  --vetoSets "{fkLogL, fkScinti, fkFadc, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \
  --fadcVetoPercentiles 0.9 --fadcVetoPercentiles 0.95 --fadcVetoPercentiles 0.99 \
  --out /t/lhood_outputs_adaptive_fadc \
  --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
  --multiprocessing \
  --jobs 12
./createAllLikelihoodCombinations \
  --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
  --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
  --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --regions crGold --regions crAll \
  --vetoSets "{+fkLogL, +fkScinti, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \
  --signalEfficiency 0.7 --signalEfficiency 0.9 \
  --out /t/lhood_outputs_adaptive_fadc \
  --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
  --multiprocessing \
  --jobs 12
./createAllLikelihoodCombinations \
  --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
  --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
  --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --regions crGold --regions crAll \
  --signalEfficiency 0.7 --signalEfficiency 0.9 \
  --vetoSets "{+fkLogL, +fkScinti, +fkFadc, +fkSeptem, fkLineVeto}" \
  --fadcVetoPercentile 0.95 --fadcVetoPercentile 0.99 \
  --out /t/lhood_outputs_adaptive_fadc \
  --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
  --multiprocessing \
  --jobs 12
./createAllLikelihoodCombinations \
  --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \
  --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \
  --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --regions crGold --regions crAll \
  --signalEfficiency 0.7 --signalEfficiency 0.8 --signalEfficiency 0.9 \
  --vetoSets "{+fkLogL, +fkScinti, +fkFadc, fkLineVeto}" \
  --eccentricityCutoff 1.0 --eccentricityCutoff 1.2 --eccentricityCutoff 1.4 --eccentricityCutoff 1.6 \
  --out /t/lhood_outputs_adaptive_fadc \
  --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \
  --multiprocessing \
  --jobs 12

(all in ./resources/lhood_limits_automation_with_nn_support/)

And currently running:

./runLimits --path ~/org/resources/lhood_limits_automation_with_nn_support --nmc 1000

Train NN:

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath /tmp/trained_mlp_mixed_data.pt

NOTE: The one thing I just realized is: the accuracy we print is of course related to the actual prediction of the network, i.e. which of the two output neurons is the maximum value. So maybe our approach of only looking at one and adjusting based on that is just dumb and the network actually is much better than we think?

The numbers we see as accuracy actually make sense.

Consider predictBackground output:

Pred set: Average loss: 0.0169 | Accuracy: 0.9956
p inds len 1137 compared to all 260431

The 1137 clusters left after cuts (out of 260431) correspond exactly to the 99.56% accuracy, i.e. the fraction of background clusters that is correctly rejected (this is based on using the network's real prediction and not the output + cut value). The question here is: at what signal efficiency is this? From the CDL data it would seem to be at ~99%.
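The arithmetic behind that statement, as a small Python check. The function name is made up for this note; only the two numbers come from the output above.

```python
def background_accuracy(n_passing, n_total):
    """Fraction of background clusters correctly rejected by the network."""
    return 1.0 - n_passing / n_total


acc = background_accuracy(1137, 260431)
print(f"{acc:.4f}")  # 0.9956
```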

The network we trained today, including checkpoints is here: ./resources/nn_devel_mixing/18_03_23/

[X]Check what efficiency we get for calibration data instead of background -> Yeah, it is also at over 99% efficiency. So we get a 1e-5 background rate at 99% efficiency. At least that's not too bad.


The processed expected limit files are now here:

./resources/lhood_limits_automation_with_nn_support/limits/

with the logL output files in the lhood folder.

We'll continue on later with the processed.txt file as a guide there.

1.12.

The expected limits for resources/lhood_limits_automation_with_nn_support/limits/ are still running because our processed continuation check was incorrect (looking at full path & not actually skipping files!).
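A minimal sketch of a continuation check that is robust against differing directory prefixes. This is not the runLimits code; the function name and the assumption that the list of processed files holds one path per entry are made up for illustration.

```python
import os


def files_to_process(candidates, processed_entries):
    """Return the candidate files whose basename has not been processed yet.

    Comparing basenames instead of full paths means a file is still skipped
    if it was processed from a different directory prefix (assumed to be the
    situation that broke the original check).
    """
    done = {os.path.basename(p.strip()) for p in processed_entries if p.strip()}
    return [f for f in candidates if os.path.basename(f) not in done]


todo = files_to_process(
    ["/t/lhood/run2_a.h5", "/t/lhood/run2_b.h5"],
    ["/home/user/org/resources/lhood/run2_a.h5\n"],
)
print(todo)  # ['/t/lhood/run2_b.h5']
```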

Back to the NN: Let's look at the output of the network for both output neurons. Are they really essentially a mirror of one another?

predictAll in train_ingrid creates a plot of the different data kinds (55Fe, CDL, background) and each neuron's output prediction. This yields the following plot by running:

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath ~/org/resources/nn_devel_mixing/18_03_23/trained_mlp_mixed_datacheckpoint_epoch_95000_loss_0.0117_acc_0.9974.pt \ --predict

where we can see the following points:

- the two neurons are almost perfect mirrors, but not exactly

- selecting the argmax of the two neurons almost certainly gives us the neuron that has a positive value, due to the mirror nature around 0. It could be different (e.g. both neurons giving a positive or a negative value), but looking at the data this does not seem to happen (if at all, then very rarely).

- a cut value of 0 should reproduce pretty exactly the standard neural network prediction of picking the argmax
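To make the last two points concrete, a small Python sketch. It assumes neuron 0 is the signal neuron and neuron 1 the background neuron, which is an assumption for this example; the values are invented.

```python
def predict_argmax(outputs):
    """Standard prediction: index of the larger output (0 = signal, 1 = background)."""
    return 0 if outputs[0] > outputs[1] else 1


def predict_cut(outputs, cut=0.0):
    """Prediction from a cut on the first neuron only."""
    return 0 if outputs[0] > cut else 1


# For perfectly mirrored outputs (x, -x) both approaches always agree.
mirrored = [(x, -x) for x in (-12.5, -0.3, 0.7, 8.1)]
assert all(predict_argmax(o) == predict_cut(o) for o in mirrored)

# They only disagree if both neurons have the same sign, e.g. both positive.
both_positive = (0.2, 0.5)
print(predict_argmax(both_positive), predict_cut(both_positive))  # 1 0
```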

Question: Can the usage of both neurons be beneficial given the small but existing differences in the distributions? Not sure how if so.

An earlier checkpoint (the one before the extreme jump in the loss

value based on the loss figure; need to regenerate it, but similar to

except as log10) yields the following neuron output:

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath ~/org/resources/nn_devel_mixing/18_03_23/trained_mlp_mixed_datacheckpoint_epoch_65000_loss_0.0103_acc_0.9977.pt \ --predict

We can clearly see that at this stage in training the two types of signal data are predicted quite differently! In that sense the latest model is actually much more like what we want, i.e. same prediction for all different kinds of X-rays!

What does the case with the worst loss look like?

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath ~/org/resources/nn_devel_mixing/18_03_23/trained_mlp_mixed_datacheckpoint_epoch_70000_loss_0.9683_acc_0.9977.pt \ --predict

Interestingly essentially the same. But the accuracy is the same as before, only the loss is different. Not sure why that might be.
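One possible explanation (an assumption, not checked against this checkpoint): the cross entropy loss is dominated by how confidently the few misclassified events are wrong, while the accuracy only counts them. A toy example in Python with invented numbers:

```python
import math


def cross_entropy(logits, target):
    """Softmax cross entropy for one event, computed in a numerically stable way."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def evaluate(batch):
    """Return (mean loss, accuracy) for a list of (logits, target) pairs."""
    losses = [cross_entropy(l, t) for l, t in batch]
    correct = sum(1 for l, t in batch if l.index(max(l)) == t)
    return sum(losses) / len(batch), correct / len(batch)


# 1000 events with 2 misclassified ones in both cases. Only the size of the
# wrong outputs differs between the two batches.
mild = [([4.0, -4.0], 0)] * 998 + [([-1.0, 1.0], 0)] * 2
severe = [([4.0, -4.0], 0)] * 998 + [([-400.0, 400.0], 0)] * 2

print(evaluate(mild))    # small loss, accuracy 0.998
print(evaluate(severe))  # loss larger by orders of magnitude, accuracy 0.998
```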

Training the network again after the charge bug was fixed:

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath /t/nn_training/trained_model_charge_cut_bug_fixed.pt

The resulting model and checkpoints are stored here:

./resources/nn_devel_mixing/19_03_23_charge_bug_fixed/

with the generated plots:

./Figs/statusAndProgress/neuralNetworks/development/charge_cut_bug_fixed

Looking at the loss plot, at around epoch 83000 the training data started to outpace the test data (test didn't get any worse though and test accuracy improved slightly).

Also the all_prediction.pdf plot showing how CDL and 55Fe data is predicted is interesting. The CDL data is skewed significantly more to the right than 55Fe, which explains the once again present difference in 55Fe efficiency for a given CDL efficiency:

./effective_eff_55fe \ -f ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --model ~/org/resources/nn_devel_mixing/19_03_23_charge_bug_fixed/trained_model_charge_cut_bug_fixedcheckpoint_epoch_100000_loss_0.0102_acc_0.9978.pt \ --ε 0.95

Run: 83 for target: signal Keeping : 759 of 916 = 0.8286026200873362

Run: 88 for target: signal Keeping : 763 of 911 = 0.8375411635565313

Run: 93 for target: signal Keeping : 640 of 787 = 0.8132147395171537

Run: 96 for target: signal Keeping : 4591 of 5635 = 0.8147293700088731

Run: 102 for target: signal Keeping : 1269 of 1588 = 0.7991183879093199

Run: 108 for target: signal Keeping : 2450 of 3055 = 0.8019639934533551

Run: 110 for target: signal Keeping : 1244 of 1554 = 0.8005148005148005

Run: 116 for target: signal Keeping : 1404 of 1717 = 0.8177052999417589

Run: 118 for target: signal Keeping : 1351 of 1651 = 0.8182919442761962

Run: 120 for target: signal Keeping : 2784 of 3413 = 0.8157046586580721

Run: 122 for target: signal Keeping : 4670 of 5640 = 0.8280141843971631

Run: 126 for target: signal Keeping : 2079 of 2596 = 0.8008474576271186

Run: 128 for target: signal Keeping : 6379 of 7899 = 0.8075705785542474

Run: 145 for target: signal Keeping : 2950 of 3646 = 0.8091058694459682

Run: 147 for target: signal Keeping : 1670 of 2107 = 0.7925961082107261

Run: 149 for target: signal Keeping : 1536 of 1936 = 0.7933884297520661

Run: 151 for target: signal Keeping : 1454 of 1839 = 0.790647090810223

Run: 153 for target: signal Keeping : 1515 of 1908 = 0.7940251572327044

Run: 155 for target: signal Keeping : 1386 of 1777 = 0.7799662352279122

Run: 157 for target: signal Keeping : 1395 of 1817 = 0.7677490368739681

Run: 159 for target: signal Keeping : 2805 of 3634 = 0.7718767198679142

Run: 161 for target: signal Keeping : 2825 of 3632 = 0.7778083700440529

Run: 163 for target: signal Keeping : 1437 of 1841 = 0.7805540467137425

Run: 165 for target: signal Keeping : 3071 of 3881 = 0.7912909044060809

Run: 167 for target: signal Keeping : 1557 of 2008 = 0.775398406374502

Run: 169 for target: signal Keeping : 4644 of 5828 = 0.7968428277282087

Run: 171 for target: signal Keeping : 1561 of 1956 = 0.7980572597137015

Run: 173 for target: signal Keeping : 1468 of 1820 = 0.8065934065934066

Run: 175 for target: signal Keeping : 1602 of 2015 = 0.7950372208436725

Run: 177 for target: signal Keeping : 1557 of 1955 = 0.7964194373401534

Run: 179 for target: signal Keeping : 1301 of 1671 = 0.7785757031717534

Run: 181 for target: signal Keeping : 2685 of 3426 = 0.7837127845884413

Run: 183 for target: signal Keeping : 2821 of 3550 = 0.7946478873239436

Run: 185 for target: signal Keeping : 3063 of 3856 = 0.7943464730290456

Run: 187 for target: signal Keeping : 2891 of 3616 = 0.7995022123893806

This is for a local efficiency. So once again 95% in CDL corresponds to about 80% in 55Fe. Not ideal.
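To summarize such an output without reading it line by line, a small Python helper can parse the per-run lines. The regular expression is written against the lines as they appear above; the helper name is made up and nothing here is part of effective_eff_55fe.

```python
import re
from statistics import mean

LINE = re.compile(r"Run:\s*(\d+) for target: signal Keeping : (\d+) of (\d+)")


def parse_efficiencies(text):
    """Map run number -> kept / total, recomputed from the printed counts."""
    result = {}
    for m in LINE.finditer(text):
        run, kept, total = (int(g) for g in m.groups())
        result[run] = kept / total
    return result


sample = """Run: 83 for target: signal Keeping : 759 of 916 = 0.8286026200873362
Run: 88 for target: signal Keeping : 763 of 911 = 0.8375411635565313
Run: 93 for target: signal Keeping : 640 of 787 = 0.8132147395171537"""

effs = parse_efficiencies(sample)
print(f"runs: {len(effs)}, mean: {mean(effs.values()):.3f}, "
      f"min: {min(effs.values()):.3f}, max: {max(effs.values()):.3f}")
```

Feeding the full output instead of the three sample lines gives the spread over all runs, which is the number to compare against the 95% target.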

Let's try to train a network that also includes the total charge, so it has some idea of the gas gain in the events.

Otherwise we leave the settings as is:

./train_ingrid \ ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath /t/nn_training/trained_model_incl_totalCharge.pt

Interestingly, when including the total charge, the loss of the test data remains lower than that of the training set!

Models: ./resources/nn_devel_mixing/19_03_23_with_total_charge/

Plots: ./Figs/statusAndProgress/neuralNetworks/development/with_total_charge/

Looking at the total charge, we see essentially the same behavior of CDL and 55Fe data. The background distribution has changed a bit.

We could attempt to change the definition of our loss function. Currently we in no way enforce that our result should be close to our targets [1, 0] and [0, 1]. Using an MSE loss for example would make sure of that.
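A toy illustration of that point in Python, assuming the current loss is a softmax cross entropy evaluated on the raw neuron outputs (an assumption for this sketch). Cross entropy only depends on the difference between the two outputs, whereas MSE penalizes any distance from the target vector.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(logits, target):
    """Softmax cross entropy for a single event with class index `target`."""
    return -math.log(softmax(logits)[target])


def mse(outputs, target_vec):
    """Mean squared error between raw outputs and a one-hot target."""
    return sum((o - t) ** 2 for o, t in zip(outputs, target_vec)) / len(outputs)


a = [1.0, 0.0]    # exactly on target [1, 0]
b = [11.0, 10.0]  # same difference between the neurons, but far from [1, 0]

print(cross_entropy(a, 0), cross_entropy(b, 0))  # identical values
print(mse(a, [1, 0]), mse(b, [1, 0]))            # 0.0 and 100.0
```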

Now training with MSE loss. -> Couldn't get anything sensible out of MSE loss. Chatted with BingChat and it couldn't quite help me (different learning rate etc.), but it did suggest to try L1 loss (mean absolute error), which I am running with now.

L1 loss:

./resources/nn_devel_mixing/19_03_23_l1_loss/

./Figs/statusAndProgress/neuralNetworks/development/l1_loss/

The all prediction plot is interesting. We see the same-ish behavior in this case as in the cross entropy loss. In the training dataset we can even more clearly see two distinct peaks. However, especially the effective efficiencies in the 55Fe data are all over the place:

./effective_eff_55fe \ -f ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --model ~/org/resources/nn_devel_mixing/19_03_23_l1_loss/trained_model_incl_totalCharge_l1_losscheckpoint_epoch_100000_loss_0.0157_acc_0.9971.pt \ --ε 0.95

Run: 83 for target: signal Keeping : 781 of 916 = 0.8526200873362445

Run: 88 for target: signal Keeping : 827 of 911 = 0.9077936333699231

Run: 93 for target: signal Keeping : 612 of 787 = 0.7776365946632783

Run: 96 for target: signal Keeping : 4700 of 5635 = 0.8340727595385981

Run: 102 for target: signal Keeping : 1292 of 1588 = 0.8136020151133502

Run: 108 for target: signal Keeping : 2376 of 3055 = 0.7777414075286416

Run: 110 for target: signal Keeping : 1222 of 1554 = 0.7863577863577863

Run: 116 for target: signal Keeping : 1453 of 1717 = 0.8462434478741991

Run: 118 for target: signal Keeping : 1376 of 1651 = 0.8334342822531798

Run: 120 for target: signal Keeping : 2966 of 3413 = 0.8690301787283914

Run: 122 for target: signal Keeping : 5049 of 5640 = 0.8952127659574468

Run: 126 for target: signal Keeping : 2157 of 2596 = 0.8308936825885979

Run: 128 for target: signal Keeping : 6546 of 7899 = 0.8287124952525636

Run: 145 for target: signal Keeping : 2729 of 3646 = 0.7484914975315414

Run: 147 for target: signal Keeping : 1517 of 2107 = 0.7199810156620788

Run: 149 for target: signal Keeping : 1152 of 1936 = 0.5950413223140496

Run: 151 for target: signal Keeping : 1135 of 1839 = 0.6171832517672649

Run: 153 for target: signal Keeping : 1091 of 1908 = 0.5718029350104822

Run: 155 for target: signal Keeping : 974 of 1777 = 0.5481148002250985

Run: 157 for target: signal Keeping : 978 of 1817 = 0.5382498624105668

Run: 159 for target: signal Keeping : 2083 of 3634 = 0.5731975784259769

Run: 161 for target: signal Keeping : 2152 of 3632 = 0.5925110132158591

Run: 163 for target: signal Keeping : 1264 of 1841 = 0.6865833785985878

Run: 165 for target: signal Keeping : 2929 of 3881 = 0.7547023962896161

Run: 167 for target: signal Keeping : 1467 of 2008 = 0.7305776892430279

Run: 169 for target: signal Keeping : 4458 of 5828 = 0.7649279341111874

Run: 171 for target: signal Keeping : 1495 of 1956 = 0.7643149284253579

Run: 173 for target: signal Keeping : 1401 of 1820 = 0.7697802197802198

Run: 175 for target: signal Keeping : 1566 of 2015 = 0.7771712158808933

Run: 177 for target: signal Keeping : 1561 of 1955 = 0.7984654731457801

Run: 179 for target: signal Keeping : 1105 of 1671 = 0.6612806702573309

Run: 181 for target: signal Keeping : 2425 of 3426 = 0.7078225335668418

Run: 183 for target: signal Keeping : 2543 of 3550 = 0.716338028169014

Run: 185 for target: signal Keeping : 3033 of 3856 = 0.7865663900414938

Run: 187 for target: signal Keeping : 2712 of 3616 = 0.75

So definitely worse in that aspect.

Let's try cross entropy again, but with L1 or L2 regularization.

./train_ingrid \ ~/CastData/data/CalibrationRuns2017_Reco.h5 \ ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --back ~/CastData/data/DataRuns2017_Reco.h5 \ --back ~/CastData/data/DataRuns2018_Reco.h5 \ --ε 0.95 \ --modelOutpath ~/org/resources/nn_devel_mixing/19_03_23_l2_regularization/trained_model_incl_totalCharge_l2_regularization.pt

First attempt with:

SGDOptions.init(0.005).momentum(0.2).weight_decay(0.01)

does not really converge. I guess that weight decay is too large.. :) Trying again with a weight decay of 0.001. This seems to work better.

Oh, it broke between epoch 10000 and 15000, but got better again at 20000 (though worse than before). It stayed on a plateau above the previous level afterwards until the end. Also the distributions of the outputs are quite different now.
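For reference, a sketch of what the weight decay parameter does in a PyTorch-style SGD update with momentum: it adds an L2 penalty gradient `weight_decay * w` to the loss gradient. This is a scalar toy version in Python written from the documented update rule, not code taken from the training tool.

```python
def sgd_step(w, grad, buf, lr=0.005, momentum=0.2, weight_decay=0.01):
    """One SGD update for a single scalar weight.

    g   = grad + weight_decay * w   (L2 regularization term)
    buf = momentum * buf + g        (momentum buffer, dampening 0)
    w   = w - lr * buf
    """
    g = grad + weight_decay * w
    buf = momentum * buf + g
    return w - lr * buf, buf


# With zero loss gradient the weight still shrinks towards 0 on every step,
# ten times faster for weight_decay = 0.01 than for 0.001.
w_strong, _ = sgd_step(1.0, 0.0, 0.0, weight_decay=0.01)
w_weak, _ = sgd_step(1.0, 0.0, 0.0, weight_decay=0.001)
print(w_strong, w_weak)
```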

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --back ~/CastData/data/DataRuns2017_Reco.h5 \
  --back ~/CastData/data/DataRuns2018_Reco.h5 \
  --ε 0.95 \
  --modelOutpath ~/org/resources/nn_devel_mixing/19_03_23_l2_regularization/trained_model_incl_totalCharge_l2_regularizationcheckpoint_epoch_100000_loss_0.0261_acc_0.9963.pt \
  --predict \
  --plotPath ~/org/Figs/statusAndProgress/neuralNetworks/development/l2_regularization

./train_ingrid ~/CastData/data/CalibrationRuns2017_Reco.h5 \
  ~/CastData/data/CalibrationRuns2018_Reco.h5 \
  --back ~/CastData/data/DataRuns2017_Reco.h5 \
  --back ~/CastData/data/DataRuns2018_Reco.h5 \
  --ε 0.95 \
  --modelOutpath ~/org/resources/nn_devel_mixing/19_03_23_l2_regularization/trained_model_incl_totalCharge_l2_regularizationcheckpoint_epoch_10000_loss_0.0156_acc_0.9964.pt \
  --predict \
  --plotPath ~/org/Figs/statusAndProgress/neuralNetworks/development/l2_regularization

./resources/nn_devel_mixing/19_03_23_l2_regularization/

./Figs/statusAndProgress/neuralNetworks/development/l2_regularization/

Looking at the prediction of the final checkpoint (*_final_checkpoint.pdf) we see that we still have the same kind of shift in the data. However, after epoch 10000 we see a much clearer overlap between the two (but likely also more background?).

Still interesting, maybe L2 reg is useful if optimized to a good parameter.

Let's look at the effective efficiencies of this particular checkpoint and compare with the very last one:

First the last:

./effective_eff_55fe \ -f ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --model ~/org/resources/nn_devel_mixing/19_03_23_l2_regularization/trained_model_incl_totalCharge_l2_regularizationcheckpoint_epoch_100000_loss_0.0261_acc_0.9963.pt \ --ε 0.95

Run: 118 for target: signal Keeping : 1357 of 1651 = 0.8219261053906723

Run: 120 for target: signal Keeping : 2828 of 3413 = 0.8285965426311164

Run: 122 for target: signal Keeping : 4668 of 5640 = 0.8276595744680851

Run: 126 for target: signal Keeping : 2097 of 2596 = 0.8077812018489985

Run: 128 for target: signal Keeping : 6418 of 7899 = 0.8125079123939739

Run: 145 for target: signal Keeping : 2960 of 3646 = 0.811848601206802

Run: 147 for target: signal Keeping : 1731 of 2107 = 0.8215472235405791

Run: 149 for target: signal Keeping : 1588 of 1936 = 0.8202479338842975

Run: 151 for target: signal Keeping : 1482 of 1839 = 0.8058727569331158

Run: 153 for target: signal Keeping : 1565 of 1908 = 0.820230607966457

Run: 155 for target: signal Keeping : 1434 of 1777 = 0.806978052898143

Run: 157 for target: signal Keeping : 1457 of 1817 = 0.8018712162905889

Run: 159 for target: signal Keeping : 2914 of 3634 = 0.8018712162905889

Run: 161 for target: signal Keeping : 2929 of 3632 = 0.8064427312775331

Run: 163 for target: signal Keeping : 1474 of 1841 = 0.8006518196632265

Run: 165 for target: signal Keeping : 3134 of 3881 = 0.8075238340633857

Run: 167 for target: signal Keeping : 1609 of 2008 = 0.8012948207171314

Run: 169 for target: signal Keeping : 4738 of 5828 = 0.8129718599862732

Run: 171 for target: signal Keeping : 1591 of 1956 = 0.8133946830265849

Run: 173 for target: signal Keeping : 1465 of 1820 = 0.804945054945055

Run: 175 for target: signal Keeping : 1650 of 2015 = 0.8188585607940446

Run: 177 for target: signal Keeping : 1576 of 1955 = 0.8061381074168797

Run: 179 for target: signal Keeping : 1339 of 1671 = 0.8013165769000599

Run: 181 for target: signal Keeping : 2740 of 3426 = 0.7997664915353182

Run: 183 for target: signal Keeping : 2856 of 3550 = 0.8045070422535211

Run: 185 for target: signal Keeping : 3146 of 3856 = 0.8158713692946058

Run: 187 for target: signal Keeping : 2962 of 3616 = 0.8191371681415929

Once again in the ballpark of 80% while at 95% for CDL. And for epoch 10,000?

./effective_eff_55fe \ -f ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --model ~/org/resources/nn_devel_mixing/19_03_23_l2_regularization/trained_model_incl_totalCharge_l2_regularizationcheckpoint_epoch_10000_loss_0.0156_acc_0.9964.pt \ --ε 0.95

Run: 118 for target: signal Keeping : 1357 of 1651 = 0.8219261053906723

Run: 120 for target: signal Keeping : 2790 of 3413 = 0.8174626428362145

Run: 122 for target: signal Keeping : 4626 of 5640 = 0.8202127659574469

Run: 126 for target: signal Keeping : 2092 of 2596 = 0.8058551617873652

Run: 128 for target: signal Keeping : 6377 of 7899 = 0.8073173819470819

Run: 145 for target: signal Keeping : 2974 of 3646 = 0.8156884256719693

Run: 147 for target: signal Keeping : 1735 of 2107 = 0.8234456573327005

Run: 149 for target: signal Keeping : 1606 of 1936 = 0.8295454545454546

Run: 151 for target: signal Keeping : 1485 of 1839 = 0.8075040783034257

Run: 153 for target: signal Keeping : 1575 of 1908 = 0.8254716981132075

Run: 155 for target: signal Keeping : 1444 of 1777 = 0.8126055149127743

Run: 157 for target: signal Keeping : 1478 of 1817 = 0.8134287286736379

Run: 159 for target: signal Keeping : 2932 of 3634 = 0.8068244358833242

Run: 161 for target: signal Keeping : 2942 of 3632 = 0.8100220264317181

Run: 163 for target: signal Keeping : 1484 of 1841 = 0.8060836501901141

Run: 165 for target: signal Keeping : 3134 of 3881 = 0.8075238340633857

Run: 167 for target: signal Keeping : 1612 of 2008 = 0.8027888446215139

Run: 169 for target: signal Keeping : 4700 of 5828 = 0.8064516129032258

Run: 171 for target: signal Keeping : 1582 of 1956 = 0.8087934560327198

Run: 173 for target: signal Keeping : 1469 of 1820 = 0.8071428571428572

Run: 175 for target: signal Keeping : 1630 of 2015 = 0.8089330024813896

Run: 177 for target: signal Keeping : 1571 of 1955 = 0.8035805626598466

Run: 179 for target: signal Keeping : 1366 of 1671 = 0.817474566128067

Run: 181 for target: signal Keeping : 2734 of 3426 = 0.7980151780502043

Run: 183 for target: signal Keeping : 2858 of 3550 = 0.8050704225352112

Run: 185 for target: signal Keeping : 3122 of 3856 = 0.8096473029045643

Run: 187 for target: signal Keeping : 2937 of 3616 = 0.8122234513274337

Interesting! Despite the much nicer overlap in the prediction at this checkpoint, the end result is not that different. Not quite sure what to make of that.

Next we try Adam, starting with this:

var optimizer = Adam.init( model.parameters(), AdamOptions.init(0.005) )

./resources/nn_devel_mixing/19_03_23_adam_optim/

./Figs/statusAndProgress/neuralNetworks/development/adam_optim/

-> Outputs are very funny. Extremely wide, need --clampOutput O(10000) or more. CDL and 55Fe are quite separated though!

Enough for today.

[X] Try L1 and L2 regularization of the network (weight decay parameter)
[ ] Try L1 regularization
[ ] Try Adam optimizer
[ ] Try L2 with a value slightly larger and slightly smaller than 0.001

1.12.1. TODOs [/]

[ ] If we want to include the energy into the NN training at some point we'd have to make sure to use the correct real energy for the CDL data and not the energyFromCharge case! -> But currently we don't use the energy at all anyway.
[ ] Using the energy could be a useful studying tool I imagine. Would allow to investigate behavior if e.g. only energy is changed etc.

[ ] Understand why the seemingly nice L2 reg example at checkpoint 10,000 still shows such a distinction between CDL and 55Fe in the effective efficiencies, despite the distributions 'promising' a difference? Maybe one bin is just too big?

1.12.2. DONE Bug in withLogLFilterCuts? [/]

I just noticed that in the withLogLFilterCuts the following line:

chargeCut = data[igTotalCharge][i].float > cuts.minCharge and data[igTotalCharge][i] < cuts.maxCharge

is still present even for the fitByRun case. The body of the template is inserted after the data array is filled. This means that the cuts are applied to the combined data. That combined data then is further filtered by this charge cut. For the fitByRun case however, the minCharge and maxCharge field of the cuts variable will be set to the values seen in the last run!

Therefore the cut wrongly removes many clusters based on the wrong charge cut in this case!
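A toy model of this bug in Python, with invented charge values and cut windows. It is not the Nim template, only a sketch of the mechanism: per-run cuts are applied while filling, and afterwards the combined data is filtered once more with whatever cut values were set last.

```python
def fill_per_run(runs):
    """Apply each run's own (min_charge, max_charge) window while filling."""
    kept = []
    for charges, (lo, hi) in runs:
        kept.extend(c for c in charges if lo < c < hi)
    return kept


def fill_then_last_cut(runs):
    """Buggy variant: after filling, cut the combined data with the LAST run's window."""
    combined = fill_per_run(runs)
    last_lo, last_hi = runs[-1][1]      # the cut values left over from the final run
    return [c for c in combined if last_lo < c < last_hi]


# Two runs with different gas gain, hence different charge windows (made-up numbers).
runs = [
    ([10, 20, 30], (5, 35)),
    ([100, 110, 120], (95, 125)),
]

print(fill_per_run(runs))        # [10, 20, 30, 100, 110, 120]
print(fill_then_last_cut(runs))  # [100, 110, 120]
```

All clusters of the first run are lost in the second variant, even though each of them passed its own run's cut.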

The effect of this needs to be investigated ASAP. Both what the CDL distributions look like before and after, as well as what this implies for the lnL cut method!

Which tool generated CDL distributions by run? cdl_spectrum_creation.

But cdl_spectrum_creation uses the readCutCDL procedure in cdl_utils. The heart of it is:

let cutTab = getXrayCleaningCuts()
let grp = h5f[(recoDataChipBase(runNumber) & $chip).grp_str]
let cut = cutTab[$tfKind]
result = cutOnProperties(h5f,
                         grp,
                         cut.cutTo,
                         ("rmsTransverse", cut.minRms, cut.maxRms),
                         ("length", 0.0, cut.maxLength),
                         ("hits", cut.minPix, Inf),
                         ("eccentricity", 0.0, cut.maxEccentricity))

from the h5f.getCdlCutIdxs(runNumber, chip, tfKind) call, i.e. it manually only applies the X-ray cleaning cuts! So it only ever looks at the distributions of those and never actually the full LogLFilterCuts equivalent of the above!

So we might have never noticed cutting away too much for each spectrum, ugh.

[X] We'll do the following: Add a set of plots that show for each ingrid property:

- Raw data

- cut using

  - readCutCDL

  - withXrayReferenceCut

  - withLogLFilterCut

and then compare what we see. -> Instead of trying to implement this into cdl_spectrum_creation we wrote a separate small plotting script here: ./../CastData/ExternCode/TimepixAnalysis/Plotting/plotCdl/plotCdlDifferentCuts.nim

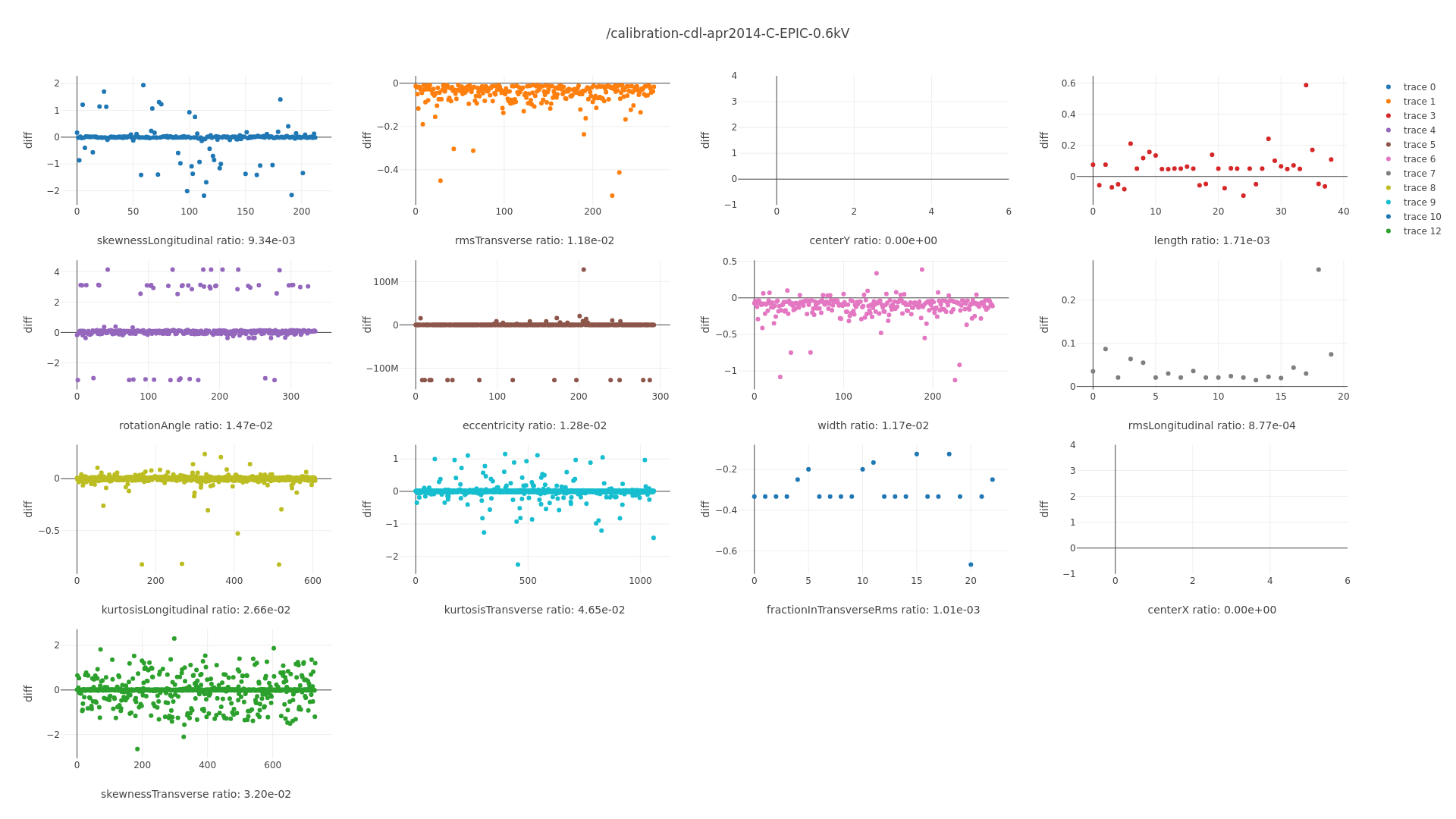

./plotCdlDifferentCuts -f ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 -c ~/CastData/data/CDL_2019/calibration-cdl-2018.h5

generates the files found in ./Figs/statusAndProgress/cdlCuts/with_charge_cut_bug/ today (before fixing the charge cut bug).

NOTE: The plots have been updated and now include the cleaning cut case mentioned a paragraph down! Especially look at the following two plots

- Figs/statusAndProgress/cdlCuts/with_charge_cut_bug/Cu-Ni-15kV_totalCharge_histogram_by_different_cut_approaches.pdf

- Figs/statusAndProgress/cdlCuts/with_charge_cut_bug/Cu-Ni-15kV_rmsTransverse_histogram_by_different_cut_approaches.pdf

The total charge plot indicates how much is thrown away when comparing the LogLCuts & XrayCuts with the CDL cuts, and the rmsTransverse plot indicates what percentage of the signal is lost between the two.

The big question looking at this plot right now though is why the X-ray reference cut behaves exactly the same way as the LogL cut does! The 'last cuts' should only be applied to all data in the case of the LogL cut usage!

-> The reason is that the X-ray reference cut case uses what I think is the wrong set of cuts. The idea should have been to reproduce the same cuts as the CDL applies! But it's exactly only those cuts that contain the charge cut and are intended to cut to the main peak of the spectrum…

I mean I suppose it makes sense from the name, now that I think about it. We'll add a withXrayCleaningCuts.

So, with the cleaning cut introduced, we get the behavior we would have expected. The LogL filter and XrayRef cuts lose precisely the peaks of all runs that are not the last one.

We'll fix it by not applying the charge cut in the case where we use fitByRun.

The new plots are in: Figs/statusAndProgress/cdlCuts/charge_cut_bug_fixed/ and the same plots:

- Figs/statusAndProgress/cdlCuts/charge_cut_bug_fixed/Cu-Ni-15kV_totalCharge_histogram_by_different_cut_approaches.pdf

- Figs/statusAndProgress/cdlCuts/charge_cut_bug_fixed/Cu-Ni-15kV_rmsTransverse_histogram_by_different_cut_approaches.pdf

We can see we now keep the correct information!

This has implications for all the background rates and all the limits to an extent of course.

[X] Generate likelihood output with only lnL cut for Run-2 and Run-3 at 80% and compare with background rate from all likelihood combinations generated yesterday. That should give us an idea if it's necessary to regenerate all outputs and limits again. First need to regenerate the likelihood values in all the data files though.

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 --cdlYear 2018 --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 --computeLogL
likelihood -f ~/CastData/data/DataRuns2018_Reco.h5 --cdlYear 2018 --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 --computeLogL
likelihood -f ~/CastData/data/CalibrationRuns2017_Reco.h5 --cdlYear 2018 --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 --computeLogL
likelihood -f ~/CastData/data/CalibrationRuns2018_Reco.h5 --cdlYear 2018 --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 --computeLogL

and now for the likelihood calls:

./createAllLikelihoodCombinations \ --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \ --f2018 ~/CastData/data/DataRuns2018_Reco.h5 \ --c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \ --c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \ --regions crGold \ --signalEfficiency 0.8 \ --vetoSets "{fkLogL}" \ --out /t/playground \ --cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \ --dryRun

and finally compare the background rates:

    plotBackgroundRate \
        ~/org/resources/lhood_limits_automation_with_nn_support/lhood/likelihood_cdl2018_Run2_crGold_lnL.h5 \
        ~/org/resources/lhood_limits_automation_with_nn_support/lhood/likelihood_cdl2018_Run3_crGold_lnL.h5 \
        /t/playground/likelihood_cdl2018_Run2_crGold_signalEff_0.8_lnL.h5 \
        /t/playground/likelihood_cdl2018_Run3_crGold_signalEff_0.8_lnL.h5 \
        --names "ChargeBug" --names "ChargeBug" \
        --names "Fixed" --names "Fixed" \
        --centerChip 3 \
        --title "Background rate from CAST data, lnL@80, charge cut bug" \
        --showNumClusters --showTotalTime \
        --topMargin 1.5 --energyDset energyFromCharge \
        --outfile background_rate_cast_lnL_80_charge_cut_bug.pdf \
        --outpath /t/playground/ \
        --quiet

The generated plot is:

As we can see we remove a few clusters, but the difference is absolutely minute. That fortunately means we don't need to rerun all the limits again!

Might still be beneficial for the NN training as the impact on other variables might be bigger.

1.13.

Continuing from yesterday, but before we do that, we need to generate the new expected limits table using the script in StatusAndProgress.org sec. [BROKEN LINK: sec:limit:expected_limits_different_setups_test].

- [X] Generate limits table

- [ ] Regenerate all limits once more to have them with the correct eccentricity cut off value in the files -> Should be done, but not priority right now. Our band aid fix relying on the filename is fine for now.

- [ ] continue NN training / investigation

- [ ] Update systematics due to determineEffectiveEfficiency using fixed code (correct energies & data frames) in thesis

- [ ] fix that same code for fitByRun

1.13.1. NN training

Let's try to reduce the number of neurons on the hidden layer of the network and see where that gets us in the output distribution.

(back using SGD without L2 reg):

    ./train_ingrid \
        ~/CastData/data/CalibrationRuns2017_Reco.h5 \
        ~/CastData/data/CalibrationRuns2018_Reco.h5 \
        --back ~/CastData/data/DataRuns2017_Reco.h5 \
        --back ~/CastData/data/DataRuns2018_Reco.h5 \
        --ε 0.95 \
        --modelOutpath ~/org/resources/nn_devel_mixing/20_03_23_hidden_layer_100neurons/trained_model_hidden_layer_100.pt \
        --plotPath ~/org/Figs/statusAndProgress/neuralNetworks/development/hidden_layer_100neurons/

The CDL vs. 55Fe distribution is again slightly different (tested on checkpoint 35000). Btw: also good to know that we can easily run e.g. a prediction of a checkpoint while the training is ongoing. Not a problem whatsoever.

Next test a network that only uses the three variables used for the lnL cut! Back using 500 hidden neurons. Let's try that training while the other one is still running…

If this one shows the same distinction in 55Fe vs CDL data, that is actually more damning for our current approach, in some sense, than anything else. If not, however, then we can analyze which variable is the main contributor in giving us that separation in the predictions!

    ./train_ingrid \
        ~/CastData/data/CalibrationRuns2017_Reco.h5 \
        ~/CastData/data/CalibrationRuns2018_Reco.h5 \
        --back ~/CastData/data/DataRuns2017_Reco.h5 \
        --back ~/CastData/data/DataRuns2018_Reco.h5 \
        --ε 0.95 \
        --modelOutpath ~/org/resources/nn_devel_mixing/20_03_23_only_lnL_vars/trained_model_only_lnL_vars.pt \
        --plotPath ~/org/Figs/statusAndProgress/neuralNetworks/development/only_lnL_vars

It seems like in this case the prediction is actually even in the opposite direction! Now the CDL data is more "background like" than the 55Fe data:

./Figs/statusAndProgress/neuralNetworks/development/only_lnL_vars/all_predictions.pdf

What do the effective 55Fe numbers say in this case?

./effective_eff_55fe -f ~/CastData/data/CalibrationRuns2017_Reco.h5 --model ~/org/resources/nn_devel_mixing/20_03_23_only_lnL_vars/trained_model_only_lnL_varscheckpoint_epoch_100000_loss_0.1237_acc_0.9504.pt --ε 0.95

    Error: unhandled cpp exception: Could not run 'aten::empty_strided' with arguments from the 'CUDA' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::empty_strided' is only available for these backends: [CPU, Meta, BackendSelect, Python, Named, Conjugate, Negative, ZeroTensor, ADInplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, AutogradLazy, AutogradXPU, AutogradMLC, AutogradHPU, AutogradNestedTensor, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, Tracer, AutocastCPU, Autocast, Batched, VmapMode, Functionalize, PythonTLSSnapshot].

    CPU: registered at aten/src/ATen/RegisterCPU.cpp:21249 [kernel]
    Meta: registered at aten/src/ATen/RegisterMeta.cpp:15264 [kernel]
    BackendSelect: registered at aten/src/ATen/RegisterBackendSelect.cpp:606 [kernel]
    Python: registered at ../aten/src/ATen/core/PythonFallbackKernel.cpp:77 [backend fallback]
    Named: registered at ../aten/src/ATen/core/NamedRegistrations.cpp:7 [backend fallback]
    Conjugate: fallthrough registered at ../aten/src/ATen/ConjugateFallback.cpp:22 [kernel]
    Negative: fallthrough registered at ../aten/src/ATen/native/NegateFallback.cpp:22 [kernel]
    ZeroTensor: fallthrough registered at ../aten/src/ATen/ZeroTensorFallback.cpp:90 [kernel]
    ADInplaceOrView: fallthrough registered at ../aten/src/ATen/core/VariableFallbackKernel.cpp:64 [backend fallback]
    AutogradOther: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradCPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradCUDA: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradXLA: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradLazy: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradXPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradMLC: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradHPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradNestedTensor: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradPrivateUse1: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradPrivateUse2: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    AutogradPrivateUse3: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:12095 [autograd kernel]
    Tracer: registered at ../torch/csrc/autograd/generated/TraceType_2.cpp:12541 [kernel]
    AutocastCPU: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:462 [backend fallback]
    Autocast: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:305 [backend fallback]
    Batched: registered at ../aten/src/ATen/BatchingRegistrations.cpp:1059 [backend fallback]
    VmapMode: fallthrough registered at ../aten/src/ATen/VmapModeRegistrations.cpp:33 [backend fallback]
    Functionalize: registered at ../aten/src/ATen/FunctionalizeFallbackKernel.cpp:52 [backend fallback]
    PythonTLSSnapshot: registered at ../aten/src/ATen/core/PythonFallbackKernel.cpp:81 [backend fallback]

Uhh, this fails with a weird error… I love the "If you are a Facebook employee" line!

Oh, never mind, I simply forgot the -d:cuda flag when compiling, oops.

    Run: 83 for target: signal Keeping : 823 of 916 = 0.898471615720524
    Run: 88 for target: signal Keeping : 820 of 911 = 0.9001097694840834
    Run: 93 for target: signal Keeping : 692 of 787 = 0.8792884371029225
    Run: 96 for target: signal Keeping : 5079 of 5635 = 0.9013309671694765
    Run: 102 for target: signal Keeping : 1409 of 1588 = 0.8872795969773299
    Run: 108 for target: signal Keeping : 2714 of 3055 = 0.888379705400982
    Run: 110 for target: signal Keeping : 1388 of 1554 = 0.8931788931788932
    Run: 116 for target: signal Keeping : 1541 of 1717 = 0.8974956319161328
    Run: 118 for target: signal Keeping : 1480 of 1651 = 0.8964264082374318
    Run: 120 for target: signal Keeping : 3052 of 3413 = 0.8942279519484324
    Run: 122 for target: signal Keeping : 4991 of 5640 = 0.8849290780141844
    Run: 126 for target: signal Keeping : 2274 of 2596 = 0.8759630200308166
    Run: 128 for target: signal Keeping : 6973 of 7899 = 0.8827699708823902
    Run: 145 for target: signal Keeping : 3287 of 3646 = 0.9015359297860669
    Run: 147 for target: signal Keeping : 1887 of 2107 = 0.8955861414333175
    Run: 149 for target: signal Keeping : 1753 of 1936 = 0.9054752066115702
    Run: 151 for target: signal Keeping : 1662 of 1839 = 0.9037520391517129
    Run: 153 for target: signal Keeping : 1731 of 1908 = 0.9072327044025157

The numbers are hovering around 90% for the 95% desired. Interesting. And not what we might have expected. I suppose the different distributions in the CDL output then are related to different CDL targets. Some are vastly more left than others? What would the prediction look like if we restrict ourselves to the MnCr12kV target?
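To put a single number on "hovering around 90%", the per-run output above can be summarized with a few lines of Python. This is only a sketch: the log lines are copied from the output above, while the regular expression and the summary statistics are my own choice and not part of effective_eff_55fe.

```python
import re
from statistics import mean, stdev

# Per-run output copied from the effective_eff_55fe log above.
LOG = """
Run: 83 for target: signal Keeping : 823 of 916 = 0.898471615720524
Run: 88 for target: signal Keeping : 820 of 911 = 0.9001097694840834
Run: 93 for target: signal Keeping : 692 of 787 = 0.8792884371029225
Run: 96 for target: signal Keeping : 5079 of 5635 = 0.9013309671694765
Run: 102 for target: signal Keeping : 1409 of 1588 = 0.8872795969773299
Run: 108 for target: signal Keeping : 2714 of 3055 = 0.888379705400982
Run: 110 for target: signal Keeping : 1388 of 1554 = 0.8931788931788932
Run: 116 for target: signal Keeping : 1541 of 1717 = 0.8974956319161328
Run: 118 for target: signal Keeping : 1480 of 1651 = 0.8964264082374318
Run: 120 for target: signal Keeping : 3052 of 3413 = 0.8942279519484324
Run: 122 for target: signal Keeping : 4991 of 5640 = 0.8849290780141844
Run: 126 for target: signal Keeping : 2274 of 2596 = 0.8759630200308166
Run: 128 for target: signal Keeping : 6973 of 7899 = 0.8827699708823902
Run: 145 for target: signal Keeping : 3287 of 3646 = 0.9015359297860669
Run: 147 for target: signal Keeping : 1887 of 2107 = 0.8955861414333175
Run: 149 for target: signal Keeping : 1753 of 1936 = 0.9054752066115702
Run: 151 for target: signal Keeping : 1662 of 1839 = 0.9037520391517129
Run: 153 for target: signal Keeping : 1731 of 1908 = 0.9072327044025157
"""

# Matches the run number and the "kept of total" counts, whether or not
# "Run" and "Keeping" sit on the same line.
PATTERN = re.compile(r"Run:\s*(\d+).*?Keeping\s*:\s*(\d+)\s+of\s+(\d+)", re.DOTALL)

runs = [(int(r), int(k), int(n)) for r, k, n in PATTERN.findall(LOG)]
effs = [k / n for _, k, n in runs]

# Pooled efficiency weights every run by its number of clusters.
pooled = sum(k for _, k, _ in runs) / sum(n for _, _, n in runs)

print(f"runs parsed        : {len(runs)}")
print(f"per-run efficiency : mean = {mean(effs):.4f}, std = {stdev(effs):.4f}")
print(f"range              : {min(effs):.4f} .. {max(effs):.4f}")
print(f"pooled efficiency  : {pooled:.4f}")
```

The pooled value comes out at roughly 0.89, with all runs between about 0.876 and 0.907, so every single run sits below the 95% target.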

Modified one line in predictAll, added this:

.filter(f{`Target` == "Mn-Cr-12kV"})

Let's run that on the same model (last checkpoint) and see how it compares in 55Fe vs CDL.

Indeed, the CDL data now is more compatible with the 55Fe data (and likely slightly more to the right explaining the 90% for the target 95).
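The mechanism can be illustrated with a toy example. The sketch below assumes that the cut value is taken as a quantile of a reference sample (here standing in for the CDL predictions) and then applied to a second sample (standing in for 55Fe). Both samples are purely synthetic Gaussians with made-up parameters, and the function names are hypothetical, not those of the actual tools.

```python
import random

def cut_at_efficiency(reference_scores, eps):
    """Cut such that a fraction `eps` of the reference scores lies at or above it."""
    ordered = sorted(reference_scores)
    idx = int(round((1.0 - eps) * len(ordered)))
    return ordered[min(idx, len(ordered) - 1)]

def efficiency(scores, cut):
    """Fraction of scores passing the cut."""
    return sum(s >= cut for s in scores) / len(scores)

rng = random.Random(42)
# Synthetic stand-ins for the network output (assumed values, not real data):
# the reference sample sits slightly further to the right than the other one.
reference = [rng.gauss(0.90, 0.05) for _ in range(20000)]
other = [rng.gauss(0.88, 0.05) for _ in range(20000)]

cut = cut_at_efficiency(reference, 0.95)
eff_reference = efficiency(reference, cut)
eff_other = efficiency(other, cut)

print(f"cut value            : {cut:.4f}")
print(f"efficiency reference : {eff_reference:.4f}")
print(f"efficiency other     : {eff_other:.4f}")
```

A small shift of the reference distribution towards the signal side is enough to turn a nominal 95% into a noticeably lower effective efficiency on the other sample.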

Be that as it may, the difference in the ROC curves of one of our "good" networks and this one is pretty stunning. Where the good ones are almost a right angled triangle, this one is pretty smooth: Figs/statusAndProgress/neuralNetworks/development/only_lnL_vars/roc_curve.pdf
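For reference, the shape difference can be reproduced with synthetic scores. The following is a minimal sketch of how a ROC curve is built by scanning a cut over the classifier output. It assumes the common convention of true positive rate against false positive rate, which may differ from the axes used in the plots here, and all score distributions are invented for illustration.

```python
import random

def roc_curve(signal, background):
    """Scan a cut from high to low scores and return (fpr, tpr) lists."""
    scored = sorted([(s, 1) for s in signal] + [(b, 0) for b in background],
                    reverse=True)
    tp = fp = 0
    fpr, tpr = [0.0], [0.0]
    for _, is_signal in scored:
        if is_signal:
            tp += 1
        else:
            fp += 1
        fpr.append(fp / len(background))
        tpr.append(tp / len(signal))
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the curve via the trapezoidal rule."""
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
               for i in range(1, len(fpr)))

rng = random.Random(1)
n = 2000
# Well separated outputs: the curve hugs the corner ("right-angled triangle").
good_sig = [rng.gauss(0.95, 0.03) for _ in range(n)]
good_bkg = [rng.gauss(0.05, 0.03) for _ in range(n)]
# Strongly overlapping outputs: the curve is smooth.
smooth_sig = [rng.gauss(0.60, 0.20) for _ in range(n)]
smooth_bkg = [rng.gauss(0.40, 0.20) for _ in range(n)]

auc_good = auc(*roc_curve(good_sig, good_bkg))
auc_smooth = auc(*roc_curve(smooth_sig, smooth_bkg))
print(f"AUC well separated : {auc_good:.4f}")
print(f"AUC overlapping    : {auc_smooth:.4f}")
```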

1.13.2. DONE Expected limits table

    cd $TPA/Tools/generateExpectedLimitsTable
    ./generateExpectedLimitsTable --path ~/org/resources/lhood_limits_automation_with_nn_support/limits
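As a cross-check for reading the table that follows: the "Total eff." column appears to be consistent (to within rounding of the listed values) with a plain product of the efficiencies of the active cuts and vetoes. The sketch below encodes that reading. It is inferred from the table values only, not taken from the generateExpectedLimitsTable source, the function name is hypothetical, and the scintillator veto is assumed to contribute no efficiency factor because none is listed.

```python
def total_efficiency(eps_lnl, fadc, eps_fadc, septem, line,
                     eps_septem, eps_line, eps_septem_line):
    """Product of the efficiencies of all active cuts (assumed reading of the table)."""
    eff = eps_lnl
    if fadc:
        eff *= eps_fadc
    # The septem and line vetoes are correlated, so the combined
    # efficiency is used when both are active.
    if septem and line:
        eff *= eps_septem_line
    elif septem:
        eff *= eps_septem
    elif line:
        eff *= eps_line
    return eff

# Veto efficiencies as listed in the table for eccLineCut = 0.
VETO = dict(eps_septem=0.7841, eps_line=0.8602, eps_septem_line=0.7325)

# (εlnL, FADC, εFADC, Septem, Line) -> value listed under "Total eff."
rows = [
    ((0.9, True, 0.98, False, True), 0.7587),
    ((0.9, False, 0.98, False, True), 0.7742),
    ((0.8, True, 0.98, True, True), 0.5743),
    ((0.8, True, 0.98, True, False), 0.6147),
    ((0.8, True, 0.98, False, False), 0.784),
]
for args, listed in rows:
    print(f"{args}: computed = {total_efficiency(*args, **VETO):.4f}, listed = {listed}")
```

For example, the first row gives 0.9 × 0.98 × 0.8602 ≈ 0.7587, in agreement with the listed value.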

| εlnL | Scinti | FADC | εFADC | Septem | Line | eccLineCut | εSeptem | εLine | εSeptemLine | Total eff. | Limit no signal | Expected Limit |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.9 | true | true | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.7587 | 3.7853e-21 | 7.9443e-23 |

| 0.9 | true | false | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.7742 | 3.6886e-21 | 8.0335e-23 |

| 0.9 | true | true | 0.98 | false | true | 1.2 | 0.7841 | 0.8794 | 0.7415 | 0.7757 | 3.6079e-21 | 8.1694e-23 |

| 0.8 | true | true | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6744 | 4.0556e-21 | 8.1916e-23 |

| 0.8 | true | true | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6744 | 4.0556e-21 | 8.1916e-23 |

| 0.9 | true | true | 0.98 | false | true | 1.4 | 0.7841 | 0.8946 | 0.7482 | 0.7891 | 3.5829e-21 | 8.3198e-23 |

| 0.8 | true | true | 0.98 | false | true | 1.2 | 0.7841 | 0.8794 | 0.7415 | 0.6895 | 3.9764e-21 | 8.3545e-23 |

| 0.8 | true | true | 0.9 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6193 | 4.4551e-21 | 8.4936e-23 |

| 0.9 | true | true | 0.98 | false | true | 1.6 | 0.7841 | 0.9076 | 0.754 | 0.8005 | 3.6208e-21 | 8.5169e-23 |

| 0.8 | true | true | 0.98 | false | true | 1.4 | 0.7841 | 0.8946 | 0.7482 | 0.7014 | 3.9491e-21 | 8.6022e-23 |

| 0.8 | true | true | 0.98 | false | true | 1.6 | 0.7841 | 0.9076 | 0.754 | 0.7115 | 3.9686e-21 | 8.6462e-23 |

| 0.9 | true | false | 0.98 | true | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6593 | 4.2012e-21 | 8.6684e-23 |

| 0.7 | true | true | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.5901 | 4.7365e-21 | 8.67e-23 |

| 0.9 | true | true | 0.98 | true | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6461 | 4.3995e-21 | 8.6766e-23 |

| 0.7 | true | false | 0.98 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6021 | 4.7491e-21 | 8.7482e-23 |

| 0.8 | true | true | 0.98 | true | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.5743 | 4.9249e-21 | 8.7699e-23 |

| 0.8 | true | true | 0.98 | false | false | 0 | 0.7841 | 0.8602 | 0.7325 | 0.784 | 3.6101e-21 | 8.8059e-23 |

| 0.8 | true | true | 0.8 | false | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.5505 | 5.1433e-21 | 8.855e-23 |

| 0.7 | true | true | 0.98 | false | true | 1.2 | 0.7841 | 0.8794 | 0.7415 | 0.6033 | 4.4939e-21 | 8.8649e-23 |

| 0.8 | true | true | 0.98 | true | false | 0 | 0.7841 | 0.8602 | 0.7325 | 0.6147 | 4.5808e-21 | 8.8894e-23 |

| 0.9 | true | false | 0.98 | true | false | 0 | 0.7841 | 0.8602 | 0.7325 | 0.7057 | 3.9383e-21 | 8.9504e-23 |

| 0.7 | true | true | 0.98 | false | true | 1.4 | 0.7841 | 0.8946 | 0.7482 | 0.6137 | 4.5694e-21 | 8.9715e-23 |

| 0.8 | true | true | 0.9 | true | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.5274 | 5.3406e-21 | 8.9906e-23 |

| 0.9 | true | true | 0.9 | true | true | 0 | 0.7841 | 0.8602 | 0.7325 | 0.5933 | 4.854e-21 | 9e-23 |